The World Wide Grid

By Jeff Schneider

Abstract

This article previews the coming of age of the World Wide Grid. It describes what the Grid is from a technical point of view and the benefits that business users will reap. It also addresses adoption curves and challenges.

Terms: “World Wide Grid”, “The Grid”, “InterGrid”, “IntraGrid”, “Software as a Service”, “Service Oriented Enterprise”, “Web Services”, “Service Network”, “Business Services”, “Technical Services”, “Web Service Provider”

Overview

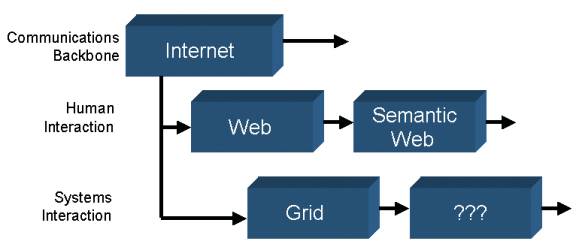

The World Wide Grid¹ (AKA, WWG or “The Grid”) is the name for a new network that sits on top of the Internet. This network complements the World Wide Web (WWW) by providing structured data services that are consumed by software rather than by humans. The Grid is based on a set of standardized, open protocols that enable system-to-system communications. The collection of standards is often known as “Web Services”. However, as we will see, it is the ubiquity of these web service protocols that enables the creation of a network. And, like all valuable networks, it will be subject to Metcalfe’s Law, which holds that the value of a network grows in proportion to the square of the number of its participants.

Evolution

Before we dig into the Grid, it is worthwhile to look at the not-so-distant past. Until now, computer networking has been dominated by two primary incarnations: the Internet and the Web.

The Internet

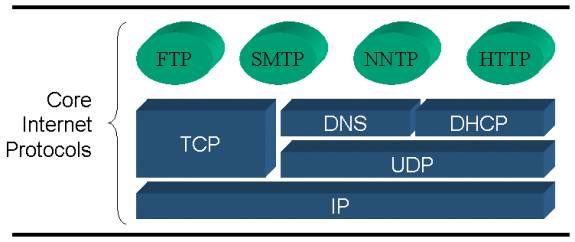

For discussion purposes, I will use the term Internet to refer to the set of interconnected networks that abstract away the hardware and networking equipment while exposing a set of protocols for general-purpose computer communications, primarily TCP/IP.

The Internet infrastructure and the core protocols have continued to advance over the years. The Internet will serve as the base communications infrastructure for the World Wide Grid.

The World Wide Web

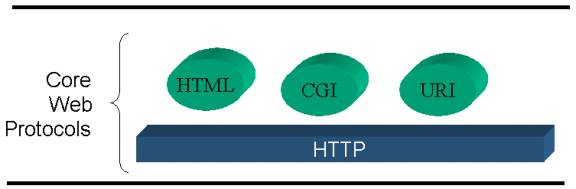

The Web is a set of protocols and standards focused on document transfer (HTTP) and rendering (HTML). These core protocols were layered on top of the Internet protocols.

The focus of the Web was to describe pages that could be linked together and read by people. The documents were semi-structured, and some basic facilities for distributed computing were built in (e.g., cookies for carrying a session ID).

The Creation of the Grid

The Web was a fine facility for presenting HTML pages to people and having them respond. However, the core facilities of the Web were not adequate for robust computer-to-computer communications.

The need for computers to talk to each other has been a long-standing problem. Examples include getting a company’s order management system to talk to its accounting system, or getting the systems of two different companies to talk to each other.

In this vein, computer scientists began combining concepts from the Web, such as structured documents and ubiquitously deployed open standards, with traditional distributed computing concepts (such as RPC, DCE and CORBA). What evolved was a series of steps that would someday link software systems around the globe in a uniform manner.

The creation of a uniform grid that could accommodate a diverse set of business needs would be no minor task. The effort would require a significant number of standards, protocols and services to facilitate communications, and it would require computer scientists, software engineers, document specialists and distributed computing experts to pitch in. Today, the Grid is far from complete, but its path and form are beginning to take shape. Halfway through 2003, two major steps are all but complete: a set of protocols known as web services has been standardized, and those protocols are now entering a period of ubiquitous deployment. These standards will continue to be revised over the coming years, but their current state will enable the primary Grid feature set.

Phase 1: Web Services

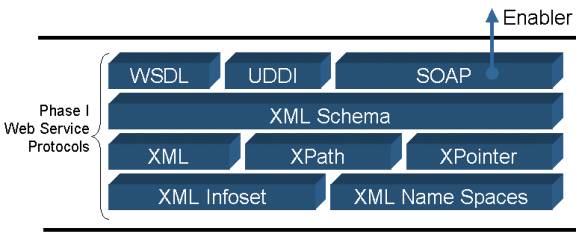

In an effort to overcome the inability of the Web protocols to support system-to-system communications, a new set of standards and protocols was created. The foundation for these standards was XML, a language that provided a standard way to describe data and behavior that could easily move between languages, platforms and hardware. Soon after the release of XML, the technical community released a series of related specifications that enabled a more sophisticated method for describing information (XML Infoset and XML Schema) as well as mechanisms for selecting and addressing parts of the data (XPath and XPointer).
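To make “accessing the data” concrete, here is a minimal sketch that selects parts of an XML document with path expressions. It assumes a made-up purchase-order format, and it uses Python’s `xml.etree.ElementTree`, which implements only a subset of XPath 1.0 (XPointer is not supported).

```python
import xml.etree.ElementTree as ET
from typing import Optional

# Hypothetical purchase order; element and attribute names are illustrative only.
ORDER_XML = """
<order id="1001">
  <customer>Acme Corp</customer>
  <line sku="A-17" qty="3" price="9.50"/>
  <line sku="B-02" qty="1" price="120.00"/>
</order>
"""


def order_total(xml_text: str) -> float:
    """Sum qty * price over every <line> element in the order."""
    root = ET.fromstring(xml_text)
    # ".//line" selects all <line> descendants, much like "//line" in full XPath.
    return sum(int(line.get("qty")) * float(line.get("price"))
               for line in root.findall(".//line"))


def price_of(xml_text: str, sku: str) -> Optional[float]:
    """Select one line item with an attribute predicate, e.g. line[@sku='B-02']."""
    root = ET.fromstring(xml_text)
    # Assumes the SKU contains no quote characters.
    line = root.find(f"line[@sku='{sku}']")
    return float(line.get("price")) if line is not None else None


print(order_total(ORDER_XML))       # 148.5
print(price_of(ORDER_XML, "B-02"))  # 120.0
```

Because the document describes itself through element and attribute names, the consumer code depends only on those names and not on the producer’s language or platform.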

The XML family set the stage for a primitive mechanism for performing distributed system calls. It was determined that XML could be used to describe and document the interface of a software system (WSDL). Other systems that wanted to call the service would then merely look up the interface, which would describe the calling semantics. A universal directory was created to house the interfaces to the services (UDDI). Lastly, a format for sending the messages was created (SOAP). It was decided that the system-to-system messaging structure should also leverage XML, and initial rules for carrying messages over existing Internet and Web standards were created (SOAP bindings for HTTP and SMTP).
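The WSDL-then-SOAP flow described above can be sketched in a few lines. This is a simplification under stated assumptions: the WSDL 1.1 fragment is hypothetical and trimmed to its `portType` (real documents also declare types, messages, bindings and service endpoints), and the request is wrapped RPC-style in a SOAP 1.1 envelope without the encoding and binding details a real toolkit would apply.

```python
import xml.etree.ElementTree as ET

WSDL_NS = "http://schemas.xmlsoap.org/wsdl/"
SOAP_ENV_NS = "http://schemas.xmlsoap.org/soap/envelope/"

# Hypothetical, trimmed WSDL 1.1 description of a quote service.
WSDL_TEXT = f"""
<definitions xmlns="{WSDL_NS}" targetNamespace="urn:example:quotes" name="QuoteService">
  <portType name="QuotePortType">
    <operation name="GetQuote"/>
    <operation name="ListSymbols"/>
  </portType>
</definitions>
"""


def list_operations(wsdl_text: str):
    """Step 1: read the published interface and return (namespace, operation names)."""
    root = ET.fromstring(wsdl_text)
    path = f"{{{WSDL_NS}}}portType/{{{WSDL_NS}}}operation"
    return root.get("targetNamespace"), [op.get("name") for op in root.iterfind(path)]


def build_request(target_ns: str, operation: str, params: dict) -> str:
    """Step 2: wrap the call in a SOAP 1.1 Envelope/Body as XML text."""
    env = ET.Element(f"{{{SOAP_ENV_NS}}}Envelope")
    body = ET.SubElement(env, f"{{{SOAP_ENV_NS}}}Body")
    call = ET.SubElement(body, f"{{{target_ns}}}{operation}")
    for name, value in params.items():
        ET.SubElement(call, f"{{{target_ns}}}{name}").text = str(value)
    return ET.tostring(env, encoding="unicode")


ns, ops = list_operations(WSDL_TEXT)
if "GetQuote" in ops:
    # Step 3 (not shown): POST this text to the endpoint over the HTTP binding.
    print(build_request(ns, "GetQuote", {"symbol": "IBM"}))
```

The caller never links against the service’s code. It only reads an XML interface description and emits an XML message, which is what allows the two sides to run on different languages and platforms.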

The first phase of web service protocols was effective at facilitating basic messaging between systems. However, these protocols failed to gain wide-scale adoption because they lacked mission-critical features. This led to modifications to SOAP and WSDL that allowed for a more extensible design.

Phase 2: Web Services

Many of the Phase 1 web service standards were solidified by the W3C, the non-profit organization best known for championing the World Wide Web standards. The base technology, XML, was well within its reach, since XML shares the same root as HTML (SGML). However, creating a mission-critical distributed computing infrastructure required different expertise. Two players, IBM and Microsoft, largely championed Phase 2 of the web service standards, with many others lending technology, patents, advice and experience along the way.

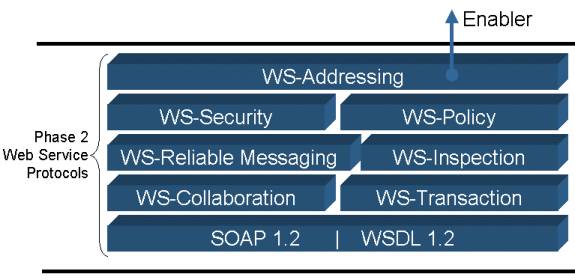

The problem being addressed was how to create a secure, reliable, transactional messaging infrastructure that could be used by businesses around the globe. The solution was to break the huge problem down into a set of smaller “concerns”. Each concern was given its own specification that remedied that piece of the problem. A series of new protocols was created, each named by prefixing “WS-” to the concern it addressed (for example, WS-Security and WS-Policy).

This new set of protocols² extended the Phase 1 protocols, adding the missing mission-critical features. Microsoft referred to the second set of protocols as “GXA”, its “Global XML Web Services Architecture”, while IBM called it all “web services”. Regardless of the name, a new infrastructure for system-to-system communications was born, and it would serve as the foundation for the Grid.

One of the new features was an addressing scheme (WS-Addressing) that sits on top of the IP addressing scheme used by the Web. This would enable a new level of application networking and begin to change how people viewed the protocols: they were no longer just a distributed computing environment, but a whole new network.
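The key idea is that the destination travels inside the SOAP message instead of only in the IP packet or HTTP request. Here is a minimal sketch that stamps WS-Addressing-style headers onto an envelope. The service URIs are hypothetical. The namespace is the one from the later W3C WS-Addressing Recommendation, and 2003-era drafts used different URIs.

```python
import uuid
import xml.etree.ElementTree as ET
from typing import Optional

SOAP_ENV = "http://schemas.xmlsoap.org/soap/envelope/"
# Namespace of the later W3C WS-Addressing Recommendation (assumed here).
WSA = "http://www.w3.org/2005/08/addressing"


def addressed_envelope(to: str, action: str, payload: ET.Element,
                       reply_to: Optional[str] = None) -> ET.Element:
    """Carry the logical destination in the SOAP header, independent of IP/HTTP."""
    env = ET.Element(f"{{{SOAP_ENV}}}Envelope")
    header = ET.SubElement(env, f"{{{SOAP_ENV}}}Header")
    ET.SubElement(header, f"{{{WSA}}}To").text = to
    ET.SubElement(header, f"{{{WSA}}}Action").text = action
    # A globally unique ID lets replies and retries be correlated end to end.
    ET.SubElement(header, f"{{{WSA}}}MessageID").text = f"urn:uuid:{uuid.uuid4()}"
    if reply_to is not None:
        # ReplyTo is an endpoint reference; only its Address child is set here.
        ref = ET.SubElement(header, f"{{{WSA}}}ReplyTo")
        ET.SubElement(ref, f"{{{WSA}}}Address").text = reply_to
    ET.SubElement(env, f"{{{SOAP_ENV}}}Body").append(payload)
    return env


# Hypothetical logical addresses and payload.
order = ET.Element("{urn:example:orders}SubmitOrder")
msg = addressed_envelope("urn:example:orders:intake", "urn:example:orders:Submit",
                         order, reply_to="http://partner.example.com/replies")
print(ET.tostring(msg, encoding="unicode"))
```

Because the address is part of the message, it survives hops through intermediaries and changes of transport. This is the property that makes the protocols behave like a network.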

The Service Network

With the release of a new network addressing scheme and the ubiquitous deployment of the web services stack, a new network was born. As with the Internet, a series of participants would have to play a role to make the network perform. Routers, gateways, directories and proxies are all needed, but not the kind that speak TCP/IP: they need to understand the new web service protocols. This network (which I am calling “The Service Network”) works above the Internet, yet relies on it at the most basic level.

The Service Network facilitates the movement of SOAP messages between applications and between companies. As with the Internet before it, network operations are performed both at the software level and at the hardware level. New hardware devices have been created that accelerate XML parsing as well as the routing, sniffing and securing of messages.
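A Service Network router differs from an IP router in what it inspects. The toy sketch below chooses the next hop from the `wsa:To` header inside the SOAP envelope. The `ServiceRouter` class, the routing table and the billing address are all hypothetical, and the WS-Addressing namespace is assumed to be the later W3C one.

```python
import xml.etree.ElementTree as ET
from typing import Any, Callable, Dict

SOAP_ENV = "http://schemas.xmlsoap.org/soap/envelope/"
WSA = "http://www.w3.org/2005/08/addressing"  # assumed WS-Addressing namespace

Handler = Callable[[ET.Element], Any]


class ServiceRouter:
    """Toy message-level router: the next hop comes from the wsa:To header
    inside the envelope, not from the TCP/IP destination of the packet."""

    def __init__(self) -> None:
        self.routes: Dict[str, Handler] = {}  # logical address -> next hop

    def register(self, address: str, handler: Handler) -> None:
        self.routes[address] = handler

    def route(self, envelope_xml: str) -> Any:
        env = ET.fromstring(envelope_xml)
        to = env.find(f"{{{SOAP_ENV}}}Header/{{{WSA}}}To")
        if to is None or not (to.text or "").strip():
            raise ValueError("message has no wsa:To header")
        address = to.text.strip()
        handler = self.routes.get(address)
        if handler is None:
            raise LookupError(f"no route for {address!r}")
        # Forward only the body; a real router might also rewrite headers.
        return handler(env.find(f"{{{SOAP_ENV}}}Body"))


SAMPLE = (
    f'<s:Envelope xmlns:s="{SOAP_ENV}" xmlns:a="{WSA}">'
    '<s:Header><a:To>urn:example:billing</a:To></s:Header>'
    '<s:Body><Invoice xmlns="urn:example:billing" amount="42"/></s:Body>'
    '</s:Envelope>'
)

router = ServiceRouter()
# Hypothetical next hop: a local function standing in for a downstream service.
router.register("urn:example:billing", lambda body: body[0].get("amount"))
print(router.route(SAMPLE))  # 42
```

Hardware XML accelerators perform the same kind of inspection, parsing the envelope and acting on its headers, at wire speed.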

Ultimately, the Service Network and the ubiquitous deployment of web service protocols set the stage for a new breed of software. For the first time in the history of computing, a new distributed computing paradigm was agreed upon by all the major software vendors as well as by standards bodies. This set the stage for a new producer-consumer-registrar style of computing, known as “Software as a Service”.

Software as a Service

The “Software as a Service” model is a new way of partitioning applications. It enables best-of-breed components to be assembled based on well-known interfaces and contracts. Unlike in the previous era of Component Based Development (CBD), the contracts can state service level agreements: a service can guarantee that it will respond within a certain amount of time, or that it will remain “up” for a certain percentage of the year.
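The two kinds of guarantee above can be checked mechanically. The sketch below assumes an SLA made up of a per-request maximum response time and a yearly availability fraction. Real agreements are often stated as percentiles or over monthly windows instead. The numbers also show what “99.9% up” means in practice: (1 − 0.999) × 8,760 h ≈ 8.76 hours of allowed downtime per year.

```python
from dataclasses import dataclass
from typing import List, Sequence

HOURS_PER_YEAR = 365 * 24  # 8,760 hours; leap years ignored for simplicity


@dataclass(frozen=True)
class ServiceLevelAgreement:
    max_response_ms: float   # every response must arrive within this time
    min_availability: float  # fraction of the year the service must be up, e.g. 0.999

    def allowed_downtime_hours(self) -> float:
        return (1.0 - self.min_availability) * HOURS_PER_YEAR

    def violations(self, response_times_ms: Sequence[float],
                   downtime_hours: float) -> List[str]:
        """Return the broken clauses; an empty list means the SLA was met."""
        broken = []
        slow = [t for t in response_times_ms if t > self.max_response_ms]
        if slow:
            broken.append(f"{len(slow)} response(s) slower than {self.max_response_ms} ms")
        if downtime_hours > self.allowed_downtime_hours():
            broken.append(f"{downtime_hours} h down; limit is "
                          f"{self.allowed_downtime_hours():.2f} h")
        return broken


sla = ServiceLevelAgreement(max_response_ms=500, min_availability=0.999)
print(f"{sla.allowed_downtime_hours():.2f}")               # 8.76
print(sla.violations([120, 480], downtime_hours=5.0))      # []
print(sla.violations([120, 900], downtime_hours=10.0))     # both clauses broken
```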

These agreements, along with new standards for authenticating, provisioning, metering and billing users, will enable a “pay as you go” revenue model. This model entices independent software vendors (ISVs) because it creates long-term annuities and mitigates the problems surrounding last-minute deals struck at the end of fiscal quarters. Enterprise buyers are also enticed by the service level agreements and by the opportunity to commoditize certain pieces of software: the use of a standardized interface to access a service enables the enterprise buyer to shop for a low-cost provider.
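Metering plus a standardized interface is what makes provider shopping possible. The sketch below is built on assumptions. `CreditCheck` is a made-up interface that several providers are presumed to implement. The providers, their prices and the approval rule are placeholders. Charges are kept in integer cents so that billing is free of floating-point rounding.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable, Protocol


class CreditCheck(Protocol):
    """Hypothetical standardized interface offered by competing providers."""
    name: str
    cents_per_call: int

    def approve(self, customer_id: str) -> bool: ...


@dataclass
class Provider:
    name: str
    cents_per_call: int

    def approve(self, customer_id: str) -> bool:
        # Placeholder business rule; a real provider applies its own logic.
        return not customer_id.startswith("X")


@dataclass
class Meter:
    """Counts calls per provider so that usage can be billed."""
    calls: Dict[str, int] = field(default_factory=dict)

    def record(self, provider: str) -> None:
        self.calls[provider] = self.calls.get(provider, 0) + 1

    def invoice(self, providers: Iterable[CreditCheck]) -> Dict[str, int]:
        rates = {p.name: p.cents_per_call for p in providers}
        return {name: n * rates[name] for name, n in self.calls.items()}


def cheapest(providers: Iterable[CreditCheck]) -> CreditCheck:
    # Every provider implements the same interface, so price alone can decide.
    return min(providers, key=lambda p: p.cents_per_call)


providers = [Provider("alpha", 5), Provider("beta", 2)]
chosen = cheapest(providers)
meter = Meter()
for customer in ["C-1", "C-2", "X-3"]:
    chosen.approve(customer)
    meter.record(chosen.name)
print(chosen.name, meter.invoice(providers))  # beta {'beta': 6}
```

Switching providers here means changing one object, not rewriting the caller. That is the commoditizing effect described above.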

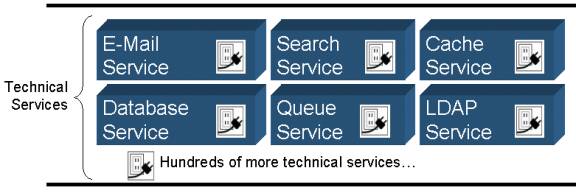

It is anticipated that the first wave of “Software as a Service” will come from technical service providers. These vendors will create web service front-ends to existing products and, in some cases, will begin to offer their products as hosted, managed offerings (through a Web Service Provider, or WSP).

Business Services

As the commercial technology software vendors retool their applications, we will begin to see the commercial enterprise application vendors adopt a similar strategy. The large ERP, SFA, CRM and supply chain vendors will all be forced down the path of offering their products as a set of services. Efforts will be made to redesign the applications around digitally described business processes, which will in turn be described as aggregations of services. At a micro level, the processes will incorporate calls to the Service Network, to the Technical Network and to a variety of services participating in the Business Network.

Enterprises hope to see a plug-and-play model of functionality. Unlike the current software model, which offers pre-bundled packages, the service model creates the opportunity for a more flexible approach to combining software. Vendors will likely react with “service frameworks” that, although they interoperate with competitors’ offerings, provide advantages when used in a single-vendor model.

The custom development that currently takes place inside large enterprises will likely evolve into more of a custom integration model. Here, “Service Integrators” will work with off-the-shelf vendors to weave together solutions. When pre-packaged solutions are not available, enterprises will lean on internal “Service Architects” and “Service Developers” to create new functionality that will be woven into their respective Grids. And, as with the Web, some individuals will specialize in applications inside the firewall (the Intragrid), while others will focus on externally facing applications (the Intergrid, or WWG).

The Intragrid

In most organizations, the majority of software applications are inward facing: they are used by the company’s employees rather than by customers, business partners and so on. The problem facing the Intragrid is making all of the internal applications speak to each other. In the past, we called this an “application integration” problem, and it was solved using a category of software known as “EAI”, or “Enterprise Application Integration”.

EAI solutions enabled heterogeneous applications to communicate by delivering a custom adapter for each application; thus, there were adapters for SAP, JDBC and so on. In the Intragrid, the effort shifts: instead of writing thousands of custom adapters, software applications (like SAP) are asked to expose themselves as web services. In essence, the burden of speaking a “ubiquitous tongue” (i.e., web services) is placed on the application, rather than on an interpreter (i.e., EAI). This shift is what some call “The Service Oriented Enterprise”.

The need for centralized application integration does not go away; rather, its form changes. Service oriented integration is often called “orchestration” or “choreography”. A new breed of integration tools facilitates the weaving together of services into chains that often mimic the business processes found in the organization.
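At its simplest, orchestration is an ordered chain of service calls in which each service’s output message becomes the next one’s input. The sketch below shows that idea with three hypothetical local functions standing in for remote services and an assumed credit rule. Process languages of the period, such as BPEL4WS, express the same kind of chain declaratively and add branching, compensation and long-running state.

```python
from typing import Any, Callable, Dict, List

Message = Dict[str, Any]
Service = Callable[[Message], Message]


# Three hypothetical services; in practice each would sit behind its own endpoint.
def check_credit(msg: Message) -> Message:
    if msg["amount"] > 10_000:  # assumed credit rule, for illustration only
        return {**msg, "status": "rejected", "reason": "credit limit exceeded"}
    return {**msg, "credit": "approved"}


def reserve_stock(msg: Message) -> Message:
    return {**msg, "reserved": True}


def issue_invoice(msg: Message) -> Message:
    return {**msg, "status": "invoiced", "invoice_id": f"INV-{msg['order_id']}"}


# The business process is just an ordered chain of service calls.
ORDER_TO_CASH: List[Service] = [check_credit, reserve_stock, issue_invoice]


def orchestrate(steps: List[Service], msg: Message) -> Message:
    """Pass each service's output message to the next; stop on rejection."""
    for step in steps:
        msg = step(msg)
        if msg.get("status") == "rejected":
            break
    return msg


print(orchestrate(ORDER_TO_CASH, {"order_id": 7, "amount": 250})["status"])     # invoiced
print(orchestrate(ORDER_TO_CASH, {"order_id": 8, "amount": 50_000})["status"])  # rejected
```

Because the process is data (a list of services), it can be reordered or extended without touching the services themselves.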

The Intragrid will also find itself dependent on services provided over the Intergrid. Software as a Service will enable a fine-grained approach to delivering software to corporate customers using a variety of payment models. The Intragrid will likely become very dependent on resources that are hosted outside the company’s walls but are managed under service level agreements.

Employees will likely continue to use applications provided in an ASP (Application Service Provider) model. However, with the advent of the Intergrid, customers will demand access to their data and business rules via secured web service technologies. In addition, more emphasis will likely be placed on making ASPs communicate among themselves using the same technology.

The end result will be shortened integration cycles. However, it should be noted that the sheer amount of new functionality that can be woven together will be substantial. Organizations may find themselves tempted to create very large, highly interdependent service oriented applications merely because they can, rather than because of actual need.

The Intergrid (The World Wide Grid)

Although the final shape of the Grid is still unknown, we can draw parallels with the Web to forecast its future.

The Super UBR

First, a robust mechanism for finding services will be needed. The initial incarnation of a service directory has already been rolled out in the form of the UBR (UDDI Business Registry). We can expect additional discovery mechanisms, as well as new facilities for crawling the Grid and sophisticated search engines.
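The core job of such a directory is matching a requester’s criteria against published entries. The sketch below is an in-memory stand-in, not the UDDI API. It borrows one UDDI idea: services are tagged with the interface contract they implement, which UDDI models as a tModel. That lets a caller find every provider of the same interface. All businesses, categories and URLs are made up.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class ServiceEntry:
    business: str       # publishing company
    name: str           # service name
    category: str       # classification code (made-up values below)
    interface: str      # ID of the interface contract (a tModel in UDDI terms)
    access_point: str   # endpoint where the service is called


class Registry:
    """In-memory stand-in for a UDDI-style registry; not the UDDI API itself."""

    def __init__(self) -> None:
        self._entries: List[ServiceEntry] = []

    def publish(self, entry: ServiceEntry) -> None:
        self._entries.append(entry)

    def find(self, category: Optional[str] = None,
             interface: Optional[str] = None) -> List[ServiceEntry]:
        """Return entries matching every criterion that was supplied."""
        return [e for e in self._entries
                if (category is None or e.category == category)
                and (interface is None or e.interface == interface)]


registry = Registry()
registry.publish(ServiceEntry("Acme", "FastCredit", "finance/credit",
                              "urn:example:CreditCheck-v1", "https://acme.example.com/credit"))
registry.publish(ServiceEntry("Globex", "CreditNow", "finance/credit",
                              "urn:example:CreditCheck-v1", "https://globex.example.com/cc"))
registry.publish(ServiceEntry("Initech", "ShipIt", "logistics/shipping",
                              "urn:example:Shipping-v2", "https://initech.example.com/ship"))
print([e.business for e in registry.find(interface="urn:example:CreditCheck-v1")])
# ['Acme', 'Globex']
```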

The WSP

The Web brought about the concept of using a third party to host and/or manage software on your behalf (ISP, ASP and MSP). I anticipate that the Grid will create the WSP (Web Service Provider), a hybrid of the ASP and the MSP. A WSP will host managed web services and make additional infrastructure available to them, including security, resilience, scalability, billing, metering and caching.
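One way to picture a WSP is as a wrapper that adds infrastructure around a service author’s business logic. The sketch below is hypothetical. The `hosted` decorator is not from any product, and it adds only two of the listed facilities, metering (every request counted for billing) and caching. The shipping prices are placeholders. Caching like this is safe only for services whose results depend solely on their arguments.

```python
import functools
from collections import Counter
from typing import Callable, List


def hosted(meter: Counter, cache_size: int = 128) -> Callable:
    """Hypothetical WSP wrapper: meters every request and caches results,
    so the service author writes only the business logic."""
    def wrap(service: Callable) -> Callable:
        cached = functools.lru_cache(maxsize=cache_size)(service)

        @functools.wraps(service)
        def call(*args):
            meter[service.__name__] += 1  # billed per request, cached or not
            return cached(*args)

        call.cache_info = cached.cache_info  # expose cache statistics
        return call
    return wrap


meter: Counter = Counter()
backend_calls: List[str] = []


@hosted(meter)
def shipping_quote(zone: str) -> int:
    backend_calls.append(zone)  # records real executions of the service body
    return {"domestic": 12, "international": 45}.get(zone, 99)  # placeholder prices


shipping_quote("domestic")
shipping_quote("domestic")
print(meter["shipping_quote"], len(backend_calls))  # 2 1
```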

Service Marketplace

As with the Web, we will likely see focused marketplaces that sell services. These markets will be able to offer “try-before-you-buy” options easily, thanks to the decoupled nature of Software as a Service. Snap-together software, along with pay-as-you-go pricing models, will likely increase the need for service supermarkets.

Personal Services

Although the Grid is mostly focused on inter-application and inter-business requirements, consumers will also find it valuable. I anticipate that consumer-friendly orchestration tools will allow home users to create scripts that make their lives easier, reminiscent of Microsoft’s earlier HailStorm effort (AKA, .NET My Services).

Virtual Private Service Networks

The Grid will face significant security threats. I anticipate that the initial business-to-business incarnations of the Grid will run on private networks. As the WS-Security stack gets ironed out, more companies will begin to create “Virtual Private Service Networks”, which will facilitate dynamic participation.
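“Dynamic participation” means that members can be admitted or removed while the network runs, and that messages from non-members are rejected. The sketch below models that idea under a loud assumption: an HMAC-SHA256 over the message body with a per-partner shared key stands in for WS-Security. WS-Security itself uses XML Signature, canonicalization and security tokens such as X.509 certificates. `PrivateServiceNetwork` and the demo key are hypothetical.

```python
import hashlib
import hmac
from typing import Dict


def sign(body: bytes, key: bytes) -> str:
    """HMAC-SHA256 over the message body (a stand-in for WS-Security signatures)."""
    return hmac.new(key, body, hashlib.sha256).hexdigest()


class PrivateServiceNetwork:
    """Hypothetical VPSN: only admitted partners, each holding a shared key,
    can get messages accepted. Membership can change at run time."""

    def __init__(self) -> None:
        self._keys: Dict[str, bytes] = {}

    def admit(self, partner: str, key: bytes) -> None:
        self._keys[partner] = key

    def revoke(self, partner: str) -> None:
        self._keys.pop(partner, None)

    def accept(self, partner: str, body: bytes, signature: str) -> bool:
        key = self._keys.get(partner)
        if key is None:
            return False  # not (or no longer) a member of this network
        # compare_digest avoids leaking information through timing differences.
        return hmac.compare_digest(sign(body, key), signature)


vpsn = PrivateServiceNetwork()
vpsn.admit("acme", b"demo-shared-secret")  # placeholder key; never hard-code real keys
body = b"<Order id='1001'/>"
sig = sign(body, b"demo-shared-secret")
print(vpsn.accept("acme", body, sig))                   # True
print(vpsn.accept("acme", b"<Order id='9999'/>", sig))  # False (tampered body)
vpsn.revoke("acme")
print(vpsn.accept("acme", body, sig))                   # False (membership revoked)
```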

Grid Attributes

Several factors are coming together to enable a next-generation network.

Protocol Enabled

At the heart of the Grid is the use of standardized protocols. These protocols address many of the concerns that have faced distributed programmers and reduce them to a commodity.

Ubiquitously Deployed

The protocols (and non-protocol standards) are of little value unless every participant in a conversation understands the ones it needs. The primary Grid protocols must be ubiquitously deployed, and many other enhancements will also need to find their way into computing devices.

Extensible Design

After a protocol stack has been rolled out to millions of computing devices, it becomes a challenge to roll out new extensions. Thus, the initial design must be extensible and must facilitate adding features over time. At first glance, the Grid appears to have done this successfully through the extensible designs of SOAP, WSDL and WS-Policy.
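SOAP’s main extensibility hook is the header block. A receiver processes the headers it understands and may ignore unknown ones, unless the sender marks a header `mustUnderstand="1"`, in which case the receiver must fault. New features can therefore be added without breaking old receivers. The sketch below follows SOAP 1.1 semantics but simplifies them: it ignores the `actor` attribute, which targets headers at specific intermediaries, and raises an exception instead of returning a fault message. The example header namespaces are hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import List, Set

SOAP_ENV = "http://schemas.xmlsoap.org/soap/envelope/"
MUST_UNDERSTAND = f"{{{SOAP_ENV}}}mustUnderstand"


class MustUnderstandFault(Exception):
    """Raised where a SOAP 1.1 node would return a MustUnderstand fault."""


def process_headers(envelope_xml: str, understood: Set[str]) -> List[str]:
    """Return the header blocks this node handles. Unknown optional blocks are
    skipped; an unknown block marked mustUnderstand="1" stops processing."""
    env = ET.fromstring(envelope_xml)
    header = env.find(f"{{{SOAP_ENV}}}Header")
    handled: List[str] = []
    if header is None:
        return handled
    for block in header:
        if block.tag in understood:
            handled.append(block.tag)
        elif block.get(MUST_UNDERSTAND) == "1":  # SOAP 1.1 uses "1" and "0"
            raise MustUnderstandFault(block.tag)
        # Otherwise: an optional extension this node does not know; ignore it.
    return handled


MSG = (
    f'<s:Envelope xmlns:s="{SOAP_ENV}">'
    '<s:Header>'
    '<t:Transaction xmlns:t="urn:example:tx" s:mustUnderstand="1">42</t:Transaction>'
    '<d:Trace xmlns:d="urn:example:debug" s:mustUnderstand="0">on</d:Trace>'
    '</s:Header><s:Body/></s:Envelope>'
)

print(process_headers(MSG, {"{urn:example:tx}Transaction"}))
# ['{urn:example:tx}Transaction']  (the optional Trace block is skipped)
```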

Open-Enough

Standards bodies govern the creation of the specifications that form the basis of the Grid, and a democratic process is used to enhance those specifications. However, many will note that the strong influence exerted by Microsoft and IBM has produced a set of “open enough” standards.

Self-Forming Networks

Like the Web, the Grid is a self-forming network: anyone who agrees to the protocols of the Grid can create services and become a participant. I believe this organic growth model is essential to its survival.

Self-Describing Structured Documents

One of the primary attributes of the Grid, and one that is core to it, is the use of XML and the ability to create metadata structures that describe information.

Service Oriented, Message Based and Process Driven

The Grid is designed as a set of services that pass messages to each other according to business processes. This combination of concepts is a significant advancement over previous, tightly coupled efforts.

Adoption

It is difficult to predict the adoption rate of the Grid. Although it will offer significant value, it is a complex structure that will likely require several unanticipated enhancements before its value becomes compelling. However, like all networks, it is likely to be subject to a modified version of Metcalfe’s Law. The original law states that the value of a network is proportional to the square of the number of people using it. In the Grid, however, the users are often software systems.
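The quadratic growth comes from counting possible pairwise connections. In the worked form below, n is the number of participating systems and services rather than people. This is the usual textbook reading of Metcalfe’s Law, not a measured property of the Grid.

```latex
L(n) = \binom{n}{2} = \frac{n(n-1)}{2}, \qquad V(n) \propto n^{2}

L(10) = 45, \qquad L(100) = 4950, \qquad \frac{L(100)}{L(10)} = 110
```

A tenfold increase in participating services therefore yields roughly a hundredfold (here exactly 110-fold) increase in possible connections.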

The value of the Grid will increase as more software is turned into services. Initial adoption challenges will stem from the lack of available services. Once more services become available, the obstacle will likely become locating the best service provider (rather than building or exposing the service).

It is also apparent that major software vendors will push their customer bases to adopt this model. Vendors like Microsoft have already made major modifications to software such as Microsoft Office to make it Grid-ready. End-user demand will likely generate enough interest to make corporate CIOs take notice and begin rolling out Intergrid and Intragrid strategies.

It is premature to forecast adoption rates. However, based on historical deployment rates, I will attempt to forecast the period of focus for each Grid component:

- 2000 – 2002: Focus is on Phase 1 web services
- 2003 – 2004: Focus is on Phase 2 web services
- 2004 – 2005: Focus is on the enterprise creating a Service Network
- 2004 – 2007: Focus is on ISVs redesigning to facilitate “Software as a Service”
- 2005 – 2010: Focus is on enterprises adopting Technical and Business Services

Although these dates may seem aggressive, I feel they are realistic based on a single premise: Microsoft and IBM have been working in an unparalleled, concentrated effort to create the Grid infrastructure in order to give their customer bases a compelling reason to spend money. The successful creation of the Grid will give enterprise customers a compelling reason to revamp their software to make it more agile, cost-competitive and strategically aligned with organizational objectives.

Note: The adoption rate depends largely on the universal, or ubiquitous, deployment of the protocols. The timelines may be advanced if groups like Microsoft, IBM and the leading Linux vendors choose to ship their operating systems with the Grid stack built in.

Future Challenges

The Grid is not without its challenges. The creation of an information grid that spans the globe, crosses businesses and industries, and also changes the basic programming model will face hurdles at every turn.

- The first item worth noting is that the Grid is complicated. Unlike the Web, which has a small handful of protocols, the Grid is composed of scores of interdependent protocols. Many of these are new to the world and have not been time-tested. Also worth noting is that these standards are being designed up front, rather than through a more “organic” method of creating standards on an as-needed basis.

- The Grid also promotes a design concept known as “loose coupling”. Loose coupling is a philosophy of building items that do not have heavy dependencies on each other. Loosely coupled systems usually gain agility at the expense of performance, yet many software developers today tend to put performance ahead of agility. It is likely that initial “Software as a Service” designs will be inflexible due to a lack of experienced service architects and best practices.

- The Grid will require a new breed of infrastructure. Unfortunately, venture capital investors have been gun-shy since the dot-com bubble burst. Additionally, many software companies have been facing the worst slump in recent history. IT spending has plummeted, leaving very little money for ISVs to spend on research and development. This has left the fate of the industry primarily in the hands of two cash-healthy companies, Microsoft and IBM.

- Many ISVs see the IBM and Microsoft effort as a form of “Web Service Duopoly Domination”. Their ability to drive standards and ubiquitous deployments may lead to a standardized Grid, but one that lacks innovation.

- The business and revenue models associated with Software as a Service have not been ironed out. It is likely that this new paradigm will require several iterations before a healthy balance between provider and buyer is reached.

Notes

1. I use the terms “Grid”, “World Wide Grid”, “InterGrid” and “IntraGrid” as placeholders for concepts that have not yet reached formal or informal consensus. These terms are used to convey that the software participants that choose to communicate via the web service protocols can be viewed as a network, and thus should be considered networking technologies.

- The term “Grid” should not be confused with Globus or other efforts that aim to create a high-performance grid of computers for scientific applications. These efforts typically treat massively parallel computing as the top priority and distributed computing ubiquity as a lower priority. The Grid, as I define it, places distributed computing ubiquity at the forefront. At some point, I anticipate that Globus and the World Wide Grid will overlap significantly.

- The Grid concept should not be confused with IBM’s “On Demand” effort. That program is designed to automatically provision computing resources (disk, memory, CPU and network) on a priority basis, enabling more efficient use of computing resources, including the smoothing of peak-period spikes. It should be noted that the partitioning that occurs in service oriented computing enables more fine-grained provisioning of applications, with the potential for further efficiency gains.

2. WS-Routing was intentionally left out of the set. At the time of this writing, IBM is not backing the standard, and it is unclear whether it will become a core member of the protocol set. Many argue that a priori identification of intermediaries is unnecessary and harmful.

- The protocol bundle includes many other members, such as WS-PolicyAttachment, WS-PolicyAssertions, WS-Trust and WS-SecureConversation. These specifications were omitted purely for the sake of brevity.

- Although the diagram depicts a layered protocol stack, the relationships between the protocols are not strictly layered. In most cases the relationship is hierarchical, or enveloping, in nature.

About the Author

Jeff Schneider is the CEO of Momentum Software Inc., a consultancy specializing in the Grid and related technologies.