WS-Mex is now published at the Microsoft site.

The spec is 975 lines long, but I can summarize it for you.

One verb: [get] and three nouns: [schema, wsdl, policy]

Naturally, this took 6 type definitions, 11 messages and 3 operations.

Suddenly, ?WSDL, ?POLICY and ?SCHEMA don't look so bad...

They hardcoded three types of metadata into the spec - isn't there something IRONIC about that???
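The irony can be made concrete with a small sketch. This is not the WS-MetadataExchange message format: the `MetadataEndpoint` class, its `register`/`get`/`dialects` methods and the dialect strings are all hypothetical. It only shows the alternative shape, where a single `get` verb is keyed by an open-ended metadata kind, so schema, WSDL and policy are data rather than something baked into the spec.

```python
from typing import Callable, Dict, List


class MetadataEndpoint:
    """One verb (get) over an open-ended set of metadata kinds.

    Hypothetical sketch: kinds are registered at run time instead of
    being hardcoded into the protocol.
    """

    def __init__(self) -> None:
        self._providers: Dict[str, Callable[[], str]] = {}

    def register(self, dialect: str, provider: Callable[[], str]) -> None:
        self._providers[dialect] = provider

    def get(self, dialect: str) -> str:
        try:
            return self._providers[dialect]()
        except KeyError:
            raise LookupError(f"no metadata of kind {dialect!r}") from None

    def dialects(self) -> List[str]:
        return sorted(self._providers)


endpoint = MetadataEndpoint()
endpoint.register("wsdl", lambda: "<definitions/>")
endpoint.register("schema", lambda: "<schema/>")
endpoint.register("policy", lambda: "<Policy/>")
```

In this shape, a fourth kind of metadata is one more `register` call on the same verb.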

Oh, the editors are:

Francisco Curbera (Editor), IBM

Jeffrey Schlimmer (Editor), Microsoft

Delivering Business Services through modern practices and technologies. -- Cloud, DevOps and As-a-Service.

Tuesday, March 30, 2004

Wednesday, March 24, 2004

Verb Only (when the verb acts the same on all subjects, e.g., save(x), save(y))

Verb Noun (when the verb acts differently, with different logic, on each subject, e.g., processPurchaseOrder(), processSalesCommission())

Qualified Verb (e.g., BidirectionallyLink(x,y) versus link(x,y)): used to qualify the intent of the verb; unlike most adverbs, which only apply to NFRs (non-functional requirements), the qualifier changes the functional requirement

Verb Mechanism (when the logic is changed more by the mechanism than by the subject)
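To make the four patterns concrete, here is a hypothetical Python sketch. The function names are snake_case renderings of the examples above, and the business rules inside the Verb Noun functions and the string returned by the Verb Mechanism functions are invented placeholders, not real logic.

```python
from collections import defaultdict


# Verb Only: the same logic applies to every subject.
def save(store: list, subject) -> None:
    store.append(subject)


# Verb Noun: each subject needs its own logic, so the noun goes in the name.
# (Placeholder rules, for illustration only.)
def process_purchase_order(order: dict) -> float:
    return order["quantity"] * order["unit_price"]


def process_sales_commission(sale: dict) -> float:
    return sale["amount"] * sale["rate"]


# Qualified Verb: the qualifier changes the functional behavior.
def link(graph: dict, x: str, y: str) -> None:
    graph[x].add(y)


def bidirectionally_link(graph: dict, x: str, y: str) -> None:
    link(graph, x, y)
    link(graph, y, x)


# Verb Mechanism: same verb, different mechanism. These return a
# description instead of actually sending anything.
def send_email(to: str, payload: str) -> str:
    return f"email to {to}: {payload}"


def send_fax(to: str, payload: str) -> str:
    return f"fax to {to}: {payload}"


graph = defaultdict(set)
link(graph, "a", "b")                   # a -> b only
bidirectionally_link(graph, "c", "d")   # c -> d and d -> c
```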

Verb Mechanism

Many of the web services we create take the form of "VerbMechanism"; that is, they specify a verb and the mechanism by which to accomplish the task, and they usually require the 'who' and the 'what' to be passed in:

sendEmail('to bob', 'the status report')

sendFax( 'to bob', 'the status report')

sendInstantMessage( 'to bob', 'the status report')

Here, the mechanisms are 'Email', 'Fax' and 'InstantMessage'.

Another way of writing this is by using 'via':

"send the status report to bob via email"

When we specify a mechanism, we will likely have preconditions on it. "Via email" requires four pieces of information: email server, email credentials, email destination and email payload. These data-related preconditions can be expressed declaratively on each mechanism. Currently, we push these data nuggets into the signature of the VerbMechanism. But the requirements are usually split between the verb and the mechanism: it was 'send' that required two of the pieces of information (destination, payload), while the mechanism required the other two (server, credentials).

A mechanism is a way to overload an operation. The verb I'm overloading is 'send' and the mechanism is 'Email'. When we overload in object-oriented systems, we usually just grow the signature: send(destination, payload); send(server, credentials, destination, payload). I'm not a fan of this... I prefer a declarative, OCL-like approach, but with an 'implied signature' derived from overloading the verb with a mechanism.
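The 'implied signature' idea can be sketched as follows, assuming each verb and each mechanism declares the data it requires and the operation's parameters are simply the union of the two. `VERB_REQUIRES`, `MECHANISM_REQUIRES`, `implied_signature` and `invoke` are hypothetical names; the 'fax' entry and its 'gateway' requirement are invented for illustration; and plain set membership stands in for a real OCL-style precondition language.

```python
# Hypothetical declarative preconditions: what the verb needs, and what
# each mechanism adds on top. "fax"/"gateway" are illustrative only.
VERB_REQUIRES = {"send": frozenset({"destination", "payload"})}
MECHANISM_REQUIRES = {
    "email": frozenset({"server", "credentials"}),
    "fax": frozenset({"gateway"}),
}


def implied_signature(verb: str, mechanism: str) -> frozenset:
    """Parameters of 'verb via mechanism': the verb's plus the mechanism's."""
    return VERB_REQUIRES[verb] | MECHANISM_REQUIRES[mechanism]


def invoke(verb: str, via: str, **context) -> dict:
    """Check the declared preconditions, then bind exactly the implied parameters."""
    required = implied_signature(verb, via)
    missing = required - set(context)
    if missing:
        raise ValueError(f"{verb} via {via} is missing {sorted(missing)}")
    # A real system would dispatch to a handler here; this sketch returns
    # the bound arguments so the implied signature is visible.
    return {name: context[name] for name in sorted(required)}


bound = invoke("send", via="email", destination="bob", payload="status report",
               server="smtp.example.com", credentials="secret")
```

The caller only ever sees `send` plus a mechanism; the signature grows from the declarations instead of from a new overload.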

Another issue to consider is the 'invisible mechanism' or 'implicit mechanism'. Consider:

savePurchaseOrder(...);

Here, the designer intentionally hides the implementation mechanism from the user of the operation.

Save Purchase Order via Relational Database

Save Purchase Order via Flat File

Now, I agree that it is good practice not to burden the user of a service with the mechanism. However, I'm not sure that it is always a good idea to hide it from them (burden them versus inform them).

Again we see that each mechanism will require a set of preconditions:

"Via Relational Database" needs a JDBC driver, a connection string, credentials, etc.

I'll save "Reliably Save the Purchase Order via Relational Database" for another morning.
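Leaving reliability aside, here is a minimal sketch of the implicit-mechanism trade-off, assuming Python's built-in `sqlite3` stands in for the relational database (a `connection` object plays the role of the JDBC driver, connection string and credentials) and a CSV stream stands in for the flat file. `save_purchase_order`, its `via` parameter and the mechanism table are hypothetical names. The mechanism is hidden by default, but a caller who wants to be informed rather than burdened can name it.

```python
import csv
import io
import sqlite3


def _save_via_relational_database(order: dict, connection: sqlite3.Connection) -> None:
    connection.execute(
        "CREATE TABLE IF NOT EXISTS purchase_order (id TEXT PRIMARY KEY, amount REAL)"
    )
    connection.execute(
        "INSERT INTO purchase_order (id, amount) VALUES (?, ?)",
        (order["id"], order["amount"]),
    )
    connection.commit()


def _save_via_flat_file(order: dict, stream: io.TextIOBase) -> None:
    csv.writer(stream).writerow([order["id"], order["amount"]])


# Each mechanism declares the resource it requires (its precondition).
_MECHANISMS = {
    "relational_database": (_save_via_relational_database, "connection"),
    "flat_file": (_save_via_flat_file, "stream"),
}


def save_purchase_order(order: dict, via: str = "relational_database", **resources) -> str:
    """Hide the mechanism by default, but let callers name it explicitly."""
    handler, required = _MECHANISMS[via]
    if required not in resources:
        raise ValueError(f"'via {via}' requires a {required!r} resource")
    handler(order, resources[required])
    return via
```

Hiding the mechanism does not remove its precondition: even with the default `via`, someone still has to supply the connection.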

Saturday, March 20, 2004

The Service Network

Today, there are largely three camps when it comes to web service architectures: The SOA guys, The Bus guys and The Protocol Network guys.

The SOA guys

The SOA guys were the original 'web service' people. They viewed the new paradigm as a ubiquitous, service-based system that would use the architectural pattern known as SOA (Producer, Consumer and Directory). The SOA guys are the people who build UDDI servers and SOAP platforms. They have taken the best of the CORBA world, learned some lessons and reapplied them to a new set of protocols that are accepted by MS and IBM. The SOA guys rarely mention a 'network topology', but their implicit topology is point-to-point (my consumer directly calls your producer).

The Bus guys

The Bus guys are the people who believe in messaging. Ultimately, messages and services go hand in hand. Some people put more emphasis on the service (SOA guys), while others put more emphasis on the message (Bus guys). The Bus guys also love asynchronous communication; thus, they love queues or any other store-and-forward mechanism. Unfortunately, most of the Bus guys come from the JMS world, and largely their stuff doesn't interoperate (think ESB). The Bus people usually think that the web services network topology is hub and spoke.

The Protocol Network guys

The Protocol Network guys are the people who treat web services more like networking protocols. These guys put emphasis on two things: wire protocols and policies. They see everything as a policy on a protocol. These guys love specs like WS-Addressing, WS-Discovery and WS-Policy. The Protocol Network guys see the network topology as adaptive, based on the state of the network. They rely on routers, load balancers and other devices that know about the running services to make informed decisions on the fly.

The reality is that most large companies will need a hybrid of all three. They will embrace the standard SOA triangular pattern, but let a Protocol Network make routing decisions at run time, with the messages often ending up in a queue. I continue to advise my clients on the convergence of the three paradigms. This convergence is what I call the Service Network.

The Service Network is a message-based, service-based and protocol-based computing model.

- It leverages the SOA model to decouple producers and consumers and to provide lookup capabilities for self-describing services.

- It leverages the Bus model to provide asynchronous communications for long-running processes.

- It leverages the Protocol Network to provide runtime decision making about locating and executing a service on the network based on the service network conditions.

Another way of looking at these models is:

- SOA decouples software units (consumer and producer)

- Bus decouples software in time (synchronous = time-coupling)

- Protocol Network decouples software from hardware (run a service on some machine)

The goal of the service network is to provide all three forms of decoupling.
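The three forms of decoupling can be sketched in one toy model, assuming an in-memory dictionary plays the directory, a `deque` plays the store-and-forward queue, and a per-endpoint `load` counter stands in for whatever runtime conditions a real router would observe. `ServiceNetwork`, `Endpoint` and their methods are hypothetical names; this is an illustration of the idea, not a product design.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, Dict, List, Tuple


@dataclass
class Endpoint:
    address: str
    load: int = 0  # stand-in for the runtime conditions a router observes


@dataclass
class ServiceNetwork:
    # SOA: a directory decouples consumers from specific producers.
    directory: Dict[str, List[Endpoint]] = field(default_factory=dict)
    # Bus: a store-and-forward queue decouples sender and receiver in time.
    queue: Deque[Tuple[str, str]] = field(default_factory=deque)

    def publish(self, service: str, endpoint: Endpoint) -> None:
        self.directory.setdefault(service, []).append(endpoint)

    def send(self, service: str, message: str) -> None:
        """Consumer side: enqueue and return without waiting for a producer."""
        self.queue.append((service, message))

    def route(self, service: str) -> Endpoint:
        """Protocol Network: pick a producer at run time, here the least loaded."""
        return min(self.directory[service], key=lambda e: e.load)

    def deliver_all(self) -> List[Tuple[str, str]]:
        delivered = []
        while self.queue:
            service, message = self.queue.popleft()
            endpoint = self.route(service)
            endpoint.load += 1
            delivered.append((endpoint.address, message))
        return delivered


net = ServiceNetwork()
net.publish("quote", Endpoint("host-a"))
net.publish("quote", Endpoint("host-b"))
net.send("quote", "IBM")    # the consumer does not wait for a producer
net.send("quote", "MSFT")
delivered = net.deliver_all()
```

Because routing happens at delivery time, the second message goes to `host-b` once `host-a` has picked up load.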

WhiteHorse and Virtualization (take 2)

Alex Torone, the Lead Program Manager for the Microsoft Visual Studio Enterprise Tools Team, gave me a gentle kick in the balls regarding my inaccurate posting about WhiteHorse. Here are his clarifications:

What the diagram really represents:

The LSAD (Logical Systems Architecture Diagram) represents "logical run time hosting environments" (hence the name). Each box represents a "logical server type". Specifically, the large blue boxes represent a configuration of IIS, whereas the endpoints on the large blue boxes represent web sites. We model the entire IIS metabase (in this example). So a user could either supply the "desired configuration" in the tool, or simply point to a "canonical server" that has the "desired configuration" and harvest those settings. Once the settings have been defined in the LSAD model, the user can then define constraints against the application environment. For example, suppose my datacenter policy for "front end web servers" requires web apps to use forms authentication and impersonation. These constraints will be validated against the application designer (we model all of system.web, for example) and can be expressed in this logical design. There are also two additional layers in the SDM model (part of DSI; refer to the links below), which represent the network layer and the device layer; these are more in line with your comments and are slated for a much later tools and platform release.

About the Physical DataCenter:

Data centers host many types of applications. Network infrastructure diagrams (we've all seen them) have physical machines, IP addresses, VLANs, switches, routers, etc. The LSAD is meant to represent abstractions over the physical data center. We want to represent types of servers, not physical servers. One box on the LSAD does not necessarily equate to a physical server in the data center. In fact, you can create multiple logical web servers and place them on one physical server with SQL, as an example. When we get to actual deployment releases post Whidbey (please refer to the Dynamic Systems Initiative (DSI) links below), we will then provide a logical-to-physical mapping. This action will populate all of the deployment parameters with the physical URLs of the web server, etc.

In conclusion, the LSAD is about conveying that information which is important to the developer (such as what kinds of services are available to me, what communications pathways are open, what configuration must I adhere to, what are the boundary conditions that I must be aware of, etc) such that we will increase the probability that their design will actually work when it is physically deployed.
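Alex's two ideas, design-time constraints on a logical server type and a later logical-to-physical mapping, can be illustrated with a toy model. This is not the WhiteHorse or SDM API: `LogicalServer`, `violations`, `bind` and every name and setting below are hypothetical. The constraint keys only loosely mirror ASP.NET's `<authentication mode="Forms">` and `<identity impersonate="true">` settings in system.web.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class LogicalServer:
    """A logical server *type*, not a physical machine (hypothetical model)."""
    name: str
    constraints: Dict[str, object] = field(default_factory=dict)


def violations(app_settings: Dict[str, object], server: LogicalServer) -> List[str]:
    """Design time: list the server's constraints that the app's settings break."""
    return [
        f"{key}: expected {expected!r}, found {app_settings.get(key)!r}"
        for key, expected in server.constraints.items()
        if app_settings.get(key) != expected
    ]


def bind(placements: Dict[str, str], endpoints: Dict[str, Tuple[str, str]]) -> Dict[str, str]:
    """Deployment time: resolve each logical endpoint to a physical URL.

    placements maps logical server -> physical host;
    endpoints maps endpoint name -> (logical server, path).
    """
    return {
        name: f"http://{placements[server]}/{path}"
        for name, (server, path) in endpoints.items()
    }


front_end = LogicalServer("FrontEndWeb", {"authentication_mode": "Forms", "impersonate": True})
app = {"authentication_mode": "Windows", "impersonate": True}
problems = violations(app, front_end)

# Two logical web servers placed on the same physical machine.
placements = {"FrontEndWeb": "srv01", "ReportingWeb": "srv01"}
urls = bind(placements, {"OrdersSite": ("FrontEndWeb", "orders"),
                         "ReportsSite": ("ReportingWeb", "reports")})
```

The application is checked against server *types* before anything is deployed; physical hosts only enter the picture in `bind`.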

The more I dig into the DSI, the more impressed I am. If they can pull it off with design-time integration it will be one heck of a story. Here are some links Alex provided me to reduce my ignorance:

http://www.microsoft.com/windowsserversystem/dsi/default.mspx

http://msdn.microsoft.com/vstudio/productinfo/enterprise/enterpriseroadmap/default.aspx?pull=/library/en-us/dnvsent/html/vsent_soadover.asp

http://msdn.microsoft.com/vstudio/productinfo/enterprise/enterpriseroadmap/whitehorsefaq.aspx

http://microsoft.sitestream.com/PDC2003/TLS/TLS345_files/Default.htm

http://msdn.microsoft.com/msdntv/

Wednesday, March 17, 2004

Book Recommendations

It is tough to find good books on subjects like web services. The people who write on these subjects are usually writing about either the current state (which is outdated by the time you buy the book) or a future state (which is usually wrong). Generally speaking, I don't buy books on web services; however, I do buy books on areas of convergence. That said, here are my recommendations:

1. Policy Based Network Management - In this book, John Strassner, a Fellow at Cisco and a thought leader in DEN (Directory Enabled Networks), writes on the topic of the declarative network. The book covers basic policy models and then explores the DEN-ng policy model as an example. The book never mentions SOAP, web services or anything at the application level; it is up to the reader to draw analogies between network policies and application policies. Those familiar with the WS-Policy specs will find significant overlap between WS-Policy and PBNM (Policy Based Network Management). This book should help you understand the importance of declarative policies and their uses in application-level service networks.

2. Grid Computing - Written by Joshy Joseph and Craig Fellenstein, this book is part of the IBM 'On Demand Series'. It covers the basics of grids, the merging of grids and web services, OGSA, OGSI and the programming model for the Globus GT3 Toolkit. This is a good book for anyone who needs a crash course in grid services. It is a high-level read, not a reference manual.

Monday, March 15, 2004

Why the Outsourcing Flap Makes Cents

Rich Miller, who runs my favorite blog, made a posting near to my heart titled "Why the Outsourcing Flap Makes No Sense", in which he asks what the flap is all about.

The flap is about...well, pissed off people that lost their jobs.

I spoke with two different people today who are old friends. They both worked (past tense) at Sabre. The first was a VP of engineering who was laid off when his job was moved to Poland. This guy was a real "do-er" - the kind of guy who made things happen; he'd cut through the BS and get things done. Does he cost more than someone in Poland? Yes. Sucks to be him. He's now remodeling his kitchen and sending resumes out on Monster.com. He gets it: lower wages for Sabre lead to a more competitive product, higher share prices for shareholders and lower prices for consumers. He completely gets it - but he's still pissed off.

The second person I talked with (a project manager) told me that she was told that she had to let her whole team go because they were to be replaced with people in India. She told her manager that she didn't like the idea but went along. Eventually she was told that her job was moving offshore as well but they gave her the option of being manager of 'offshore procurement'. She did it. Then when the procurement was done she was let go. Now she is reading books on how to day-trade. And yes, she is pissed off.

The flap is about people that are great at their job who get let go based purely on cost. They weren't given the opportunity to accept a lower salary - after all, their employer has a mandate to move 25% of product engineering jobs offshore. The flap is about humans who felt disgraced by long-time employers.

Maybe the U.S. installed a minimum wage system too early. Maybe we drove up our own cost of living and that drove up our salaries. It is our own fault. I know - yelling about free markets won't change anything. But if my friends at Sabre and all the other companies that have cut U.S. workers want to bitch - I understand. Flap all you want - because that is all you are going to get.

Objects, Services and Verbs

A few years ago, I asked my mentor what the difference was between objects and services. He told me a handful of things, but the one that I really remember was, "objects use a 'noun.verb' notation and services use a 'verb.noun' notation." I asked him why this was a big deal and he told me that in most systems there are fewer verbs than nouns. His point was that you would end up with fewer first-class citizens in a service-oriented world than in an object-oriented world. Very interesting. He later pointed out that the service citizen would likely treat data as metadata, making it much more manageable than an object that tries to use polymorphic behavior to apply verb functionality to nouns.

I've become fascinated with service/operation naming. As an example, the WSDL spec uses "GetLastTradePrice". Immediately I break it down: "Get" "Price"... what kind of price? "Trade"... which one? "Last"... or perhaps it is "Trade.Price"?... Hmmm... How about:

Trade.Price.get().last();

Object, attribute, getter, ordered set operation: an interesting way to break it down. Now, why did the service people run the whole thing together (GetLastTradePrice)? Good question. Don't I end up with a ton of operations if I run them all together? What if... I didn't run combinations together, but instead identified the command components: (getter/setter) x (an object's enumerated attributes) x (potential set operations)? Should an operation name be one big concatenated string where all the potential combinations are combined at design time? SQL sure didn't do it this way: it went with a command language for base manipulations, then with stored procs with fixed names for one-offs (oh, and declarative rules, *triggers*, for eventing; there the name didn't matter).
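Here is a minimal sketch of the "command components" alternative, assuming a plain in-memory list of trades. `Trade`, `Selection` and its methods are hypothetical names, and only the getter side is implemented. The second half counts how many design-time names the concatenated style needs for the same small vocabulary.

```python
from dataclasses import dataclass
from itertools import product
from typing import Any, Iterable, List


@dataclass
class Trade:
    symbol: str
    price: float


class Selection:
    """Small composable pieces instead of one concatenated operation name.

    Only the getter side is implemented; a setter would follow the same pattern.
    """

    def __init__(self, items: Iterable[Any]) -> None:
        self._items: List[Any] = list(items)

    def get(self, attribute: str) -> "Selection":
        # Getter applied to one of the object's enumerated attributes.
        return Selection(getattr(item, attribute) for item in self._items)

    # Ordered-set operations.
    def first(self) -> Any:
        return self._items[0]

    def last(self) -> Any:
        return self._items[-1]

    def highest(self) -> Any:
        return max(self._items)


trades = Selection([Trade("IBM", 91.0), Trade("IBM", 92.5), Trade("IBM", 90.75)])
last_trade_price = trades.get("price").last()  # the role GetLastTradePrice plays

# Counting design-time names versus components.
accessors = ["get", "set"]
set_ops = ["first", "last", "highest"]
attributes = ["price", "symbol"]
concatenated = [
    f"{a.capitalize()}{op.capitalize()}Trade{attr.capitalize()}"
    for a, op, attr in product(accessors, set_ops, attributes)
]
components = len(accessors) + len(set_ops) + len(attributes)
```

With two accessors, three ordered-set operations and two attributes, the concatenated style needs 12 names (GetLastTradePrice, SetFirstTradeSymbol, ...) while the composed style needs 7 pieces; the gap grows multiplicatively as each list grows.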

Anyway... all I really wanted to do was share some of my favorite verbs:

insert, add, update, set, delete, remove, erase, get, select, fetch, subscribe, publish, receive, listen, send, notify, call, invoke, create, destroy, deallocate, dispose, show, view, hide, close, open, drop, restore, resume, suspend, pause, clear, filter, cache, run, start, execute, stop, allocate, new, advance, go, post, do, find, locate, evaluate, jump, visit, goto, exit, break, spawn, join, split, lock, unlock, process, print, transfer, throw, push and pop.

It's a pretty good list of verbs. There are some people that REST'd after only finding two or three verbs. But they just like wrapping verbs inside of other verbs :-) That's ok - I don't think they hate verbs. They just like a couple verbs a whole bunch!

I like the idea of having an enumerated set of verbs. This isn't the list, though - too much redundancy. Also, some verbs are really just combinations of other verbs. Hmmm... first order verbs. Second order verbs. How many first order verbs does a system need to have a semantic foundation for 'doing' things?
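One way to probe the first-order versus second-order question is to pick a tiny set of primitive verbs and see which others fall out as compositions. In this hypothetical sketch, `get`, `put` and `delete` are treated as first-order verbs over a dictionary, and `update`, `move` and `transfer` are built only from them; whether these are the right primitives for a real system is exactly the open question.

```python
from typing import Any, Callable, Dict

Store = Dict[str, Any]


# Hypothetical first-order verbs.
def get(store: Store, key: str) -> Any:
    return store[key]


def put(store: Store, key: str, value: Any) -> None:
    store[key] = value


def delete(store: Store, key: str) -> None:
    del store[key]


# Second-order verbs, defined only in terms of the first-order ones.
def update(store: Store, key: str, fn: Callable[[Any], Any]) -> None:
    put(store, key, fn(get(store, key)))


def move(store: Store, old_key: str, new_key: str) -> None:
    put(store, new_key, get(store, old_key))
    delete(store, old_key)


def transfer(source: Store, target: Store, key: str) -> None:
    put(target, key, get(source, key))
    delete(source, key)
```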

And no, I'm not a verb bigot. I love adverbs too! Nouns suck - although I admire those that have the patience to play in the noun space. Adjectives are cool - only in that they are simple ...

It is my belief that we are *slowly* moving towards a semantic and service oriented world. Operation names that are concatenated strings of verbs, nouns, adjectives and adverbs worry me. There is a better way.

Saturday, March 13, 2004

OpenStorm Blog

Ryan, Dave and the crew have kicked off the OpenStorm blog. It will focus more on using the Service Orchestrator and general questions related to BPEL and service composition.

See:

http://www.openstorm.org

I don't see an RSS feed, but I'll ask them to put one up.

Thursday, March 11, 2004

AT&T and GrandCentral Partner

See:

http://www.internetnews.com/ent-news/article.php/3324191

"AT&T WebService Connect allows different applications from different sources to communicate without time-consuming custom coding. And because it is XML-based, it's not tied to any one operating system or programming language.

Developed over the last year with partner Grand Central Communications, WebService Connect plays into AT&T's broader strategy of evolving from a long-distance phone company to a provider of enterprise network services."

...

"The service will be rolled out gradually in the coming months. It starts at about $34,000 per month, although prices could run higher depending on usage. In terms of its telecom competitors, AT&T believes it is farthest along in offering Web services to its customers."

Congratulations to GrandCentral!

Wednesday, March 10, 2004

Jim Waldo clarifies position

Jim does a great job clarifying his position on standards:

http://www.artima.com/forums/flat.jsp?forum=106&thread=4892

Jim states:

Point one: Just because something is called a standard doesn't make it open; and something that isn't a standard is not, because of that, proprietary.

Point two: A standards body is often a lousy place in which to invent a technology.

Point three: The previous posting was not a veiled (thinly or otherwise) attack on any particular standards group or collection of standards groups.

Point four: If there are multiple groups competing to write a standard for the same thing, it is probably a safe bet that the technology being standardized isn't ready for standardization.

Well done. I think Jim fully understands it. Sucks, doesn't it? Oh well.

Now, I'd like to see Jim (the brain behind Jini) take some of his vast knowledge and write a couple of new standards... for starters, I think he'd have quite a bit to add to WS-Discovery (WS-Leasing, etc.).

So, here is the WS-* formula:

1. Find a concern (think separation of concerns; they usually end in "-ility").

2. Find a remedy to the concern.

3. Take the name of the concern and put the letters "WS-" in front of it.

4. Use as much protocol (with XML) as you can to describe the remedy; use WSDL and the other WS-* specs to weave a full story.

5. Publish your spec.

6. Wait for either MS or IBM to "expand" the idea, change the name and republish it with a higher degree of separation of concerns and a name that has a striking resemblance to the name you gave it.

7. Bicker to the press about it.

8. Wait approximately 6 months. Feel free to knock out your reference implementation during this period.

9. Watch the MS-IBM version become popular.

10. Terminate your version and publicly support the MS-IBM version. Be happy that a spec exists.

It really is a very simple, straightforward process. Best of luck.

Tuesday, March 09, 2004

Supply Chain Orchestration: RFID meets BPEL

Last week I made a visit to Boston to meet the people at Connecterra. These guys are in the "RFID middleware" space. This category is often called a 'savant' - although the industry seems to be moving beyond this term.

It was absolutely fascinating to see RFID signals get picked up by the readers and sent to specialized RFID middleware, where the signals were aggregated, filtered and eventually turned into web service (SOAP) calls. These calls could then be consumed by a BPEL engine for processing. Immediately, the opportunities for "supply chain orchestration" become clear.
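Here is a rough Python sketch of the middleware steps described above: aggregate, filter, then emit a SOAP call. It assumes each read is a simple record of reader, tag and timestamp. The de-duplication window, the tag-prefix filter, the `tagObserved` operation and the `urn:example:supplychain` namespace are all hypothetical. This is not Connecterra's actual API.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass
from typing import Dict, Iterable, List, Tuple

SOAP_ENV = "http://schemas.xmlsoap.org/soap/envelope/"  # SOAP 1.1 envelope namespace
APP_NS = "urn:example:supplychain"  # hypothetical namespace for the business call
ET.register_namespace("soap", SOAP_ENV)
ET.register_namespace("sc", APP_NS)


@dataclass(frozen=True)
class Read:
    reader: str  # which reader saw the tag
    tag: str     # tag identifier (e.g. an EPC)
    t: float     # time of the read, in seconds


def aggregate(reads: Iterable[Read], window: float = 2.0) -> List[Read]:
    """Drop a read if the same reader saw the same tag within the previous `window` seconds."""
    last_seen: Dict[Tuple[str, str], float] = {}
    kept: List[Read] = []
    for r in sorted(reads, key=lambda r: r.t):
        key = (r.reader, r.tag)
        prev = last_seen.get(key)
        if prev is None or r.t - prev > window:
            kept.append(r)
        last_seen[key] = r.t
    return kept


def filter_tags(reads: Iterable[Read], prefix: str) -> List[Read]:
    """Keep only the tags this business process cares about (hypothetical prefix rule)."""
    return [r for r in reads if r.tag.startswith(prefix)]


def to_soap(read: Read) -> bytes:
    """Wrap one observation in a SOAP 1.1 envelope for a hypothetical tagObserved operation."""
    env = ET.Element(f"{{{SOAP_ENV}}}Envelope")
    body = ET.SubElement(env, f"{{{SOAP_ENV}}}Body")
    obs = ET.SubElement(body, f"{{{APP_NS}}}tagObserved")
    ET.SubElement(obs, f"{{{APP_NS}}}reader").text = read.reader
    ET.SubElement(obs, f"{{{APP_NS}}}tag").text = read.tag
    ET.SubElement(obs, f"{{{APP_NS}}}time").text = str(read.t)
    return ET.tostring(env, encoding="utf-8")
```

A BPEL process would then receive each envelope as an ordinary web service invocation. Because de-duplication happens in the middleware, the process sees one event per arrival rather than one per radio read.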

I've been working on a white paper called "Supply Chain Orchestration" with Bob Betts for the last couple of weeks. The topic is huge and the impact is significant. We should have the paper done in a couple of weeks; I'll post a note when it is available.

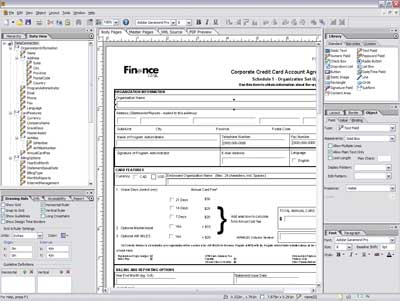

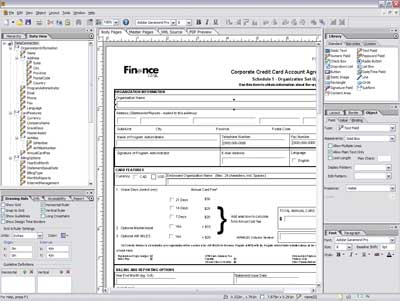

Adobe launches beta of XML/PDF Form Design Software

See:

Adobe Launches Public Beta of New XML/PDF Form Design Software

"Developers can easily integrate form data with core enterprise systems via XML, OLEDB and web services. Additionally, Adobe Designer allows users to design forms that can be used with digital signature technologies for facilitating secure electronic transactions."

Very exciting!

WS-Discovery and Jini

News.com and Ron Schmelzer have once again teamed up to inform the public on web service specifications:

WS-Discovery and Jini both have a discovery component, and Jini is a Java-only thing. A couple of other things worth noting:

1. Sun made a huge mistake by not bundling Jini with the J2EE stack early. This killed Jini: it was considered a 'device only' API.

2. Jini is an API, not a protocol. They later rewrote this functionality as a protocol for JXTA.

3. Jini bundled remedies to concerns (leasing, discovery, proxy, service matching and tuple space) in a single spec.

My belief is that WS-Discovery will catch on as long as people don't say, "The idea is very much the same as Jini." As cool as Jini was, it was doomed by Sun. If you want to describe WS-Discovery, say "it's a multicast framework for dynamically finding resources on a network (WAN, LAN, scatternet, piconet, etc.) without knowing the address of any resource ahead of time."

WS-Discovery isn't bundling a ton of things together - it is a lightweight protocol for finding stuff across a variety of networks. It isn't based on a single language and has good support. It can make it - as long as we don't accidentally kill it in the cradle.
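To make "multicast framework" concrete, here is a sketch in Python of a WS-Discovery Probe sent over UDP multicast. The namespace URIs, the `239.255.255.250:3702` multicast endpoint and the message layout follow our understanding of the later April 2005 revision of the spec. Treat them as assumptions, since the draft current when this was written may differ. Replies (ProbeMatches) come back unicast to the sender, which is why the sketch reads from the same socket after sending.

```python
import socket
import uuid
import xml.etree.ElementTree as ET
from typing import List, Optional, Sequence, Tuple

# Assumed URIs, taken from the April 2005 WS-Discovery revision; earlier drafts may differ.
SOAP12 = "http://www.w3.org/2003/05/soap-envelope"
WSA = "http://schemas.xmlsoap.org/ws/2004/08/addressing"
WSD = "http://schemas.xmlsoap.org/ws/2005/04/discovery"
PROBE_ACTION = WSD + "/Probe"
DISCOVERY_TO = "urn:schemas-xmlsoap-org:ws:2005:04:discovery"
MCAST_ADDR, MCAST_PORT = "239.255.255.250", 3702

for prefix, uri in (("env", SOAP12), ("wsa", WSA), ("wsd", WSD)):
    ET.register_namespace(prefix, uri)


def build_probe(scopes: Optional[Sequence[str]] = None) -> bytes:
    """Build a Probe; with no Types or Scopes it is meant to match any target service."""
    env = ET.Element(f"{{{SOAP12}}}Envelope")
    header = ET.SubElement(env, f"{{{SOAP12}}}Header")
    ET.SubElement(header, f"{{{WSA}}}Action").text = PROBE_ACTION
    ET.SubElement(header, f"{{{WSA}}}MessageID").text = f"urn:uuid:{uuid.uuid4()}"
    ET.SubElement(header, f"{{{WSA}}}To").text = DISCOVERY_TO
    body = ET.SubElement(env, f"{{{SOAP12}}}Body")
    probe = ET.SubElement(body, f"{{{WSD}}}Probe")
    if scopes:
        ET.SubElement(probe, f"{{{WSD}}}Scopes").text = " ".join(scopes)
    return ET.tostring(env, encoding="utf-8")


def send_probe(payload: bytes, timeout: float = 2.0) -> List[Tuple[bytes, Tuple[str, int]]]:
    """Multicast the Probe and collect replies until none arrives for `timeout` seconds."""
    replies: List[Tuple[bytes, Tuple[str, int]]] = []
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP) as sock:
        sock.setsockopt(socket.IPPROTO_IP, socket.IP_MULTICAST_TTL, 1)  # stay on the local subnet
        sock.settimeout(timeout)
        sock.sendto(payload, (MCAST_ADDR, MCAST_PORT))
        try:
            while True:
                replies.append(sock.recvfrom(65535))
        except socket.timeout:
            pass
    return replies
```

On a LAN, calling `send_probe(build_probe())` would return the raw ProbeMatches replies. Parsing them is left out to keep the sketch short.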

Thursday, March 04, 2004

The Web Service Dial Tone

Web Services are failing and I know why.

Most people I talk to think the lack of adoption is for one of two reasons:

1. The huge WS-* stack is too complicated and it reminds them of CORBA.

2. We haven't found a killer app for ws.

These are both interesting, but in my opinion they are not the real reason web services are failing. I firmly believe the answer is very simple: we haven't created a web services dial tone. When I plug my laptop into a network, it immediately sends a broadcast message to the network. Certain devices like routers, firewalls and gateways respond to the inquiry and pass on some information about their capabilities and service offerings. This transparent conversation creates a 'network dial tone': it enables a device to plug into a network, discover the services and converse with them. This capability is at the heart of our TCP/IP networks, but it has not been realized in our 'web service networks'.

In order to create a ws-dialtone we need only a handful of capabilities.

1. Consumer-side applications need the ability to send a broadcast message to a network (UDP for web services).

2. Producer-side applications need to be able to listen to the broadcast and respond. They also need the capability to broadcast their availability.

3. UDP-style broadcasts are limited to a sub-net. Thus, sub-net routing (via a SOAP router) is critical.
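The three capabilities above can be modeled in a few lines. This in-memory Python sketch uses no real networking, and all names and addresses are hypothetical. It shows why item 3 matters: a broadcast stays on its own sub-net, so a consumer can only find producers elsewhere if a SOAP router relays the probe.

```python
from dataclasses import dataclass, field
from typing import FrozenSet, List, Optional, Set, Tuple


@dataclass
class Producer:
    address: str
    contracts: Set[str]

    def on_probe(self, contract: str) -> Optional[str]:
        """Capability 2: listen for probes and answer only if we offer the contract."""
        return self.address if contract in self.contracts else None


@dataclass
class Subnet:
    name: str
    producers: List[Producer] = field(default_factory=list)
    announcements: List[Tuple[str, FrozenSet[str]]] = field(default_factory=list)

    def join(self, producer: Producer) -> None:
        """Capability 2, second half: a producer announces itself when it arrives."""
        self.producers.append(producer)
        self.announcements.append((producer.address, frozenset(producer.contracts)))

    def broadcast(self, contract: str) -> List[str]:
        """Capability 1: a broadcast reaches every producer on this sub-net, and no further."""
        hits: List[str] = []
        for p in self.producers:
            addr = p.on_probe(contract)
            if addr is not None:
                hits.append(addr)
        return hits


@dataclass
class SoapRouter:
    """Capability 3: relays a probe to the other sub-nets it is attached to."""
    subnets: List[Subnet]

    def probe(self, origin: Subnet, contract: str) -> List[str]:
        hits = origin.broadcast(contract)
        for s in self.subnets:
            if s is not origin:  # the origin sub-net already heard the broadcast
                hits.extend(s.broadcast(contract))
        return hits
```

A consumer on sub-net `a` that broadcasts for a single sign-on producer living on sub-net `b` gets nothing back on its own. Once a `SoapRouter` attached to both sub-nets relays the probe, it gets the producer's address.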

Much of this functionality is available via the WS-Discovery specification. However, many people in the web services community view the protocol as a tool strictly for ad-hoc networks or consumer hardware devices. Although those are part of its target audience, it also has broader applicability in the enterprise.

As we continue our movement towards contract-based development, we will begin to see more emphasis on standardizing the contract. Today, our standardized contracts come in the form of a platform like J2EE (technical contracts) or from industry working groups (business contracts). The combination of the standardized contract, the discoverable implementation and late binding will introduce a computing model that enables a service-oriented system to automatically find producers for a predetermined piece of functionality and bind to them. Imagine installing a workflow system that, immediately after installation, found all of its dependent services (LDAP, single sign-on, etc.) and registered the bindings to those services. This is the vision of a service-oriented enterprise: it focuses on simplifying the integration of systems by using ubiquitous protocols, standardized contracts and late binding on a dial-tone network.
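The install-time scenario, in which a workflow server finds LDAP and single sign-on on its own, boils down to resolving a list of required contracts against whatever discovery returns. Here is a minimal Python sketch. It assumes a hypothetical `discover` callback that maps a contract URI to the endpoints that answered. The contract URIs are made up, and "take the first endpoint" is a deliberately naive selection policy.

```python
from typing import Callable, Dict, Iterable, List

# Hypothetical discovery hook: contract URI -> endpoints that answered a probe for it.
Discover = Callable[[str], List[str]]


def bind_dependencies(required: Iterable[str], discover: Discover) -> Dict[str, str]:
    """Late-bind each required contract to the first endpoint that discovery returns.

    Raises LookupError naming every contract that no producer on the network offers.
    """
    bindings: Dict[str, str] = {}
    missing: List[str] = []
    for contract in required:
        endpoints = discover(contract)
        if endpoints:
            bindings[contract] = endpoints[0]  # naive policy: first responder wins
        else:
            missing.append(contract)
    if missing:
        raise LookupError(f"no producer found for: {', '.join(missing)}")
    return bindings
```

For example, with `offers = {"urn:example:contract:ldap": ["ldap://dir.example.com"]}`, calling `bind_dependencies(["urn:example:contract:ldap"], lambda c: offers.get(c, []))` returns `{"urn:example:contract:ldap": "ldap://dir.example.com"}`. A missing producer fails at install time rather than at the first call.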

Tuesday, March 02, 2004

Whitehorse & Virtualization

Microsoft has been touting a next-generation designer for creating services and facilitating their deployment. As Microsoft puts it:

"When creating mission-critical software, application architects often find themselves communicating with their counterparts who manage data center operations. In the process of delivering a final solution, the application's logical design is often found to be at odds with the actual capabilities of the deployment environment. Typically, this communication breakdown results in lost productivity as architects and operations managers reconcile an application's capabilities with a data center's realities. In Visual Studio Whidbey, Microsoft will mitigate these differences by offering a logical infrastructure designer (Figure 19) that will enable operations managers to specify their logical infrastructure and architects to verify that their application will work within the specified deployment constraints."

The environment allows you to drag a service description to a physical node and drop it on the node to signify deployment.

At first this seemed like a great idea, but when I spent some time with the IBM grid team, they quickly reminded me that "services belong to the network, not a predefined physical node". Hardcoding service locations to a physical node kills the benefit of virtualization. Well, I agree: you want to drag your service to a "service network", and there should be various sub-nets partitioned by resources and capability.
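One way to read "drag your service to a service network" is that the design declares a partition and some requirements, and the concrete node is chosen at deploy time. Below is a hypothetical Python sketch of that placement step. It is not Whitehorse's or IBM's actual model. It picks the node with the most free CPU in the requested partition that also has the required capabilities, and it reserves the CPU there.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional


@dataclass
class Node:
    name: str
    partition: str               # a sub-net grouped by resources/capability
    free_cpu: int
    capabilities: FrozenSet[str]


@dataclass(frozen=True)
class ServiceRequirements:
    partition: str               # where in the "service network" the service belongs
    cpu: int
    capabilities: FrozenSet[str] = frozenset()


def place(req: ServiceRequirements, network: List[Node]) -> Optional[Node]:
    """Choose a node at deploy time instead of hardcoding one at design time.

    Picks the candidate in the requested partition with the most free CPU that has
    every required capability, reserves the CPU on it, and returns it (or None).
    """
    candidates = [
        n for n in network
        if n.partition == req.partition
        and n.free_cpu >= req.cpu
        and req.capabilities <= n.capabilities
    ]
    if not candidates:
        return None
    best = max(candidates, key=lambda n: n.free_cpu)
    best.free_cpu -= req.cpu
    return best
```

The service description never names a specific node, so the same description can be redeployed as nodes come and go. Dropping it onto one physical node would throw that flexibility away.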

But, I must admit... the Whitehorse demo sure looks cool... It is very "Microsoft".