From the RFID Journal, see:

http://www.rfidjournal.com/article/view/779/1/1/

Delivering Business Services through modern practices and technologies. -- Cloud, DevOps and As-a-Service.

Friday, January 30, 2004

Process Contracts

The concept of 'design by contract' was made popular in the object oriented world - or perhaps more precisely, in the component-oriented world. Much of the work that went into this study was centered around making a single module adhere to a contract and adhere in a consistent manner. When the Java language was introduced many people bumped into the 'interface' for the first time. This was the vehicle that guaranteed 'what' an object could do, but not 'how' it would be done.

This concept was extended with WSDL. The extensions included: defining the interface in a language-neutral manner, allowing for multiple bindings between interface and implementation, allowing for a more message-centric design of parameters / arguments, strong support for remote calls, and declaration of quality attributes as declarative policies (reliability, integrity, etc.).

In the process world, we continue to leverage the 'design by contract' mentality. Languages like BPEL expose themselves to the outside world through WSDL. This means that every process IS A service with a contract. In addition, BPEL makes all external calls VIA web services. Thus, all calls are first described via the contract.

As we continue to find best practices in designing BPEL code, we have tested a variety of means to get from concept to delivery. The one that seems to be gaining the most ground is what we are calling 'process contracts'. Here, the analyst takes on a 'RAD' view of the process. The goal isn't to get all the detail right on the first pass, but rather to quickly identify all of the service calls that need to be made to compose the process. You can think of this almost like the old CRC exercises (Class, Responsibility, Collaboration) - except replace 'classes' with 'service calls'.

One thing that is becoming apparent is that people want to use BPEL in different ways. I usually put the usage scenarios into one of three different buckets: B2B, A2A and composite applications. In the B2B and the A2A scenario, it is common for the endpoints to already exist, but the contract to the service (type, message, operations) still needs to be created. In the 'composite apps' space, it is common for neither an interface nor an implementation to exist. In any scenario, the designer finds that they quickly need to begin creating the interfaces or contracts.

We are finding that most people are comfortable building out a complete process by 'stubbing' out the process. This involves identifying all of the major steps (service calls) and the flow/logic between the steps. At this point, the designer isn't going into any detail about the step, just putting boundaries around 'what it does'. The designer then goes back and starts to add detail around the contract of each call. This involves detailing out the WSDL interface (not binding and service). Here the designer begins to look at using common messages and types for consistent service calls. As the designer prepares the calls for invocation, he/she will usually begin to identify if the scope of the call is correct. This is a byproduct of identifying the 'fattiness' of the message, the 'chattiness' of the calls, etc.

All of this is happening up front - meaning it is happening before we begin to 'program in the small' via your favorite language to program services: Java, C#, etc. Upon completion of the process contract, you will have identified:

1. The interface to the process itself

2. The division of labor between the services

3. The interface for each service called

4. Verification that the data needed to call each service is available to the service (var scopes)
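The four items above can be captured in a very small data structure. What follows is only a sketch of the idea in Python, not BPEL or WSDL: `ProcessContract`, `ServiceCall`, and the step names `reserveStock` and `shipOrder` are hypothetical names I made up for illustration. It assumes each stubbed call simply lists the variables it needs and the variables it produces, which is enough to check item 4.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class ServiceCall:
    """One stubbed step: what it needs and what it produces."""
    name: str
    inputs: tuple[str, ...]
    outputs: tuple[str, ...]
    is_new: bool = True  # True if no implementation exists yet


@dataclass
class ProcessContract:
    """The interface of the process plus the ordered calls it composes."""
    name: str
    inputs: tuple[str, ...]
    calls: list[ServiceCall] = field(default_factory=list)

    def missing_inputs(self) -> dict[str, list[str]]:
        """Per call, list the inputs that no earlier step has made available."""
        available = set(self.inputs)
        problems: dict[str, list[str]] = {}
        for call in self.calls:
            missing = [v for v in call.inputs if v not in available]
            if missing:
                problems[call.name] = missing
            available.update(call.outputs)
        return problems

    def new_calls(self) -> list[str]:
        """Names of the calls the service implementer still has to build."""
        return [c.name for c in self.calls if c.is_new]


# Hypothetical stub of a Fulfill Order process.
fulfill_order = ProcessContract(
    name="FulfillOrder",
    inputs=("order",),
    calls=[
        ServiceCall("checkInventory", ("order",), ("availability",), is_new=False),
        ServiceCall("reserveStock", ("order", "availability"), ("reservation",)),
        ServiceCall("shipOrder", ("reservation", "shippingAddress"), ("trackingId",)),
    ],
)

print(fulfill_order.missing_inputs())  # {'shipOrder': ['shippingAddress']}
print(fulfill_order.new_calls())       # ['reserveStock', 'shipOrder']
```

In this toy run the check flags that `shipOrder` needs a `shippingAddress` nobody supplies, which is exactly the kind of scope problem you want to find before any service gets implemented.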

It is at this point where you can begin working with your service implementer (java guy, etc.). Here is how the conversation goes:

Jeff: "Hey Bob, I just finished my process contract for the new Fulfill Order process."

Bob: "Cool. How many service calls?"

Jeff: "Well, there were a total of 8 calls. 3 of them were canned and I will be sucking them out of UDDI, but 5 of them are new."

Bob: "Did you stub out the 5 new ones?"

Jeff: "Yea.. I did my best. I'll email you the BPEL and WSDL's"

Bob: "Better yet.. just check your process into version control and I'll pick it up there"

Jeff: "Good thinking. Will do. How long do you think it will take for you to turn around the implementations?"

Bob: "Jeff, I have no idea - I haven't even seen the WSDL's yet! Let me take a look and I'll get back to you..."

Jeff: "Understood. I'm going to move on to the Replenish Inventory process."

Bob: "Sounds good - I should have estimates in a few hours."

This conversation, and this style, probably already feel familiar. What changes is that, in many cases, the process contract becomes a new deliverable in the development cycle. It forces a more structured deliverable out of the late analysis or early design stage and will likely result in more timely delivery of the end product.

Thursday, January 29, 2004

Structured Process Cases

I've been playing with variations of the "Use Case" that are more process-centric and contract oriented. Overall, I think there is a good match between capturing process descriptions from an analysis perspective and tying that information back to the BPEL.

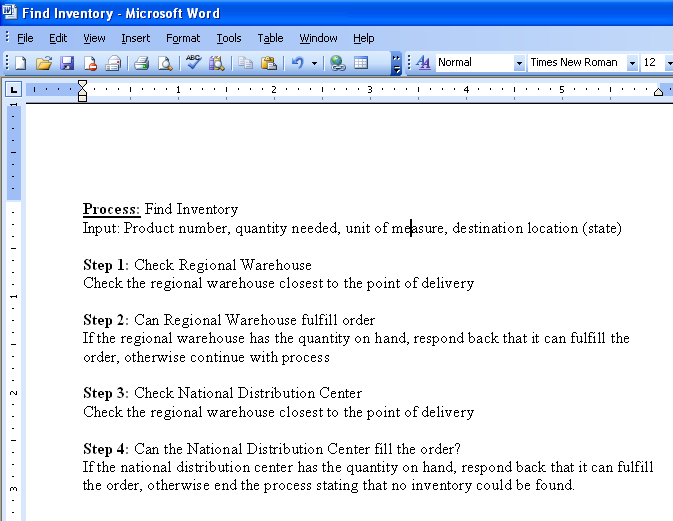

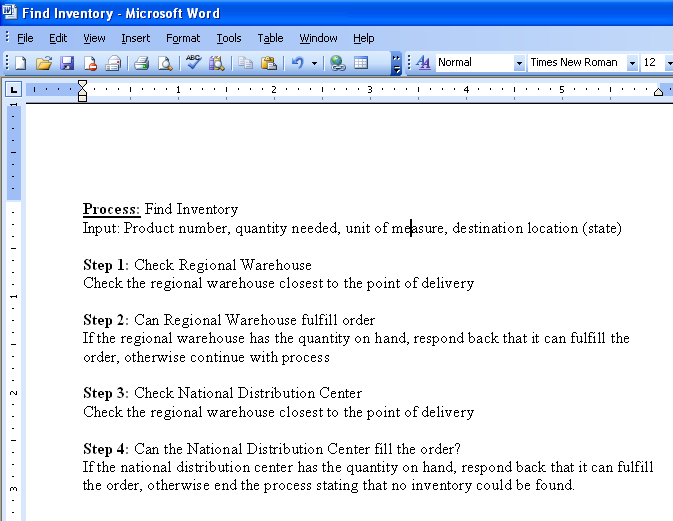

Although this is a very incomplete example, consider the following. A Process Analyst sits in front of their favorite tool (Microsoft Word) and writes down the name of the process, a small description and a handful of steps:

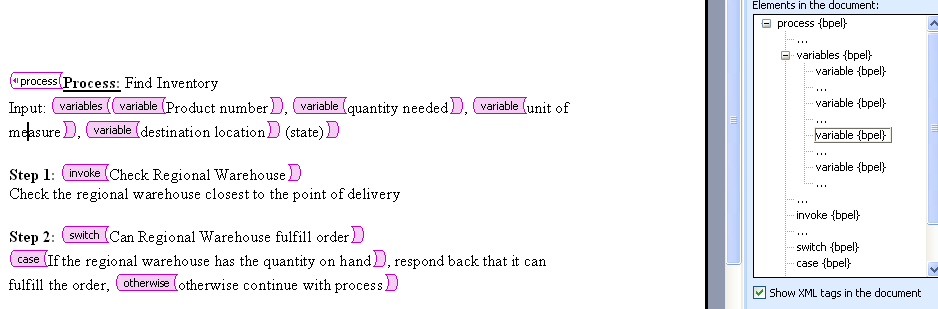

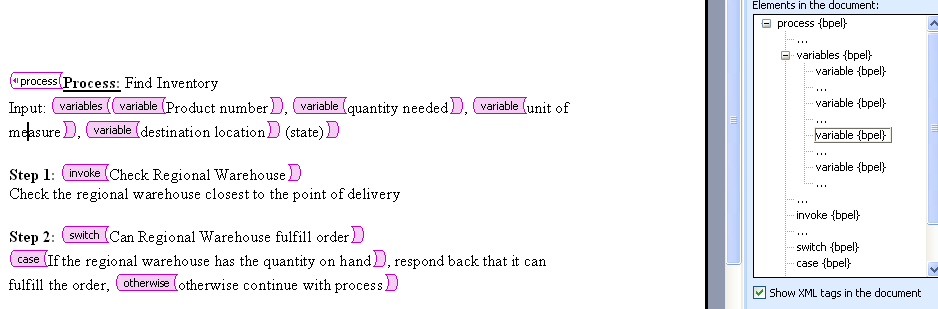

Now, the analyst adds a schema (or two) to the Word 2003 document by pulling up the "Tools: Templates and Add-Ins" window. In the example I'm using, they are adding in the BPEL schema, but they might well choose a higher-level (more abstract) grammar.

Now, the analyst goes back to the document and "paints structure" into the content. This assumes that the analyst is given some extra training in such a process (think UML-like training, "Process Case").

After painting the structure around the Process Case, the user is able to 'hide XML' so that it looks like a normal requirements document. The analyst then takes the 'requirements' and sends them off to development. But, since the document was 'painted' with a well-known schema, the developer merely uploads the requirements document directly into their orchestration tool, which stubs out the entire process for them. Now, of course there will be mismatches in syntax and scope, but the developer will make the changes and forward the structured document back to the analyst for approval.

At the same time, the developer will be creating a vocabulary of services. These will be marked up in WSDL (service:operation). Imagine terms like "checkInventory", "fillOrder", etc. These terms will then be made available to the analyst to drop into their future requirements documentation. By repeating this process, the links between analysis and design will continue to grow in strength.
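The vocabulary loop can be sketched in a few lines. This is an illustration only, assuming terms are written as plain `service:operation` strings; the service names `Inventory`, `Orders`, and `Billing`, the `sendInvoice` operation, and both helper functions are hypothetical.

```python
from __future__ import annotations


def split_term(term: str) -> tuple[str, str]:
    """Split a 'service:operation' vocabulary term into its two parts."""
    service, sep, operation = term.partition(":")
    if not sep or not service or not operation:
        raise ValueError(f"expected 'service:operation', got {term!r}")
    return service, operation


def unknown_terms(steps: list[str], vocabulary: set[str]) -> list[str]:
    """Return the steps that are not yet in the vocabulary, in document order."""
    for step in steps:
        split_term(step)  # reject malformed terms early
    return [step for step in steps if step not in vocabulary]


# Vocabulary published by the developer (service names are hypothetical).
vocabulary = {"Inventory:checkInventory", "Orders:fillOrder"}

# Steps the analyst painted into a new Process Case.
process_case_steps = [
    "Inventory:checkInventory",
    "Orders:fillOrder",
    "Billing:sendInvoice",
]

print(unknown_terms(process_case_steps, vocabulary))  # ['Billing:sendInvoice']
```

Anything the check reports is a term the analyst invented; the developer either maps it to an existing operation or adds it to the vocabulary for the next round.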

This is still an early concept but our early tests indicate that we can significantly reduce development / integration costs by using the aforementioned closed-loop mechanism.

A first peek...

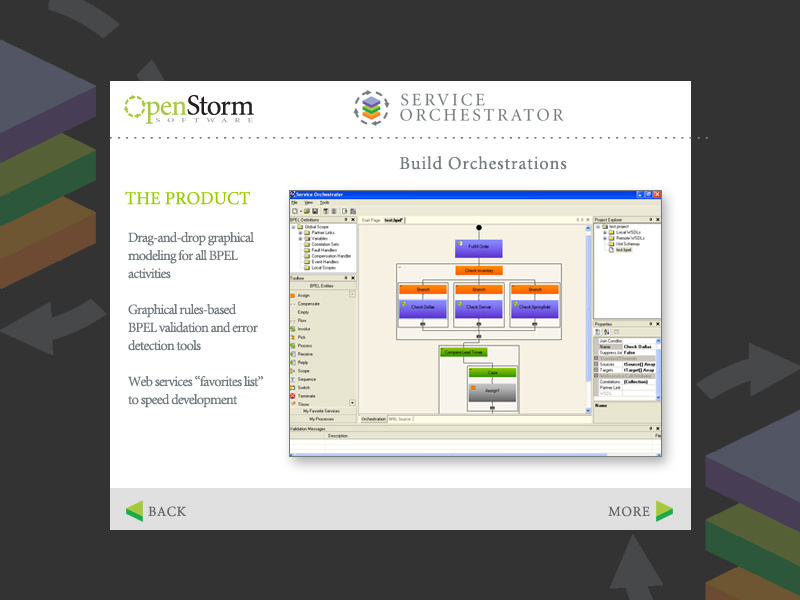

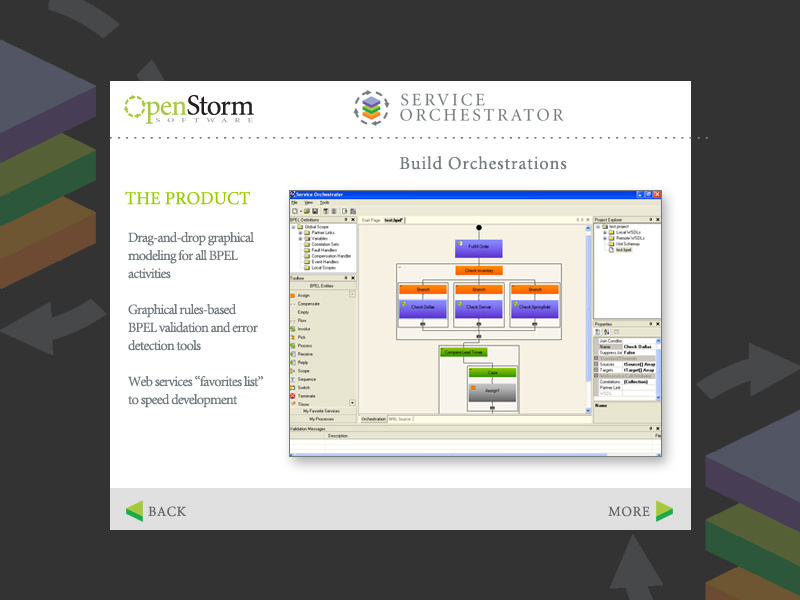

In the last few months, I've had hundreds of inquiries about the next version of the OpenStorm orchestration product. And although we are still in limited beta of the 2.0 release, I thought I'd share a single slide from the new deck.

The new product has a great feel to it, with some really interesting features. This is a shot of the main orchestration canvas, where you paint your process flow.

I'll post a bit more later on the product. Thanks to all of the people who have guided us through the endeavor and worked with us on the feature / function tradeoffs!

Friday, January 23, 2004

Does the Service Fabric make the SOA Obsolete?

I had an interesting question posed to me recently: Does the service fabric make the SOA pattern obsolete?

First, let me be clear. I have a very specific definition for the SOA:

1. It is an architectural pattern

2. It uses 3 actors (directory, consumer and producer)

3. Whereby, the consumer looks up an interface to a producer in the directory

4. The consumer creates a binding to the producer and calls it

The SOA pattern allows for dynamic lookup and binding, which means that it is a vehicle for finding the *right* implementation of a service at runtime. The service fabric often plays a similar role, but it often does it using routers. In this case, the fabric is aware of the implementations of a given service and when a message is sent to it, it can dynamically send the message to the *right* implementation. So, both techniques allow for a message to be dynamically delivered to a destination. But, does one make the other obsolete?

In my opinion, the answer is, "no". I am of the opinion that people will often mix the techniques. In essence, the service call will still be designed to do a UDDI style lookup, but the destination may likely point at a router. Alternatively, developers will continue to write services and populate the descriptions in a directory, then make their router aware of the directory. In this case, the directory is still being used, just by the router instead of the programmer.
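To make the mixed case concrete, here is a toy sketch in plain Python, with no UDDI or real router involved. `Directory`, `Router`, the interface names, and the two warehouse functions are all hypothetical. The consumer does an ordinary lookup-bind-call, the thing it binds to happens to be a router, and the router in turn uses the same directory to find the implementations.

```python
from __future__ import annotations

from typing import Callable

Producer = Callable[[dict], str]


class Directory:
    """Maps an interface name to the producers published under it."""

    def __init__(self) -> None:
        self._entries: dict[str, list[Producer]] = {}

    def publish(self, interface: str, producer: Producer) -> None:
        self._entries.setdefault(interface, []).append(producer)

    def lookup(self, interface: str) -> list[Producer]:
        return list(self._entries.get(interface, []))


class Router:
    """Fabric-style router: picks an implementation per message via the directory."""

    def __init__(self, directory: Directory, interface: str,
                 choose: Callable[[dict, list[Producer]], Producer]) -> None:
        self._directory = directory
        self._interface = interface
        self._choose = choose

    def __call__(self, message: dict) -> str:
        candidates = self._directory.lookup(self._interface)
        if not candidates:
            raise LookupError(f"no producer for {self._interface}")
        return self._choose(message, candidates)(message)


def us_warehouse(message: dict) -> str:
    return f"US warehouse checked {message['sku']}"


def eu_warehouse(message: dict) -> str:
    return f"EU warehouse checked {message['sku']}"


def by_region(message: dict, candidates: list[Producer]) -> Producer:
    """Routing rule: EU messages go to the second implementation."""
    return candidates[1] if message.get("region") == "EU" else candidates[0]


directory = Directory()
directory.publish("CheckInventory.impl", us_warehouse)
directory.publish("CheckInventory.impl", eu_warehouse)
# The router is what the consumer finds under the public interface name.
directory.publish("CheckInventory", Router(directory, "CheckInventory.impl", by_region))

# Consumer side: look up, bind, call.
binding = directory.lookup("CheckInventory")[0]
print(binding({"sku": "A-1", "region": "EU"}))  # EU warehouse checked A-1
```

The consumer code never changes whether the directory hands back a real producer or a router, which is why I don't see one technique retiring the other.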

Wednesday, January 21, 2004

Let's Merge!

Did anyone else catch this?

What? I say what?

Can you imagine the CEO of J.P. Morgan Chase and the CEO of Bank One Corp. talking before their merger about web services?

"WHAT??? Bank One isn't using web services - THE DEAL IS OFF!!!"

Sunday, January 18, 2004

SeeBeyond does rush job?

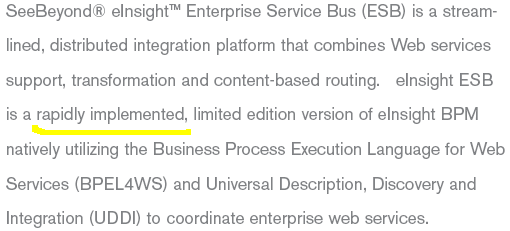

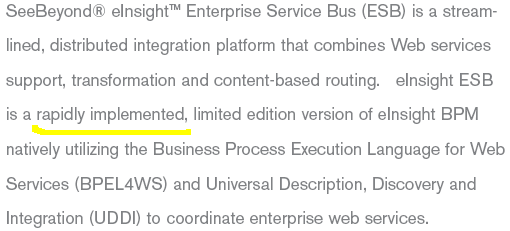

I found this in the new literature for the SeeBeyond ESB/BPEL implementation:

For some reason, the phrase "a rapidly implemented, limited edition version" doesn't make me feel all warm about the product....

Here is how I read between the lines:

1. Product Marketing didn't give Engineering time to do it right

2. Engineering is likely pissed, and let marketing know it

3. Marketing is OK with it, because they still can't figure out how to position the ESB against their more profitable lines

4. A 'limited edition version' will give marketing more time to think about what to do about the 'creative disruption' of SOI

Wednesday, January 14, 2004

Features of a Service Oriented Language

Service Oriented, Protocol Connected, Message Based

Here are some casual thoughts on what I would like to see in a service oriented language. This is my first attempt at this... I'm sure I'll get some feedback ;-) and will update it.

Services

- The service is a first order concept, both sending and receiving

- Support for the strong interface (think WSDL)

- Mandatory support for Long Running Transactions

- Faults and Compensation are first order

- Protocols for transport and fulfillment of NFR are intentionally left out of scope

Messages

- Message is a first order concept

- Message is defined using a platform independent markup

- Support for message correlation properties; helpers for distributed state mgmt.

- Universal addressing scheme (assumes router)

Types / Vars

- Typing & vars are consistent with message system

- Remote variables (think REST)

Functional Containment

- The 'service' is a container (and managed)

- The 'object' continues to live. Objects are contained in services.

Invocation and Service Hosting

- WSDL's can be imported directly into the runtime. Operations and messages become first order citizens.

- Access & manipulation of binding / listener is first class.

- Language assumes that all systems are peer (both client and server)

Schema Manipulation

- DDL: Import / export / create / modify (consistent with type system)

Data Manipulation

- DML: transform (consistent with type system)

Flow Control

- Usual branching & looping

- Parallel execution; parallel joins (first order)

- Forced sequential processing

Event Based

- Events are a first order concept

- Time & activity based events

Metadata Ready

- Declarative metadata becomes a first order item (see jdk1.5)

- Service oriented loading / unloading of metadata / models / gen’d code at runtime

- Reflective knowledge of declarative non-functional policies (think ws-policy access)

Base Service & Extensions

- Concept of an extendable base service

- Service may have multiple interfaces

- Aspects may easily be applied to service

- Language is extended via ‘more services’ not ‘more syntax’

Service Network Awareness

- Service is aware of the network that it lives in (topology, routers, etc.)

- Service is aware of service-enabled remedies to non functional requirements (Virtualization, etc.)

- Service has the ability to modify the service network at runtime

OK. So a good question is, "which of these are part of the 'language', which are 'service libraries' or other?" I'm in favor of the *least* amount of required syntax possible. But, I like the idea of having *mandatory* service library extensions.
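As one illustration of the "library, not syntax" option, here is how "Faults and Compensation are first order" might look if it were provided by a service library rather than by the language. This is a sketch in Python under my own assumptions: `Step`, `run_with_compensation`, and the step names are hypothetical, and a real long-running transaction would also need persistence and correlation, which are left out.

```python
from __future__ import annotations

from typing import Callable, NamedTuple


class Step(NamedTuple):
    """A unit of work paired with the action that undoes it."""
    name: str
    action: Callable[[], None]
    compensate: Callable[[], None]


def run_with_compensation(steps: list[Step]) -> list[str]:
    """Run steps in order; on a fault, compensate completed steps in reverse."""
    log: list[str] = []
    completed: list[Step] = []
    for step in steps:
        try:
            step.action()
        except Exception as fault:
            log.append(f"fault in {step.name}: {fault}")
            for done in reversed(completed):
                done.compensate()
                log.append(f"compensated {done.name}")
            return log
        completed.append(step)
        log.append(f"completed {step.name}")
    return log


def noop() -> None:
    """Placeholder for a real service call or its compensation."""


def fail_shipping() -> None:
    raise RuntimeError("carrier unavailable")


steps = [
    Step("reserveStock", noop, noop),
    Step("chargeCard", noop, noop),
    Step("shipOrder", fail_shipping, noop),
]

for line in run_with_compensation(steps):
    print(line)
```

Nothing here needed new syntax; the pairing of an action with its compensation is just data handed to a library function.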

Sunday, January 11, 2004

Clarification on Orchestration

In a recent ZapThink article, which has an amazingly similar name as my blog.... ;-)

I found this:

Ok. Pretty close... but I just want to clarify a bit.

First generation orchestrations are typically used as a process-integration or a system-to-system integration mechanism. This means that the orchestrations are VERY message oriented and the granularity of the operations is almost always coarse.

In addition, there is a logical difference between "service orchestration" and "process orchestration". Typically, service orchestration is lower level. It involves many calls to "technical services", shields the higher layers from the ugly technical details, and is often exposed as a single business service. "Process orchestrations" are orchestrations that occur at a higher level. In these cases, virtually all of the calls are to other 'processes' and tie together a digital business process.

Now - in the future (2005+), I expect to see more fine-grained web services being used in 'composition' tools (a close cousin to orchestration). In this setting, more emphasis will be placed on in-lined services and performant service compositions. But for now, this is outside of the scope of what we typically classify as 'orchestration' or 'choreography'.

Friday, January 09, 2004

[BlogService]

```
[BlogService]
public class HelloWorldService
{
    public String HelloWorld(String data)
    {
        return "Hello World! You sent the string '" + data + "'.";
    }
}
```

=====================

Here is what I want to do:

1. Post source code (java, c#, etc.) on a new kind of blogging engine

2. Have the blogging engine compile my code and turn it into a web service

3. Host the service for execution (with wsdl retrieval)

Done.

Think Apache Axis (with .jws features) meets a blogging engine.
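A rough sketch of what such an engine could do, written in Python rather than Java or C# and with no real hosting or WSDL generation. Everything here is hypothetical: `BlogEngine`, the `blog_service` decorator (standing in for the `[BlogService]` marker), and `describe()` as a crude substitute for WSDL retrieval. It assumes the posted source is trusted; a real engine would need sandboxing before compiling anything a stranger posts.

```python
from __future__ import annotations

from typing import Callable


def blog_service(func: Callable) -> Callable:
    """Marker decorator, standing in for the [BlogService] attribute."""
    func._is_blog_service = True
    return func


class BlogEngine:
    """Compiles posted source and hosts the marked functions as operations."""

    def __init__(self) -> None:
        self._operations: dict[str, Callable] = {}

    def publish(self, source: str) -> list[str]:
        """Compile a post and register every function marked as a service."""
        namespace: dict[str, object] = {"blog_service": blog_service}
        exec(compile(source, "<blog post>", "exec"), namespace)
        published = []
        for name, value in namespace.items():
            if callable(value) and getattr(value, "_is_blog_service", False):
                self._operations[name] = value
                published.append(name)
        return published

    def describe(self) -> list[str]:
        """List hosted operations (a stand-in for retrieving the WSDL)."""
        return sorted(self._operations)

    def invoke(self, operation: str, *args: object) -> object:
        """Call a hosted operation by name."""
        return self._operations[operation](*args)


post = '''
@blog_service
def hello_world(data):
    return "Hello World! You sent the string '" + data + "'."
'''

engine = BlogEngine()
engine.publish(post)
print(engine.describe())                     # ['hello_world']
print(engine.invoke("hello_world", "ping"))  # Hello World! You sent the string 'ping'.
```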

Thursday, January 08, 2004

Automated Contextual and Conceptual Engineering

I just ran across something I wrote a few years ago... always interesting to look back at old notes...

Automated Contextual and Conceptual Engineering

I am attempting to convey a difficult concept to readers - right now. As I write this, my Microsoft Office is checking the spelling and grammar. It is putting my words and sentences into a context and using pre-defined rules to suggest areas of syntactic improvement. Dare I say it is using simple artificial intelligence (heuristics and a knowledge base) to improve my writing.

Visionaries have been promoting the concept of the Semantic Web for some time. By putting my words, sentences and paragraphs into context, the author is able to work with the software in a more advanced manner. If my word processor knew that I was writing a research paper on 'Automated Contextual and Conceptual Engineering', it could begin acting like an automated research assistant, scanning the web for applicable articles or illustrations, followed by suggestions on document structure, content, automated bibliographies, and footnotes. The more my software knows about what I am trying to write the more help it can offer.

Pushing context engineering to the next level takes us to conceptual engineering. Here, software not only understands the context of what I write, but understands the base concepts via conceptual ontologies. Once the software understands a concept it is able to look at the attributes of the concept and begin substituting alternative values suggesting related concepts. We would probably refer to this process as the elicitation of cross-domain metaphors or analogies.

Have you ever met someone that was good at connecting the dots in a business, scientific or personal problem? Typically these people are good at applying metaphors to problems. The goal of automated contextual and conceptual engineering is to create better content in less time while educating the author as he or she develops the content.

The Web has made it easy for anyone, anywhere to publish information. Browsers, cell phones and web pads are making it easy for anyone to read published information anywhere. I believe that the progress that we have made in mass-authoring content, cross-site syndication and ubiquitous rendering has made the world better. I now believe that the time has come to begin making the content better.

This is still an interesting concept. With the advances in web services, MS Office using XML and gains in the semantic web, this kind of stuff may be closer than I originally thought.

Automated Contextual and Conceptual Engineering

I am attempting to convey a difficult concept to readers - right now. As I write this, my Microsoft Office is checking the spelling and grammar. It is putting my words and sentences into a context and using pre-defined rules to suggest areas of syntactic improvement. Dare I say it is using simple artificial intelligence (heuristics and a knowledge base) to improve my writing.

Visionaries have been promoting the concept of the Semantic Web for some time. By putting my words, sentences and paragraphs into context, the author is able to work with the software in a more advanced manner. If my word processor knew that I was writing a research paper on 'Automated Contextual and Conceptual Engineering', it could begin acting like an automated research assistant, scanning the web for applicable articles or illustrations, followed by suggestions on document structure, content, automated bibliographies, and footnotes. The more my software knows about what I am trying to write the more help it can offer.

Pushing context engineering to the next level takes us to conceptual engineering. Here, software not only understands the context of what I write, but understands the base concepts via conceptual ontologies. Once the software understands a concept it is able to look at the attributes of the concept and begin substituting alternative values suggesting related concepts. We would probably refer to this process as the elicitation of cross-domain metaphors or analogies.

Have you ever met someone that was good at connecting the dots in a business, scientific or personal problem? Typically these people are good at applying metaphors to problems. The goal of automated contextual and conceptual engineering is to create better content in less time while educating the author as he or she develops the content.

Wednesday, January 07, 2004

Things I'm Reading...

I'm in the middle of reading a few things. Here are the ones that seem interesting:

Short reads:

Steve Cook and Stuart Kent's OOPSLA report: The Tool Factory

Long reads:

Joe Armstrong's thesis: "Making reliable distributed systems in the presence of software errors"

Peter Van Roy's yet-to-be published book: "Concepts, Techniques, and Models of Computer Programming"

Tuesday, January 06, 2004

Blogging Terms

I'm going to break my number one rule about blogging: NEVER TALK ABOUT BLOGGING!

But, I read blogs - and there are a couple of things I've noticed...

BlogNosing

A variant of brown-nosing, this is the practice of always saying nice things about other people on your blog. Example: Bill did a great job of blah blah blah.. Bill always is right... blah, blah, blah. Don't get me wrong, often people will do good work and it should be acknowledged, but you know when you're BlogNosing...

Tightly Coupled Blogs

This is the practice of assuming that everyone reads your blog (and all of your friends' blogs) every day and that in effect they have tuned into your mini-soap opera. I believe that Tightly Coupled Blogs were invented by Microsoft employees. Example: Don farted, then Gudge laughed, but that was cool because Tim and Dave from the Sicily project were walking by (in building 42) and it really didn't smell that bad... A person should be able to read a single entry in your blog - and it should be able to stand on its own. Long Running Conversations that carry Session and Identity between posts are a bad practice... don't worry, I won't do a Blog Coupling Index.

BlogSlapping

Although I didn't invent this... I do feel that I've mastered it ;-) This is the practice of calling someone out on something stupid that they've said/written. Quite frankly, I don't think there is enough BlogSlapping in the world today. In the near future, I'm considering having a special "BlogSlap Schneider Day" (one free pot shot) just to give everyone a little practice. :-) Damn, that will be FUN!

And no, these aren't 'blogging patterns'

Sunday, January 04, 2004

I'm now "LinkedIn" - feel free to create a 'connection'.

https://www.linkedin.com/

I don't know how to post a link to myself, so for now, use my name and email from the main search page.

Bob Martin Demonstrates His Knowledge on Web Services

Bob Martin, from Object Mentor, decided to slam web services.

The reason I bring this up is because of how poorly he did it. Sure, there are plenty of ways to cut up web services, but unfortunately, many people still don't even have the basic concepts down. Even seasoned people like Bob Martin have so little knowledge of the subject that they tend to confuse people rather than actually make a valid point.

Bob says stuff like:

- it is rpc

- it is attached to http and we use it to get past firewalls

- it has a negative effect on coupling :-)

- it uses xml, which is "big, ugly and slow"

OK, for those of you who are new to web services: it isn't RPC, it isn't tied to HTTP, it has a positive effect on coupling, and yes, it uses XML as a typing system, which trades some performance for interoperability.

You know, I love when people have valid complaints against web services. There are plenty of them too. I'm reminded of the movie Roxanne with Steve Martin.

Do you remember the scene where he was in the bar and some big drunk called him, "Big Nose". And Steve Martin came back asking, "Big Nose? Is that the best you can do?" Then, Steve Martin went on to find 20 names for his big nose...

Hmm. Interesting. Maybe I should post "20 valid complaints about web services"... but, as always, I'll need your help! (valid complaints only, please... )

Saturday, January 03, 2004

You and Your Research

An excerpt from, "You and Your Research" by Dr. Richard Hamming:

Now, how did I come to do this study? At Los Alamos I was brought in to run the computing machines which other people had got going, so those scientists and physicists could get back to business. I saw I was a stooge. I saw that although physically I was the same, they were different. And to put the thing bluntly, I was envious. I wanted to know why they were so different from me. I saw Feynman up close. I saw Fermi and Teller. I saw Oppenheimer. I saw Hans Bethe: he was my boss. I saw quite a few very capable people. I became very interested in the difference between those who do and those who might have done...

The Microsoft Drug

I just ran across a great presentation from Todd Proebsting of Microsoft. The following statement seems to sum up the MS philosophy:

2004 Hot Technologies List

It is time once again for me to make my predictions about the hot new technologies for 2004. Dominating this year's list are items related to web services and alternative programming styles. Perhaps this is a prediction list - or maybe it is just my personal wish list...

1. Programming Model Convergence - The convergence and interlacing of the various programming models will likely surface to the top spot in 2004. As software vendors and enterprise customers consider their service oriented architectures, object oriented systems, aspects, model-driven architectures, integrated development environments and the other programmer facing technologies, they will find an inconsistent mess of technologies. 2004 will be a year of cleaning up the mess, both for ISVs and for the enterprise architects.

2. RFID - Already a hot topic, RFID is quickly becoming the "Y2K" of 2004. With the US-DOD and Walmart mandating the use of the technology, we will see the price of the tags and equipment tumble, opening up opportunities for new cost-sensitive applications.

3. Service Fabric - 2003 saw the introduction and early adoption of this enterprise enabling technology. In 2004 we can expect to see the infrastructure of web service networks continue to unfold. Look for less emphasis on "web service management" (reactive software) and more emphasis on intelligent service fabrics that proactively resolve quality related issues. Also look for the ESB to continue to gain ground, but eventually to be rolled into a small handful of services that the fabric handles. Lastly, it is likely that the protocol vendors and the fabric-via-service vendors collide, with the winner being the group that manages to pull protocols and services into a single product line.

4. SIP-based Enterprise Messaging - Many advanced organizations currently use instant messaging as a core communication vehicle. However, mainstream business has not yet adopted the technology. I believe that 2004 will be a chasm-crossing year for IM in the enterprise. Corporations will likely bring IM servers in house for security reasons - eventually, IM will be granted a role similar to that of email.

5. WS-* Rosetta Intermediary - As web services continue to be adopted, a new breed of protocol translation service will emerge. This service will act as an intermediary that resolves differences between protocols introduced by vendor one-offs, competing standards and versioning. This technology will have a role similar to the one the 'gateway' or 'bridge' had in early LAN environments, only it will focus on the web service protocols. (Note: this topic is not related to RosettaNet)

6. IP telephony - Although this is far from being a new technology, I am predicting that 2004 is an adoption year. The number of vendors offering the service has increased as well as the functionality of the implementations. We are also starting to see a market emerge for VOIP add-on products.

7. Independent Invocation Models - Most invocation models are platform / language specific. 2004 will be a breakthrough year where the invocation model is viewed as a platform independent artifact. Just as WSDL created a platform independent entity for describing the server side interface, we will see new entities created for describing the client side invocation scheme. Concepts from WSIF will be leveraged, but the mechanisms will be ages ahead of what is currently available.

8. Presentation Offerings - For the last several years, the browser has dominated as the primary presentation (UI) vehicle for applications. In 2003, a handful of startups and established players built early versions of alternative user interfaces. Look to 2004 to see a real fight for adoption of these next-gen user interfaces.

9. Advanced SOAP Foundations - Much of the work that has been accomplished in the SOAP space has laid a foundation for the normal use cases. 2004 will usher in more advanced uses of SOAP including multicast SOAP, in-proc SOAP, async SOAP, etc. In addition, we will see the WS-* specifications enter into the mainstream. In many cases, SOAP will start to be viewed as a semi-static, standalone document with editable fields that can be routed to a destination specified by its header.

10. Disconnected PMs - The Internet and the web have most programmers thinking in a 'connected-only' manner. However, with the release of SDO, many Java programmers (in addition to their .Net ADO counterparts) will begin designing systems with disconnected programming models. This will eventually lead to XML-encoded formats that leave a time-based change history that will be leveraged by both the .Net and the J2EE platforms. This technology will re-introduce batch-style off-loading of non-time-sensitive data and force synchronization vendors to become compatible with the newer technologies, thus creating some level of interoperability in data synchronization.

One underlying theme that may be noted is that many of the 'hot technologies' are still at the conceptual level and many of them are buried deep in the technology stack. As packaged applications like SAP have matured, we are finding that completely new paradigms are needed to bring a new level of functionality. The technologies that were nurtured over the last couple of years and are slated for release in 2004 will offer new programming shifts and ultimately will lead to a whole new generation of applications.
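To make item 10 a little more concrete, here is a rough sketch of what a disconnected programming model with a time-based change history might look like. This is not the SDO or ADO API; every name in it (`Change`, `DisconnectedRecord`, `replay`) is made up for illustration, and the conflict rule is the simplest optimistic check I could think of.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any, Dict, List


@dataclass
class Change:
    """One recorded edit: which field changed, old/new values, and when."""
    field_name: str
    old: Any
    new: Any
    at: datetime


@dataclass
class DisconnectedRecord:
    """Hypothetical local copy of server data that logs edits while offline."""
    values: Dict[str, Any]
    changes: List[Change] = field(default_factory=list)

    def set(self, name: str, value: Any) -> None:
        old = self.values.get(name)
        if old == value:
            return  # nothing changed, so nothing is logged
        self.changes.append(Change(name, old, value, datetime.now(timezone.utc)))
        self.values[name] = value

    def drain(self) -> List[Change]:
        """Hand over the pending change log (oldest first) and clear it."""
        pending, self.changes = self.changes, []
        return pending


def replay(server_row: Dict[str, Any], log: List[Change]) -> List[Change]:
    """Apply logged changes to the server copy and return the conflicts.

    A change conflicts when the server value no longer matches the value
    the client saw before editing (an assumed, very simple rule).
    """
    conflicts = []
    for change in log:
        if server_row.get(change.field_name) != change.old:
            conflicts.append(change)
        else:
            server_row[change.field_name] = change.new
    return conflicts


# Work offline against a snapshot, then synchronize in one batch.
server = {"qty": 5, "status": "open"}
local = DisconnectedRecord(dict(server))
local.set("qty", 7)
server["status"] = "closed"   # someone else edits the server meanwhile
local.set("status", "held")
conflicts = replay(server, local.drain())
```

The point is that the client never talks to the server while editing. It only ships the ordered change log later, which is what makes batch-style off-loading and cross-platform synchronization plausible.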
