Delivering Business Services through modern practices and technologies. -- Cloud, DevOps and As-a-Service.

Saturday, December 23, 2006

The Blog Tag

1. I constantly listen to music - favoring "classic punk rock" and gospel... an odd combination.

2. Before I got fat, I was a long distance runner and a racquetball instructor.

3. Initial funding for MomentumSI came from personal savings and a $40k loan from my mom (10k at a time). "Uhhh... Mom... any chance..."

4. I intend to write at least one more book - most likely on "strategy digitization".

5. I was a double major in Computer Science and Psychology with the intent to pursue artificial intelligence.

I know I'm supposed to tag 5 more people, but it's the holiday season... :-)

Wednesday, December 13, 2006

New Podcast on SAP and SOA

http://searchsap.techtarget.com/originalContent/0,289142,sid21_gci1234356,00.html

It's an excellent overview of the current state and future roadmap.

Friday, December 08, 2006

The Jon Udell Challenge

For Jon, I am happy - he deserves good things in life. For myself, I am sad. There is a part of me that enjoys going to read about Jon's latest geek adventure. I realize that I do so for one reason. He is so incredibly smart that it makes me feel stupid. That feeling of stupidity motivates me to learn more. I really hope that Jon doesn't become one of those mindless Microsoft snobs who views the world from a purely Microsoft perspective. This might sound insulting but I've lost too many friends to the Microsoft brain washing machine. It has taken down some good men.

That said, I am issuing a challenge to Jon:

1. Make a difference at Microsoft. Create a list of ten things that Microsoft has to change and then be ruthless in evangelizing what Microsoft must do to remedy their issues. Keep the list public and monitor the progress.

2. Eat your own dog food. If you are going to evangelize a new Microsoft technology, first show me how Microsoft uses it internally.

3. Be a good citizen. If you introduce a new concept, show me how it 'bridges cultures', as you mention in your podcast. The legacy of 'embrace and extend' will rightfully haunt Microsoft.

Congratulations to Jon for the new position. More important, congratulations to Microsoft for adding a team member that has the ability to actually make a difference.

Sunday, November 19, 2006

Linthicum on SOA Costs

Cost of Data Complexity = (((Number of Data Elements) * Complexity of the Data Storage Technology) * Labor Units)

Number of Data Elements being the number of semantics you're tracking in your domain, new or derived.

Complexity of the Data Storage Technology, expressed as a percentage between 0 and 1 (0% to 100%). For instance, Relational is a .3, Object-Oriented is a .6, and ISAM is a .8.

So, at $100 a labor unit, or the amount of money it takes to understand and refine one data element, we could have:

Cost of Data Complexity = (((3,000) * .5) * $100)

Or, Cost of Data Complexity = $150,000 USD. Or, the amount of money needed to both understand and refine the data so it fits into your SOA, which is a small part of the overall project by the way.
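For anyone who wants to check the arithmetic in the quoted formula, here is a minimal sketch in Python. The function and parameter names are my own labels for the three terms, not anything from David's post, and the range check simply mirrors the "between 0 and 1" wording above.

```python
def cost_of_data_complexity(num_elements: int,
                            storage_complexity: float,
                            labor_unit_cost: float) -> float:
    """Multiply the three terms of the quoted formula.

    num_elements       -- number of data elements being tracked
    storage_complexity -- factor between 0 and 1 (e.g. 0.3 for relational)
    labor_unit_cost    -- money needed to understand and refine one element
    """
    if not 0.0 <= storage_complexity <= 1.0:
        raise ValueError("storage_complexity must be between 0 and 1")
    return num_elements * storage_complexity * labor_unit_cost


# The quoted example: 3,000 elements, a complexity factor of .5, $100 per labor unit.
print(cost_of_data_complexity(3000, 0.5, 100.0))
```

With the quoted inputs this works out to the same $150,000 figure.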

------------------

I can't speak for David - but I can promise you, this is not how we estimate SOA efforts. I'm not even sure what David is attempting to estimate. This post has me so confused... I'd recommend deleting that post - real soon.

Wednesday, November 15, 2006

SOA Governance

http://www.infoworld.com/video/archives/2006/11/jeff_schneider.html

Saturday, November 11, 2006

WebLayers Executes Asset Governance

Last week I saw the latest demo from WebLayers on their 'Policy Based Governance Suite'. The demo hit home for one simple reason. They've done a great job of laying out the extended SDLC from a roles / assets perspective and determining 'what' needs to be governed at each stage.

Most of the vendors in the space have approached the governance problem from a registry perspective, which is an important aspect but not exactly a holistic view. WebLayers takes a methodology / lifecycle perspective. Their tooling allows you to plug in your own process with roles (Business Analysis, Application Architecture, Service Design, etc.) and identify 'what' needs to be governed in each area - then, define the policies for each asset or artifact.

Example: The Design Stage includes a "Service Designer"; this person creates a "WSDL"; and all WSDL's have a policy that "Namespaces must be used".

They do this by using an interceptor model. In essence, they've created a 'governance bus'. WebLayers provides intermediaries that sit between the asset creation tool (schema designer, IDE, etc.) and the repository that will store the asset (version control, CMDB, etc.). This allows their tool to inspect the newly created assets just after they've been created, but before they've been sent to production. The policies are applied to the assets and results displayed (pass, fail, etc.) to the author.
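To make the interceptor idea concrete, here is a rough sketch in Python. It is not WebLayers' API or implementation; every name in it (`GovernanceInterceptor`, `namespaces_must_be_used`, the list standing in for a repository) is hypothetical. I've interpreted "Namespaces must be used" as "the WSDL declares a targetNamespace", and I've assumed that an asset failing a policy is not stored, which is a choice of mine for illustration.

```python
import xml.etree.ElementTree as ET
from typing import Callable, List, Tuple

WSDL_NS = "http://schemas.xmlsoap.org/wsdl/"  # WSDL 1.1 namespace

# A policy takes the raw asset text and returns (passed, message).
Policy = Callable[[str], Tuple[bool, str]]


def namespaces_must_be_used(wsdl_text: str) -> Tuple[bool, str]:
    """Hypothetical policy: the WSDL root must declare a targetNamespace."""
    root = ET.fromstring(wsdl_text)
    if root.tag != f"{{{WSDL_NS}}}definitions":
        return False, "root element is not wsdl:definitions"
    if not root.get("targetNamespace"):
        return False, "targetNamespace is missing"
    return True, "ok"


class GovernanceInterceptor:
    """Sits between the authoring tool and the repository (here, a plain list)."""

    def __init__(self, policies: List[Policy], repository: List[str]) -> None:
        self.policies = policies
        self.repository = repository

    def submit(self, asset_text: str) -> List[Tuple[bool, str]]:
        # Apply every policy, then store the asset only if all of them passed.
        results = [policy(asset_text) for policy in self.policies]
        if all(passed for passed, _ in results):
            self.repository.append(asset_text)
        return results  # shown to the author as pass/fail feedback


good = ('<definitions xmlns="http://schemas.xmlsoap.org/wsdl/" '
        'targetNamespace="urn:example:orders"/>')
bad = '<definitions xmlns="http://schemas.xmlsoap.org/wsdl/"/>'

repo: List[str] = []
interceptor = GovernanceInterceptor([namespaces_must_be_used], repo)
print(interceptor.submit(good))
print(interceptor.submit(bad))
print(len(repo))
```

The point of the shape is that policies are just functions attached to an asset type, so adding a new rule for a role or stage doesn't touch the authoring tool or the repository.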

I've been calling this type of governance "Asset Governance" because the emphasis is on looking at the final output that is created and determining if it complies with enterprise policies. IMHO, Asset Governance is an essential component of any SOA program that utilizes an offshore element ("WSDL is the Offshore Contract").

The product was lighter on the other two types of Governance that I look for: Process Governance and Portfolio Governance. We sum it up like this:

- Portfolio Governance focuses on finding the right problem (prioritization)

- Process Governance focuses on ensuring that all the right steps are taken

- Asset Governance focuses on ensuring that the output of the steps complies with policy

I talked with the WebLayers team about the other two types of governance and received feedback that traditional I.T. Governance & Project Management packages might solve the problem (see, http://www.niku.com/). Most of these vendors built their products prior to the SOA era and have not gone back and revisited the functionality. They have not killed the "application as the unit of work" and moved to "the service as the unit of work" nor have they updated ROI formulas based on "shared services" (thus reducing investment, increasing ROI).

IMHO, the SOA Governance space will eventually find a nice intersection that includes both classic I.T. Governance, and the more modern "asset & process governance". It will be interesting to see which of the vendors will have the courage to tackle the end-to-end governance problem.

Thursday, November 09, 2006

Rejected Four Years Ago...

------------------

Hi Jeff,

I sent your proposal to Sean Rhody for his review. At this time, we regret that we will be unable to accept this article for the magazine.

Gail

Gail, here is the abstract:

=====================================

“Semantic Web Services”

The desire for computers to easily communicate has long been a goal of both computer scientists and businessmen, the latter recognizing the financial gain of seamless systems integration. Over time, this goal has been recognized through network standards like Ethernet, TCP/IP and HTTP. More recently the standardization has moved up the protocol stack. Now, XML is being used to add structure through tagging, which facilitates concept delineation and enumeration. Web Services build on this foundation and enhance the communication through additional features including object serialization/deserialization (SOAP), service registries (UDDI) and standardized service interfaces (WSDL).

Yet even with these advances computers still aren’t aware of the meaning of the text that is being sent, nor are they able to make any reasonable inferences about the data. Tim Berners-Lee and the W3C have been tackling this problem through an initiative dubbed the “Semantic Web”. This initiative uncovers the semantic meanings of transactions allowing companies to use a common dictionary and also enabling like terms to be disambiguated (“Automobile == Car”).

The use of the Semantic Web for concept delineation and Web Services for interoperability is enabling a new breed of applications known collectively as “Semantic Web Services”. This article will explore the state of semantic ontologies, business grammars and emerging commercial products.

=====================================

Ok, the year was 2002, and I did refer to SOAP as an object serialization mechanism - perhaps it was appropriate for them to reject the article ;-) Now that we're approaching 2007 I believe that we'll start to hear more and more on this subject - who knows, maybe I'll resubmit the abstract!

Friday, October 20, 2006

CIO Paul Coby is Waiting for VHS

ROTFL.

Uh, Paul - the VHS version of SOA came out 5 years ago. And SOA isn't about the stupid standards - it's a way of integrating business and I.T.

Sunday, October 01, 2006

OASIS SOA-RM Passes

The ballots for approval of Reference Model for Service Oriented Architecture v1.0 as an OASIS Standard (announced at [1]) has closed. There were sufficient affirmative votes to approve the specification. However, because there was a negative vote, the SOA Reference Model Technical Committee must decide how to proceed, as provided in the OASIS TC Process, at http://www.oasis-open.org/committees/process.php#3.4. A further announcement will be made to this list regarding their disposition of the vote.

IMHO, the real value of this passing is that people can quit working on it. The RM is an abstract document that is used by professional conceptual RA developers (of which there are about 10 in the world). This particular standard was... very popular with the people who wrote it... and not so popular with everyone else.

Initially, MomentumSI was the sole No Vote, but we pulled the vote so that the committee could just put it to bed and move on. Unfortunately, someone else voted no saying that the RM was so generic that it served no purpose. Well, they do have an interesting point... here's an interesting test, see if Client/Server architecture passes the SOA litmus test as defined by OASIS... Hmmm....

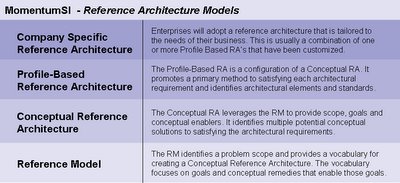

Again - 'architecture by committee' is a hard thing to do. I don't envy these guys. The next test that these guys have is to make up their mind on the RA. Will it be a Conceptual RA or a Profile Based RA?

Saturday, September 23, 2006

Reference Architecture Models

With the OASIS RM 1.0 up for vote, I've found myself discussing RM/RA vocabulary again... I found a Momentum view of how we differentiate between views and their usage.

I've heard people tell me that the OASIS Reference Model has not been helpful in creating their Customer Specific Reference Architecture. Well, that doesn't surprise me - it wasn't meant to be used in that way. A reference model establishes a scope, goals and a vocabulary of abstract concepts. It is used by professional Conceptual RA developers.

In RA land there is a process of moving from very abstract to somewhat concrete. It is an evolutionary process. However, if you skip layers you'll probably find yourself confused. Professionals from OASIS have informed me that they've lost some contributors in the process of creating their specification. It doesn't surprise me - RM's are theoretical work and not for everyone. I have also been told that they intend to publish a user's guide which hopefully will set the stage for its use patterns and anti-patterns.

Sunday, September 17, 2006

Inside-out or Outside-in

Sun created a platform when they wrote an aggregated set of specifications. They made their specifications API centric. It was an inside-out view. The next generation platform must be an outside-in view centered on protocols, formats, identifiers and service descriptions. It MUST be written using RFC 2119 format.

SOA, BPM, AJAX, etc. require a new integrated outside-in standardized platform. Until then, expect limited adoption or alternatively, enterprise rework.

SOA Acquisitions (revised list)

http://www.momentumsi.com/SOA_Acquisitions.html

A few interesting notes:

1. I couldn't be more disappointed in Cisco and their lack of acquisitions. AON failed (past tense). This could go down as one of the biggest blunders in software / hardware history. IMHO, Cisco should have revisited their executive leadership around AON a long time ago.

2. I've taken Service Integrity off the list. It appears as though they've shut down and didn't move the IP. This is disappointing as well - from what I've heard, many of the SOA ISV's were never even notified that the IP was up for sale.

3. WebMethods acquired Infravio. HP/Mercury/Systinet couldn't be happier. WEBM stock has been in the gutter for a long time. Infravio has a great product and a great team. It will be interesting to see if WEBM realizes that they need to move aside and let the Infravio team run their SOA direction.

4. I'm keeping SOA Software on both 'buy side' and 'sell side'. These guys have put together a pretty interesting package that keeps them in the pure play SOA infrastructure space. It's too clean. Someone will grab them.

5. I added a couple of 'client-side' guys to the list: ActiveGrid and AboveAll Software.

6. I added RogueWave (strong SCA/SDO story). I will probably add some more SDO / data service providers in the near future.

7. I added testing specialists iTKO and Parasoft (although I don't know who will buy them).

8. I added Logic Library. With the Flashline acquisition, these guys become an obvious target.

Shai on Enterprise SOA

http://www.sda-asia.com/sda/features/psecom,id,595,srn,2,nodeid,4,_language,Singapore.html

Saturday, September 16, 2006

BPM 2.1.4.7.3.5.7.43.2.6.8.9.4.3.5.8.4.1

http://www.looselycoupled.com/opinion/2003/schnei-bp0929.html

So - I hate the tag line BPM 2.0; it's so 2003. Ok, shame on me.

Here's why... it isn't about driving the software solution from a single angle. It isn't about the PROCESS. It isn't about the USER INTERFACE. It isn't about the SERVICES. It isn't about MODEL DRIVEN. It's about integrating all of these concepts without your head exploding.

BPM 2.0 places too much emphasis on the process.

Web 2.0 places too much emphasis on the UI and social aspects.

UML 2.0 ;-) places too much emphasis on making models.

SOA 2.0 places too much emphasis on the services.

Enterprise 2.0 places too much emphasis on... hell, being a marketing term.

Did I forget any?

Design Time Agility over Runtime Performance Cost

On occasion architects will get lucky and find new technologies and approaches that are not conflicting. It has been my experience that this is the exception not the norm. More common is the need to resolve competing interests such as 'agility' or 'performance'. And when they do compete, it is the job of the architect to give guidance.

The services found in your environment will likely be victim to two primary performance degrading elements:

1. They will be remote and will fall victim to all of the performance issues associated with distributed computing

2. They will likely use a fat stack to describe the services, like the WS-I Basic Profile.

Now that we've described the performance issues we have to ask ourselves, "will the system perform worse?" And the answer is, "not necessarily". You see, for the last few decades we've been making our software systems more agile from a design/develop perspective. When we went from C to C++ we took a performance/cost hit. When we went to virtual machines we took a hit. When we moved to fully managed containers we took a hit. And when we move to distributed web services we will take another hit. This is intentional.

A fundamental notion that I.T. embraces is that we must increase developer productivity to enable "development at the speed of business". The new abstraction layers that enable us to increase developer agility have a cost - and that cost is system performance. However, there is no need to say that it is an "agility over performance" issue; rather, it is a "system agility over performance cost" issue. By this I mean we can continue to see the same levels of runtime performance, but it will cost us more in terms of hardware (and other performance increasing techniques). Warning: This principle isn't a license to go write fat-bloated code. Balancing design time agility and runtime performance cost is a delicate matter. Many I.T. shops have implicitly embraced the opposite view (Runtime Performance Cost over Design Time Agility). These shops must rethink their core architectural value system.

Summary: The principle is, "Design Time Agility over Runtime Performance Cost". This means that with SOA,

1. You should expect your time-to-deliver index to get better

2. You should not expect runtime performance to get worse. Instead, you should plan on resolving the performance issues.

3. You should expect (performance/cost) to go down

Tuesday, September 12, 2006

MomentumSI on Reuse

http://www.momentumsi.com/SOA_Reuse.html

Feel free to use (or reuse) the graphics! If you choose not to REUSE the graphics you can SHARE this page by passing someone a link ;-)

Monday, September 11, 2006

Lessons from Planet Sabre

Planet Sabre was an application that focused on the needs of the Travel Agent. It allowed an agent to book flights, cars, hotels, etc. As you might imagine, these booking activities look quite similar to ones that might be done over the web (Travelocity) or through the internal call center. Hence, they were great candidates for services (they had a good client-to-service ratio).

I was assigned as the chief architect over a team of about 30 designers and developers. (BTW, I was like the 4th chief architect on the project). The developers were pissed that they received yet-another architect to 'help them out'. Regardless, they were good sports and we worked together nicely.

At Sabre, the services were mostly written in TPF (think Assembler) and client developers were given a client side library (think CLI). The service development group owned the services (funded, maintained and supported users). They worked off a shared schedule - requests came in, they prioritized them and knocked them out as they could.

The (consuming) application development groups would receive a list of services that were available as well as availability estimates for new services and changes to existing services. All services were available from a 'test' system for us to develop off of.

So, what were the issues?

The project was considered 'distressed' because of poor performance. Sounds simple, eh? Surely the services were missing their SLA's, right? Wrong. The services were measured on their ability to process the request and to send the result back over the wire to the client. Here, the system performed according to SLA's. The issue that we hit was that the client machine was very slow, and the client side VM and payload-parser were slow, as was the connection to the box (often a modem).

We saw poor performance because the service designers assumed that the network wouldn't be the bottleneck, nor would the client side parser - both incorrect. The response messages from the service were fatty and the deserialization was too complex causing the client system to perform poorly. In addition, the client application would perform 'eager acquisition' of data to increase performance. This was a fine strategy except it would cause 'random' CPU spikes where an all-or-nothing download of data would occur (despite our best attempts to manipulate threads). From our point of view, we needed the equivalent of the 'database cursor' to more accurately control the streaming of data back to the client.

Lesson: Client / consumer capabilities will vary significantly. Understand the potential bottlenecks and design your services accordingly. Common remedies include streaming with throttling, boxcarring, multi-granular message formats, cursors and efficient client side libraries.
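As a rough illustration of the 'cursor' remedy, here is a small Python sketch that hands results to the client one page at a time with an optional pause between pages, instead of an all-or-nothing download. It has nothing to do with the actual Sabre libraries; `fetch_in_pages` and its parameters are hypothetical, and the in-memory list stands in for results that would really come from a remote service.

```python
import time
from typing import Iterator, List, Sequence


def fetch_in_pages(records: Sequence[str],
                   page_size: int,
                   pause_seconds: float = 0.0) -> Iterator[List[str]]:
    """Yield records one page at a time, cursor style, instead of all at once."""
    if page_size <= 0:
        raise ValueError("page_size must be positive")
    for start in range(0, len(records), page_size):
        yield list(records[start:start + page_size])
        if pause_seconds > 0:
            # Crude throttle: wait before producing the next page.
            time.sleep(pause_seconds)


# Stand-in for a large service response.
flights = [f"flight-{n}" for n in range(7)]

# The client pulls only as much as it can parse and display at a time.
for page in fetch_in_pages(flights, page_size=3):
    print(page)
```

Because the generator is lazy, the consumer controls the pace: a slow client on a modem simply asks for the next page later, and nothing forces it to deserialize the whole result in one CPU spike.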

The second lesson was more 'organizational' in nature. The 'shared service group' provided us with about 85% of all of the services we would need. For the remaining 15% we had two options - ask the shared services group to build them - or build them on our own. The last 15% weren't really that reusable - and in some cases were application specific - but they just didn't belong in the client. So, who builds them? In our case, we did. The thing is, we had hired a bunch of UI guys (in this case Java Swing), who weren't trained in designing services. They did their best - but, you get what you pay for. The next question was, who maintains the services we built? Could we move them to the shared services group? Well, we didn't know how to program in TPF so we built them in Java. The shared services group was not equipped to maintain our services so we did. No big deal - but now it's time to move the services into production. The shared services group had a great process for managing the deployment and operational processes around services that THEY built. But what about ours? Doh!

Lesson: New services will be found on projects and in some cases they will be 'non-shared'. Understand who will build them, who will maintain them and how they will be supported in a production environment.

Planet Sabre had some SOA issues, but all in all I found the style quite successful. When people ask me who is the most advanced SOA shop, I'll still say Sabre. They hit issues but stuck with it and figured it out. The project I discussed happened almost 10 years ago yet I see the same issues at clients today.

Lesson: SOA takes time to figure out. Once you do, you'll never, ever, ever go back. If your SOA effort has already been deemed a failure it only means that your organization didn't have the leadership to 'do something hard'. Replace them.

Thursday, August 31, 2006

Supply Side and Demand Side SOA

Most of the companies that I've consulted to start with a 'supply side SOA strategy'. That is, they create a strategy to create a supply of services. As everyone in the manufacturing world knows, creating supply without demand is a really bad thing. Inventory that is not used is considered bad for a few reasons, the primary ones being:

1. You prematurely spent your money

2. As inventory ages, new demand-side requirements will be generated causing the current inventory to become outdated

Most manufacturing companies have moved to some variation of just-in-time production. They wait for customer demand before they build the products. You'd think that this would work for SOA but in many companies it isn't. The reason is simple. These companies do not have a demand-side generator (the sales and marketing engines). Demand-side SOA is a discipline that doesn't exist in many corporations.

Demand-side SOA requires a change in philosophy and process. For starters, you have to begin thinking about all of your services as products or SKU's. Products must be managed in a product portfolio. Products must be marketed to potential 'buyers'. Demand side application builders should have well defined processes to shop for SKU's with cross-selling capabilities. We need to kill the 'service librarian' and replace it with the 'service shopping assistant'. Obviously, we have to quit thinking in terms of 'registries'; 'catalogs' are better - but 'shopping carts' are probably even closer to target.

Demand-side SOA is currently in its infancy. Our ISV vendor community has largely failed us to date but it is only a matter of time before they catch up. In the meantime, it is the responsibility of the I.T. organization to begin changing philosophies and processes to think in terms of supply and demand economics.

Tuesday, August 29, 2006

Forking Web 2.0

Seriously. I have no need for Web 2.0 as it is being defined.

A few months ago I attended the MS Web 2.0/SOA think & posture event where a bunch of smart people overloaded the term Web 2.0 to meet their own needs, myself included. I almost forgot about the event until I caught a post by Gregor Hohpe (who impresses the hell out of me). Gregor attended yet another 'what the hell is Web 2.0' event and blogged about some attributes and tenets:

I'm convinced that Gregor is a freakin genius, so I really doubt if he missed the conclusion. In fact, the thinking was very similar to the Spark conference so I'm not surprised.

What just occurred to me is Web 2.0, as the world defines it, bores the living hell out of me. It's simple - I don't work for a consumer company like Amazon, Google or Yahoo - and as I look across my clients most of them don't need Web 2.0 functionality (as people are defining it).

What do I need? How do I want to overload the term? Easy. I am a SOA dude. I need a bad ass client framework for my services. I was hoping that the Web 2.0 guys were going to create a new client model - but they aren't. They're creating a social computing model - good for them, but it doesn't meet my needs. I need... a Collaborative Composite Client Platform.

What are the characteristics of a CCCP?

1. Obviously, it's client-side, asynchronous, message/service oriented and highly interactive.

2. It's designed for the Web but merges the application and document paradigm successfully (like http://finance.gooogle.com)

3. The componentized UI is self describing and viewable by a user (think 'view source' meets 'portlets').

4. The services called by the clients can be identified and reused. If a user doesn't want the UI they should be able to identify the services the client calls and use them instead.

5. Collaboration is a core tenet, not a feature.

6. Services are pre-compiled and available as-is; however, client compositions can be changed at runtime and the new configuration can be permanently saved (typical with modern portals).

If the Web 2.0 guys create their manifesto and it's a bunch of e-tail crap where the customer is king - I'm out. My interests are in creating a new programming model for the client that serves as a foundation for any domain. It's time to fork Web 2.0.

Sunday, August 27, 2006

Decoupling the Client and Service Platforms

The SOA programs that I've seen stall out typically did so because they failed to identify the composite applications that would consume the services. It sounds rather obvious - but it isn't about building or buying services. Value is created when business people use clients that leverage the services. I guess that's one of the reasons why I always try to call this paradigm 'Client-Service Computing', rather than SOA.

Recently I reviewed a few enterprise SOA reference architectures and noticed an unpleasant pattern. Architects were forgetting to put the 'client' on the architecture. I know - sounds silly. Really smart architects get so caught up in identifying the patterns, domains, interactions, practices and standards associated with services that they forget about the clients!

So - we have clients and services... and we decoupled them. I'll say it again - we decoupled them! This takes me to my next point. For legacy reasons architects are continuing to insist that the client platforms be tightly aligned to the service platforms (.Net on both, etc.). This is nonsense.

Many of the last generation client platforms were not optimized for service oriented computing. By this I mean that they don't easily accommodate the Web Service standards nor do they embrace 'contract first design' and in general - many of them just plain stink. The reason we use them is because analysts like Gartner told us to go with a single platform. It's time to decouple the client and the service platforms. The client platforms should be optimized around UI capabilities including collaboration and human-computer-interactions. This might mean using a strong Web 2.0 platform. My bottom line is that there is no need to continue building UI's using the same ole platforms. It's time to optimize for this computing paradigm.

Thursday, August 24, 2006

5 Years and 1 Hour

It occurred to me that it really took me 5 years and 1 hour. It took 5 years of research and thinking about SOA and one hour to write it down.

Sometimes I cringe when I hear enterprise customers say that they're going to do their SOA effort internally and not learn lessons from people who've already put in the 5 years.

Am I trying to promote SOA consulting services? I guess I am. You know why?

1. I make my living by delivering SOA consulting services

2. SOA is the most extensive offering I've ever seen in I.T. and quite frankly, I think people who try to go it alone - or by reading a Gartner report are going to fail.

It's that simple.

Monday, August 21, 2006

SOA Posers - Move Over

This is great news. For years it was just annoying evangelists. Now, the discussion has moved to the real questions. The troops are involved and they need to know how to do it. In many organizations this shift has already happened.

The media and sellers need to catch up. The array of conferences and WebEx's on "Funding SOA" or "What is SOA?" needs to come to an end. POSERS GET OUT OF THE WAY. It is time for the architects, designers, programmers and testers to actually DO IT.

Monday, July 31, 2006

SOA: Are you Hot or Not?

Discussing REST style services [NOT]

Building REST style services [HOT]

Debating the merits of UDDI [NOT]

Building out your service taxonomy [HOT]

I.T. doing SOA without business alignment [NOT]

Business driven SOA [HOT]

Implementing proprietary mediation tools [NOT]

Implementing standards based, federated mediation tools [HOT]

Designing one-off WSDL's [NOT]

Performing Service Oriented Information Engineering [HOT]

Debating the definition of SOA [NOT]

Creating a strategy and plan to take advantage of SOA [HOT]

Forcing last-gen object oriented methods on a SOA world [NOT]

Updating the SDLC to take advantage of SOA [HOT]

Web 2.0 as a means to create community [NOT]

Web 2.0 as SOA composition strategy [HOT]

SOA product company evangelists [NOT]

War stories from the SOA trenches [HOT]

Blogging about SOA [NOT]

Doing SOA [HOT]

Saturday, July 15, 2006

Concern Oriented Architecture

I'll temporarily define COA as the emerging discipline of separating the technical concerns and identifying remedies to those concerns in the form of engines, components, domain specific languages and other repeatable solutions. COA focuses on the technical domains (presentation, data, business logic, etc.), identifying where they are divided and how they are recombined.

As an example, one enterprise I recently visited with had the following initiatives listed as part of their SOA program:

- Create a virtual data layer across all enterprise data sources

- Break out all process logic into a new layer

- Verify that integration logic doesn't exist inside of applications

- (more...)

The concept of separating presentation logic from business logic or business logic from data logic is well known. However, more and more emphasis is now being placed on the separation of process logic and integration logic from the aforementioned elements. The science of separation of concerns is continuing to evolve. This is good stuff, but is it part of an SOA program?

The SOA efforts that I see often have more to do with COA than they have to do with SOA. Obviously, this is due to the attention and funding that SOA initiatives are receiving. I'm ok with mixing the dollars but what is clear to me is that many of these organizations aren't even aware that they're doing this. They just think it's all SOA.

And of course there's a reason why brilliant enterprise architects are taking SOA dollars and spending them on COA initiatives. They realize that the ROI of SOA is multiplied when it is combined with COA. So where do I land on all of this? I guess my bottom line is that the analysts, press and other influencers need to do a better job of separating these efforts (our own little separation of concerns). SOA and COA need to be managed as two different endeavors that have points of intersection. Failures in COA shouldn't be attributed to SOA or vice-versa, nor should successes.

Saturday, July 01, 2006

The Four Buckets of SOA Adoption

However, I'm noticing something really interesting about SOA adoption. You can put the adopters in 4 buckets:

1. Companies that don't do SOA and have no intention of doing it

2. Companies that don't do SOA but keep talking about it and have mastered the blame game

3. Companies that do SOA, but poorly

4. Companies that do SOA and reap huge benefits

Bucket #1

Bucket #1 companies are often busy with compliance, security, BI or other important initiatives. They had the common sense to not do SOA half-assed and have merely delayed the program.

Bucket #2

I recently attended an architects meeting of a certain industry. I witnessed 40 chief architects patting each other on the back about their lack of understanding around SOA. Only 1 in the group had done a roadmap, created a governance program and was demonstrating real results. The others made me want to puke; not because they were Bucket #1 people, but because they were bucket #2. I heard crap like, "SOA is just EAI - and we're already doing that..." - WHAT? What are you talking about?? I wanted to slap them. Honest to God. I witnessed the single biggest threat to SOA - incompetent, lazy, American, corporate architects whose skills were so dated they should go back to their 3270 terminals and program in REXX. I suddenly felt sorry for the CIO.

Bucket #3

These are the guys who have SOA programs, spend money and don't get anywhere. The problem here is almost always the same. Bucket #3 organizations have a whip-smart EA team who can rattle off every WS-* protocol (and usually do). The problem is that they live in EA land and have no ability to get application architects, developers, etc. on board. So what do they do? The EA's just keep marching blindly to the SOA drum. They act like their piece of crap SOA reference models justify their existence. They fail to actually 'realize' the models, so a line architect says, "We're thinking about doing this project using a service oriented style. You guys have been working on SOA for a couple of years; what do you have that our project can leverage?" The EA then hands them a completely non-actionable PowerPoint with a bunch of SOA diagrams that they modified from OASIS (which sucked in the first place). The recipient of this information looks at them with disgust, walks back to his project team and reports, "The jokers in EA still don't have anything we can use."

Bucket #4

Bucket #4 is the really interesting one. They succeed. The question is how? I've scratched a bald spot on my head and think I've got some answers. Here are the characteristics:

1. They have a single person ultimately responsible for SOA who leads a larger steering committee. This group also attacks organizational and process changes.

2. They created a roadmap with realistic deadlines.

3. They have an EA group where all of the members understand SOA and understand the practical needs of the application architects with regard to SOA.

4. The Application Portfolio Governance group has created a "SOA work stream" and selected new applications are sent down an SOA path.

5. The downstream teams (project managers, analysts, app. architects, designers, coders & testers) have all been trained in SOA.

Overall, I'm having a blast. As you can imagine I'm trying to focus my time on the winners. On occasion I'll take the time to let losers know that they're on a losing path, but that usually doesn't have much effect.

Friday, May 26, 2006

Tim O'Reilly Kills Web 2.0(sm)

1. Tim will return from vacation and shit in his drawers when he learns of the controversy

or

2. Tim will stand by his team and the term Web 2.0 will die.

All that I ask is that someone take a picture of Tim right after he finds out about the controversy and post it.

:-)

Tuesday, May 23, 2006

WiPro to compete with BPM ISV's

"Flow-briX is a complete BPM framework available in both J2EE and .NET platforms. With its unique customizability approach, we have been instrumental in offering best-in-class BPM /Workflow solutions to diversified market segments starting from Banking to Telecom, Media, Health Care and so on."

http://www.wipro.com/webpages/itservices/flowbrix/index.htm

Traditionally, this is a really bad move - I don't expect anything to be different this time.

Friday, May 12, 2006

SOA Testing

I recently had an interaction with Wayne Ariola at Parasoft, where he offered the following advice:

The key points from my point of view:

1) From a quality point of view the test driven process requires a SOA Aware testing framework. This means that the framework must be aware of the intermediary rich environment as well as the distributed nature elements required for the overall business process.

2) As the title of the presentation suggests, the onus of quality resides with development. Ensuring that new versions conform to published interfaces cannot be a QA function - in fact development must take an additional step to understand the impact of the change versus the regression suites as well as the overall business process. This business service orientation and the iteration/evolution of services will not be as convenient for QA to test as they are used to in an application centric environment.

3) Connection to the code. This is the big bang! Development must not only ensure that the service version is robust but must also tie the message layer changes to suite of code level regression tests.

The Parasoft SOA Quality Solution is built on an SOA Aware test framework that allows the user to exercise the message layer for security, reliability, performance and compliance as well as drive code level component tests from the message layer by automatically creating Junit test cases.

This got me thinking - what are the Golden Rules of SOA testing? Well, I think I've got my list which I intend to share at the Infoworld SOA event next week. Should be fun!
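To make Wayne's second point a little more concrete, here is a minimal sketch of a development-side regression check that a new service version still conforms to its published interface. It is only an illustration: the contract format, the `conforms` helper and the quote-service field names are all hypothetical, and none of it reflects how Parasoft's product or any WSDL tooling actually works.

```python
# Hypothetical published contract: field name -> required Python type.
PUBLISHED_CONTRACT = {
    "symbol": str,
    "price": float,
    "currency": str,
}


def conforms(message, contract):
    """Return a list of violations; an empty list means the message conforms."""
    violations = []
    for field, expected_type in contract.items():
        if field not in message:
            violations.append(f"missing field: {field}")
        elif not isinstance(message[field], expected_type):
            violations.append(f"wrong type for {field}")
    return violations


# Made-up responses: v2 adds a field (still conforms), v3 renames one (breaks).
response_v2 = {"symbol": "ACME", "price": 19.5, "currency": "USD", "exchange": "X"}
response_v3 = {"ticker": "ACME", "price": 19.5, "currency": "USD"}

print(conforms(response_v2, PUBLISHED_CONTRACT))
print(conforms(response_v3, PUBLISHED_CONTRACT))
```

A check like this would sit in the developer's regression suite, so a breaking rename is caught before the new version ever reaches QA.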

Thursday, May 11, 2006

The SOA Hype Cycle

IMHO, we are still early in the cycle - very early. We're nowhere near hitting the bottom. Prepare for more smack talk - more crapping on SOA, especially from vendors who don't have products to meet the market or enterprises who were too stupid to figure it out.

I am predicting that after rock bottom is hit, the uplift will be much quicker than most. The reason is simple: SOA has a network effect. When the service network hits critical mass the value of the network is too high to ignore. Most organizations aren't there yet - they have 10 to 50 services (and 11-51 clients). The reuse numbers will stink for some time. Like a network - you'll know when it hits critical mass - when it's too big to ignore.
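A rough way to see why the reuse numbers stink early on is to count the average number of clients per service. The sketch below assumes the "10 services, 11 clients" case means roughly one client per service; the `reuse_ratio` helper and all of the numbers are made up for illustration.

```python
def reuse_ratio(bindings):
    """Average number of distinct clients per service.

    `bindings` maps a service name to the set of clients that call it.
    """
    if not bindings:
        return 0.0
    total_clients = sum(len(clients) for clients in bindings.values())
    return total_clients / len(bindings)


# Early stage (hypothetical): 10 services with 11 client bindings in total.
early = {f"svc{i}": {f"client{i}"} for i in range(10)}
early["svc0"].add("client10")

# Later stage (hypothetical): the same 10 services, each called by 6 clients.
later = {f"svc{i}": {f"client{j}" for j in range(6)} for i in range(10)}

print(reuse_ratio(early))
print(reuse_ratio(later))
```

With these made-up numbers the early ratio is barely above one client per service, which is why reuse looks poor until the network fills in.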

If you're interested in the actual hype/activity curve, check out:

http://www.google.com/trends?q=soa

Tuesday, May 09, 2006

SOA Software Acquires Blue Titan

First, I'd like to congratulate Frank Martinez (aka, "Slim"). I'd also like to congratulate the people over at SOA Software for seeing and acting on this opportunity. Those who have worked with Blue Titan over the years realize that they have a multi-part value proposition: 1. The value of their current product suite, 2. The value of their vision. SOA Software acquired both product and vision.

The on-going consolidation in this market is good for everyone. We now have fewer vendors with better product suites enabling customers to buy integrated solutions. What remains unclear is who the big winners in the space will be; who will be the Mercator of the space? Who will be the Tibco?

SOA Software has assembled a set of product technologies that will enable them to compete on the multi-part RFP's that are floating around. However, their suite of tools now has overlap with many of the pure play vendors (SOA Mainframe Enablement, SOA Security Devices, SOA Mediation, SOA Monitoring, etc.) Recently, the game for small companies has been one of 'partnering in the ecosystem'. Guys like Systinet, Actional, Parasoft and GT Software have shown their ability to pull together to promote their individual and collective causes. However, the rapid consolidation is diminishing the ability for the small companies to "eco-partner" due to their new parent companies having overlap in their portfolio (Mercury, Progress, BEA, etc.) We are close to reaching the point where *enough* of the SOA mass has moved from pure play to conglomerate. When this happens the eco-partner system falls apart due to lack of critical mass and value proposition. This sets the stage for phase 2 of the battle - the battle of the conglomerates.

With the acquisition of Blue Titan, SOA Software may have bought some industrial strength software - but let's get real, what they really bought was a mean general to fight the real war.

Friday, April 21, 2006

*-First Development

And once again, I had the same-ole discussion about how in SOA we would be creating interfaces completely separate from implementation – often with different groups of people – in different parts of the world – leveraging different reusable message components that would be related back to services front-ending multiple implementation platforms. He got it. ‘Microsoft First’ won’t work in an enterprise setting.

This discussion prompted me to ask some of our consultants if they were having good results with ‘WSDL First’ and the responses were positive. However, one consultant communicated that he has been pushing this concept into the requirements gathering phase. He called it, ‘Spreadsheet First’. Most I.T. Business Analysts will not be comfortable with creating WSDL’s and we continue to have an ‘analysis impedance mismatch’ between ‘legacy, silo-oriented Use Cases’ and ‘client cases / service cases’. His solution was to use a simple spreadsheet to lay out the operations, messages and constraints. He would then use the spreadsheet as input in the design phase, where he’d bundle the operations into port types and services and translate the field types.
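As a rough illustration of the 'Spreadsheet First' idea, here is a minimal sketch that reads an analyst's spreadsheet (exported as CSV) and bundles the operations into port types and services. The column names, the operations and the `DESIGN_BUNDLES` mapping are all hypothetical, and the field type translation step is left out.

```python
import csv
import io
from collections import defaultdict

# Hypothetical analyst spreadsheet, exported as CSV: one row per operation.
SPREADSHEET = """operation,input_message,output_message,constraint
getCustomer,GetCustomerRequest,GetCustomerResponse,customerId required
findCustomers,FindCustomersRequest,FindCustomersResponse,max 100 results
updateAddress,UpdateAddressRequest,UpdateAddressResponse,postalCode validated
"""

# Hypothetical design-phase decision: operation -> (service, port type).
DESIGN_BUNDLES = {
    "getCustomer": ("CustomerService", "CustomerLookup"),
    "findCustomers": ("CustomerService", "CustomerLookup"),
    "updateAddress": ("CustomerService", "CustomerAdmin"),
}


def bundle_operations(csv_text, bundles):
    """Group spreadsheet rows into {service: {port_type: [operation rows]}}."""
    services = defaultdict(lambda: defaultdict(list))
    for row in csv.DictReader(io.StringIO(csv_text)):
        service, port_type = bundles[row["operation"]]
        services[service][port_type].append(row)
    return services


services = bundle_operations(SPREADSHEET, DESIGN_BUNDLES)
for service, port_types in services.items():
    print(service)
    for port_type, operations in port_types.items():
        names = ", ".join(op["operation"] for op in operations)
        print(f"  {port_type}: {names}")
```

The analyst only ever touches the spreadsheet; the bundling decision stays with the designer.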

One of the things I really like about SOA is having the service as the unit of work. A service is right-sized for requirements gathering and specification. It is also right-sized for ‘Test Driven SOA’, but we’ll save that for another day…

Sunday, April 09, 2006

Is Ruby Ready for the Enterprise?

http://www.momentumsi.com/about/accessarchive.html

Saturday, April 01, 2006

You're so Enterprise...

He goes on to say, "Thus, allow me to make this perfectly clear: I would be as happy as a clam never to write a single line of software that guys like James McGovern found worthy of The Enterprise."

Recently, I asked some EA's to comment on this line of thinking. Putting the immature insults aside, were there valid points to be taken away?

Here is what I heard:

1. First, we can't just lump RSS, REST, RoR into the same bucket - each technology must be evaluated independently.

2. RSS can be viewed as an overlay network and has already proven to scale to the needs of the largest enterprises in the world. However, groups like MS have identified shortcomings and made feature extensions. This pattern will likely continue.

3. REST, as the principal foundation of the Web, has proven that it too can scale - but the areas where it has proven to scale the best are related to the movement of unstructured HTML documents using a constrained set of verbs. Others will note the success of RESTian API's in leading Web companies like Amazon. Most EA's were quick to acknowledge that the WS-stack is fat and that the lack of ubiquitous deployment was creating a larger than usual demand for simple RESTian style development. The delay of Microsoft Vista didn't help. EA's remain concerned that the lack of published resolutions to the non-functional concerns (security, reliability, transactional integrity) for RESTian projects will lead either to one-off implementations across the global enterprise, creating a deferred mediation problem, or to a set of RESTian specifications that closely resemble the WS-specs, as both attempt to satisfy the same use cases. The conclusion was that RESTian principles will make significant waves in the enterprise because of the solid foundation and the ability to add more 'enterprise crap' on it if they need to.

4. Ruby on Rails - Generally speaking most of the EA's I've spoken with understood the power to quickly create web apps with RoR. One was quick to note, "if time to market was my primary concern, we'd use Cold Fusion, rarely is that the case." It was clear that many EA's were uneasy talking about RoR; they lacked data and were turned off by the comments that David Heinemeier Hansson had made. They suggested that Ruby was definitely interesting but were unsure if the RoR team was aware of the political nature of the enterprise - and the need to satisfy unique requirements. Most EA's were interested in finding a place to do 'departmental RoR' and kicking the tires. They were also interested in the long term potential of Ruby, RoR aside.

---- Part II

In regard to the comment that Dare had made, "If you are building distributed applications for your business, you really need to ask yourself what is so complex about the problems that you have to solve that makes it require more complex solutions than those that are working on a global scale on the World Wide Web today." I tried to have a conversation with several architects on this subject and we immediately ran into a problem. We were trying to compare and contrast a typical enterprise application with one like Microsoft Live. Not knowing the MS Live architecture we attempted to 'best guess' what it might look like:

- An advanced presentation layer, probably with an advanced portal mechanism

- Some kind of mechanism to facilitate internationalization

- A highly scalable 'logic layer'

- A responsive data store (cached, but probably not transactional)

- A traditional row of web servers / maybe an Akamai thing thrown in

- Some sort of user authentication / access control mechanism

- A load balancing mechanism

- Some kind of federated token mechanism to other MS properties

- An outward facing API

- Some information was syndicated via RSS

- The bulk of the code was done in some OO language like Java or C#

- Modularity and encapsulation were encouraged; loose coupling when appropriate

- Some kind of systems management and monitoring

- Assuming that we are capturing any sensitive information, an on the wire encryption mechanism

- We guessed that many of the technologies that the team used were dictated to them: Let's just say they didn't use Java and BEA AquaLogic.

- We also guessed that some of the typical stuff didn't make their requirements list (regulatory & compliance issues, interfacing with CICS, TPF, etc., interfacing with batch systems, interfacing with CORBA or DCE, hot swapping business rules, guaranteed SLA's, ability to monitor state of a business process, etc.)

At the end of the day - we were scratching our heads. We DON'T know the MS Live architecture - but we've got a pretty good guess on what it looks like - and ya know what? According to our mocked up version, it looked like all of our 'Enterprise Crap'.

So, in response to Dare's question of what is so much more complex about 'enterprise' over 'web', our response was "not much, the usual compliance and legacy stuff". However, we now pose a new question to Dare:

What is so much more simple about your architecture than ours?

Saturday, March 25, 2006

My Enterprise Makes Your Silly Product Look Like A Grain of Sand

The funny thing about a lot of the people who claim to be 'Enterprise Architects' is that I've come to realize that they tend to seek complex solutions to relatively simple problems. How else do you explain the fact that web sites that serve millions of people a day and do billions of dollars in business a year like Amazon and Yahoo are using scripting languages like PHP and approaches based on REST to solve the problem of building distributed applications while you see these 'enterprise architect' telling us that you need complex WS-* technologies and expensive toolkits to build distributed applications for your business which has less issues to deal with than the Amazons and Yahoos of this world?

Dare goes on to say:

I was chatting with Dion Hinchcliffe at the Microsoft SPARK workshop this weekend and I asked him who the audience was for his blog on Web 2.0. He mentioned that he gets thousands of readers who are enterprise developers working for government agencies and businesses who see all the success that Web companies are having with simple technologies like RSS and RESTful web services while they have difficulty implementing SOAs in their enterprises for a smaller audience than these web sites. The lesson here is that all this complexity being pushed by so-called enterprise architects, software vendors and big 5 consulting companies is bullshit. If you are building distributed applications for your business, you really need to ask yourself what is so complex about the problems that you have to solve that makes it require more complex solutions than those that are working on a global scale on the World Wide Web today.

I guess that this gets to my point. Most people who have never seen a large enterprise architecture have no concept of what it is. I'm not trying to imply that Dare is one of them, but stating that RSS and REST are cures to enterprise grade problems seems a bit myopic. So, to Dare's point - why is it more complex to do 'enterprise grade' over 'web grade'? This is the million dollar (or perhaps billion dollar) question.

Of course I have my views, but what are yours? Send me your thoughts (jschneider-at-momentumsi.com) and I'll recompile for publishing. My gut tells me that there is a pretty good argument to be had here - if Dare is right, I want to be the first to advise my customers. If he's wrong, well - I want to advise my customers of that too!

Friday, March 24, 2006

The Web is the Application

http://www.google.com/finance?q=WEBM and realized that what used to be a static html page was now a highly interactive application. Of course, I am referencing all of the AJAX functionality that has been added. In many of our current AJAX apps, the focus has been to convert classic applications like email, word processing and spreadsheets into Web 2.0 apps.

The conversion of 'traditional' web pages to interactive apps deserves special attention. This is the science of blending information based on context, degrees of separation and providing the ability to quickly pivot the view. It seems as though there is a point where a web page is no longer really a web page but rather a contextually bound application. The art of blending information, informational views, navigation and functionality will likely be a critical talent of the next gen application developer.

Wednesday, March 22, 2006

Redneck SOA

http://schneider.blogspot.com/2002_12_01_schneider_archive.html#90283350

Sunday, March 19, 2006

Client Service Computing

A second term that I've been using is "Service Network Computing" which focuses on the plumbing that makes hokey Web 2.0 applications capable of performing 'business critical' applications.

A View of Competing Interests and Concerns

We always have to balance the needs of the business with the needs of the user. Our next generation architectures will be funded to enable next generation products, services, business models and value chains. And both User and Business will have architectural requirements which must be met. Perhaps taking a secondary seat are the needs of the developer. The hokey use of DHTML, AJAX, etc. perhaps is the most obvious indicator - we're jumping through hoops to meet the needs of users and business - IMHO, the way it should be until we get next-generation tooling.

Web 3.0 Maturity Model

The conversation has been a bit chaotic. It is clear that we are covering a lot of ground - perhaps too much ground. Architecting the future using terminology that is not baked isn't easy. All of the participants come from different backgrounds and have different views of what SOA is - what SaaS is - what Web 2.0 is. And no, we weren't able to find the silver bullet. I think we did make progress in finding some common ground about what is 'new' and 'different'.

The conversation has inspired a few thoughts around the aforementioned subjects. Again - no answers - perhaps more questions.

One of the discussion topics was Web 2.0 - although there was no conclusion on the tenets/philosophies of Web 2.0 a handful of attributes were repeated: User Centric, Self Service, Collaborative, Participative, Communal Organization, Lately Bound Composition, etc.

This led to yet another discussion about lack of 'mission critical' capabilities of the Web 2.0 and started the discussion of Web 3.0 - perhaps focusing more on the needs of the corporation. I put together the following illustration as a way to frame a discussion around the Web X.0:

What are the technical barriers?

What were the technical enablers?

What new capability did this enable the user or business?

Tuesday, February 14, 2006

Web Me Too, Oh...

Yes, I failed to fill out the proper paperwork and declare myself an official Web 2.0 guy prior to the November 2005 deadline. Now I must wear that humiliating "me too" badge.

I've been told that I'm not allowed to skip directly to Web 3.0 due to some amendment that Tim O'Reilly put into law. Apparently, Web 3.0 will be released but only at a secret conference of uber geeks that write mean things about Microsoft and donate to EFF.

In the meantime - I too am attending SPARK. Let's get real... we've got a major shift going on in the presentation layer, collaboration, content management/distribution and self-service programming. The discussion has shifted from REST, WS-*, etc. to client consumption models, combining multiple services, utilizing markdown languages and light weight scripting languages.

It is very clear to me that the Web 2.0 movement will drive the demand for SOA. Perhaps more importantly, Web 2.0 will mandate the technical requirements for SOA. The Web 2.0 manifesto is the requirements document for SOA 2.0.

Saturday, February 04, 2006

More SOA Consolidation...

Here is the updated SOA acquisition list...

Now, for a prediction - we'll see one more acquisition in February and two more in March. They'll be in three different spaces, one in each: "Run-time Monitor & Mediate", "Governance" and "Registry/Repository".

Tuesday, January 31, 2006

The Sheep that Shit Coleslaw

Peers

In peer-oriented systems, a node is considered a 'peer' when it is both a consumer AND a producer. In a network, it is advantageous to have nodes not only consume value from the network, but also produce value back to the network. The more producers on a network, the more valuable the network is for consumers.

Non-Recombinant Systems

Traditional applications were often designed whereby the ultimate consumer was hard-coded at design time, and that consumer would be a human - and the vehicle would be some kind of graphical rendering. This is most likely a tradition held over from mainframe and client/server days. Today, many systems continue to fall victim to the same design center. Architects continue to assume that the data or functions would never be needed by anyone but a human and that whatever tool they built would be the final tool for consumption. In essence, the designers failed to anticipate future consumption scenarios that may have been out of scope of the 'Use Cases' that they built their system for.

Recombinant Systems

When an application is designed so that its functions or data can be discovered and used, we say that the application is 'highly recombinant'. This means that the services that are offered can:

1. Easily be found (often on the screen that used them) and

2. Easily be called (the barrier to usage is low).

Applications that are highly recombinant offer the potential for reuse, or sharing. These applications do not assume to know the future uses of the functions or data. The fundamental notion is that the true uses of the functions/data will not be known until after the system is put into production.

As we move into a service oriented world, we have the potential of serving up two interfaces: one for human consumption (the screen) and one for machine consumption (the service).
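Here is a minimal sketch of that two-interface idea: one function backs both a screen for humans and a service for machines, and the screen itself advertises where the service lives. The order data, the `/orders.json` URL and the function names are hypothetical, and plain JSON stands in for whatever service format you actually use.

```python
import json
from html import escape

# Hypothetical data and URL; neither comes from a real system.
ORDERS = [{"id": 1, "item": "carrots"}, {"id": 2, "item": "cabbage"}]
SERVICE_URL = "/orders.json"


def get_orders():
    """The single function behind both interfaces."""
    return ORDERS


def render_screen():
    """Human interface: HTML that also advertises the machine interface."""
    rows = "".join(f"<li>{escape(o['item'])}</li>" for o in get_orders())
    link = f'<a href="{SERVICE_URL}">Use this data as a service</a>'
    return f"<ul>{rows}</ul>{link}"


def render_service():
    """Machine interface: the same data, serialized as JSON."""
    return json.dumps(get_orders())


print(render_screen())
print(render_service())
```

Because the link sits on the screen that uses the data, the service is easy to find, and because it returns plain structured data, the barrier to calling it is low.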

The key is to make sure that the people who use the systems can easily gain access to the services. As an example, it is common for Blogs to identify their feeds via the classic RSS image:

Just as easily, we can tag our more structured SOAP services with the WSDL image.

YOU WILL EAT CARROTS!

Architect - "You will eat carrots!"

User - "But I want coleslaw..."

Architect - "You will eat cabbage!"

User - "But I want coleslaw..."

Enterprises and SaaS vendors must not only acknowledge that we are moving into an era of recombinant platforms but they must also take the necessary steps to enable the mashups, remixes, composite applications or whatever you want to call them. The future of software is about the creation and utilization of building blocks. It is about letting our users play with their Lego's. It is about allowing them to make coleslaw from their carrots and cabbage. It is about productivity and creativity. We do it for a few reasons: it lowers new software development costs and significantly reduces maintenance expenses, while simultaneously giving the users what they wanted.

You can have your carrots and coleslaw too.

Saturday, January 28, 2006

SOA Pilot Program

IMPLEMENTING A SUCCESSFUL SOA PILOT PROGRAM... A WEBINAR ON FEBRUARY 2nd, 2006 at 2pm EST/11am PST

As you work to extend IT capabilities utilizing SOA, a key for many organizations is a pilot program which enables the greater understanding and methodological deployment of Web services and SOA around a structured initiative. Join us as executives from MomentumSI and Actional will present best practices for selecting and implementing a successful SOA Pilot:

- Determining the Overall Goals of an SOA Initiative

- Criteria for a SOA Pilot

- Case Study of Successful Pilot Program

- Next Steps to an Enterprise SOA

This Presentation clearly identifies the axioms and best practices that underlie achieving SOA objectives...

WHO SHOULD ATTEND: IT Executives, Enterprise Architects, Business Line Managers and Project Managers who are using or planning to use Web services or creating an SOA! Webcast will start promptly on February 2nd at 2:00pm EST/11am PST.

REGISTER HERE

Wednesday, January 25, 2006

The Value of Reuse in SOA

See:

http://www.momentumsi.com/SOA_Reuse.html

Sunday, January 22, 2006

SOA Contest - Early Results

I also published the names of a few Microsoft products that likely have WSDL's.

Come on Microsoft - we're on year 5. Surely you've got more than 4 WSDL's???

Thursday, January 19, 2006

SOA Consolidation

We've noticed that the 'acquisition trail' is becoming more and more complicated, so we've put together a cheat-sheet to help you out:

SOA Consolidation

And congratulations to the teams at Actional and Systinet!

Sunday, January 15, 2006

Microsoft SOA Contest

MomentumSI is sponsoring a contest to see if anyone can locate the Microsoft WSDL's! Yes - anyone can win an SOA Tee Shirt, even Ray Ozzie!

All you have to do is:

- Find a supported WSDL from ANY current Microsoft product (hosted or shrink-wrap)

- Send me (jschneider at momentumsi.com) the name of the product and the WSDL as well as where you found it (URL, etc.)

Rules:

- The product has to be from Microsoft, the most current version and supported

- You can send as many WSDL's as you want (but you'll only win once)

- Only the first person to send me the WSDL wins

- I only have about 100 tee shirts left - when I'm out of tee shirts the contest ends.

- Don't send anything confidential.

When "The Great Microsoft WSDL Hunt" is over, I'll publish the results.

SOA-WS and CORBA

Differences:

- ALL major infrastructure vendors are supporting the Web services stack - do you remember when Microsoft threw CORBA under the bus?

- ALL major application vendors are moving their ERP/CRM/etc. products to a service-based platform; how many application vendors redesigned their products for CORBA?

- THE major desktop productivity suite, Microsoft Office, will be capable of locating, publishing and consuming web services; I don't remember office productivity doing much with CORBA...

- SOA-WS has put significant emphasis on long-running and asynchronous programming models, enabling B2B activity. Did CORBA do much in B2B?

- SOA-WS has put much more emphasis on network routing - enabling 'virtual services' and in-the-network transformations, mitigating the 'versioning problem'. These are lessons learned from the CORBA days...
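To show what I mean by a 'virtual service' mitigating the versioning problem, here is a minimal sketch in which an intermediary upgrades old-format requests in the network, so only one real implementation has to be deployed. The message shapes and function names are hypothetical, and plain dictionaries stand in for SOAP messages.

```python
def upgrade_v1_to_v2(message):
    """In-the-network transformation: reshape a v1 request into the v2 shape."""
    first, _, last = message["name"].partition(" ")
    return {"version": 2, "first_name": first, "last_name": last}


def real_service_v2(message):
    """The only implementation actually deployed; it accepts v2 messages only."""
    return f"Hello, {message['first_name']} {message['last_name']}"


def virtual_service(message):
    """Intermediary endpoint: old clients keep calling it with v1 messages."""
    if message.get("version") == 1:
        message = upgrade_v1_to_v2(message)
    return real_service_v2(message)


print(virtual_service({"version": 1, "name": "Ada Lovelace"}))
print(virtual_service({"version": 2, "first_name": "Ada", "last_name": "Lovelace"}))
```

Both calls reach the same v2 implementation, and the v1 client never has to change.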

I'll leave the discussion on IDL/IIOP/Common Facilities/etc. to the experts...

Legacy Architects and SOA

I often find myself attending meetings with 'legacy architects'. You know the kind - the guys that love to remind you that 'nothing is new' - hence, we shouldn't expect any new results.

Thoughts...

1. 'Legacy Architects' or 'Last Gen Architects', if you prefer, remain quite ignorant about SOA-WS.

2. In a paradigm shift, 'Pessimistic Architects' have a much higher likelihood of failure than 'Optimistic Architects'.

I don't mind ignorant architects - however I have a true disdain for perpetually ignorant architects - the kind who refuse to learn what they don't know. These people can kill an SOA program. Find them - remove them if you can, contain them if you can't.

Pessimistic architects are good; perpetually pessimistic architects are bad. If the people leading your SOA program don't believe in SOA - you are likely doomed. Understanding the limitations of a computing model doesn't take a great architect - quite frankly, any half-assed architect can find holes in any model. Great architects are the ones that mitigate holes while getting the entire organization completely pumped up about making the transition. Great architects push the acceptance over the chasm - past critical mass. They will have plenty of arrows in their back - mostly shot from the bows of 'perpetually ignorant architects'.

Saturday, January 07, 2006

Web Service Architecture Tenets

What are the goals? What are the rules?

These are tough questions that many people in the industry have attempted to answer. Some time back, Frank Martinez and I wrote our view:

Web Service Architecture Tenets

I'd love to hear your feedback.

Monday, January 02, 2006

My Favorite Prediction

4. 'Enterprise' follows 'legacy' to the standard dictionary of insults favored by software creators and users. Enterprise software vendors' costs will continue to rise while the quality of their software continues to drop. There will be a revolt by the people who use the software (they want simple, slim, easy-to-use tools) against the people who buy the software (they want a fat feature list that's dressed to impress). This will cause Enterprise vendors to begin hemorrhaging customers to simpler, lower-cost solutions that do 80% of what their customers really need (the remaining 20% won't justify the 10x -100x cost of the higher priced enterprise software solutions). By the end of 2006 it will be written that Enterprise means bulky, expensive, dated, and golf.

What David identifies is the growing distinction between classes of applications in the enterprise. Many, if not most, applications in the enterprise DON'T NEED TO BE ENTERPRISE GRADE. The function that they perform - isn't that important, doesn't require .99999 up-time, DOD-grade security, etc.

But let's get serious - Dell isn't going to be running its order management system from some Web 2.0 SaaS startup - nor will e-Trade be hosting customer financial data in some "non-enterprise" way. People who don't do enterprise development don't understand enterprise development; there are a million blogs out there to prove this. An enterprise can lose more money in one day because of a system outage than the combined revenue of every SaaS / Web 2.0 company.

Before 'enterprise Java', the term 'enterprise' referred to the scope of the deployment - not the architecture. 'Mission Critical' was the term we used to indicate a high degree of architectural integrity. David (and many others) are continuing to restate the obvious: we need multiple levels of architecture: light, medium, heavy. We need architectural profiles for each level. Our enterprise architecture teams MUST NOT force one design center for all application classes.

Is there a place for RoR, Spring, RSS, etc. in the enterprise? You bet. Will lightweight solutions eat away at their heavyweight counterparts? Yep. Is the 'enterprise' grade solution going to mean "bulky, expensive and dated" in 2006? Well, yeah - it has meant that for years!

Sunday, January 01, 2006

My 2006 Predictions

1. Virtually no enterprise software companies will 'go public' in 2006.

2. U.S. based venture capital will continue a downward trend with enterprise software leading the pack.

3. An abnormally high number of small ISVs will fail to raise their next round of funding. However, their revenue will allow them to crawl forward, forcing them to ask the 'startup euthanasia' question.

4. On average, large enterprise software companies' market valuations will stagnate; small and medium sized companies will mostly go down except for brief periods of 'acquisition hype'.

5. The number of 'Software as a Service' providers increases substantially, but they find low revenue in 2006.

6. The competition between Big-5 and Big-I (Indian) consulting increases significantly, setting up a 2007 consolidation scenario.

7. The Business Process Platform becomes the accepted standard as the foundation for enterprise software.

8. SOA continues as a major trend; however, more attention is focused on 'what to service orient' rather than 'how to service orient'.

9. Google continues to release new products in very short time frames. Microsoft takes notice but does not act.

10. Salesforce.com stock price drops by 50%.