
Delivering Business Services through modern practices and technologies. -- Cloud, DevOps and As-a-Service.

Saturday, December 03, 2011

Will Amazon Support Linux Containers?

When asked, "Will Amazon Support Linux Containers?" Raj comments, "Would love it. We may see a type of instance which allows containers on it. You will have to take the whole machine and not just a container on it. That way AWS will not have to bother about maintaining the host OS. Given the complexities I think it will be a lower priority for Amazon and as it may be financially counterproductive; they may never do it."

Tuesday, November 22, 2011

Is Cloud Foundry a PaaS?

The obvious answer would seem to be "yes" - after all, VMware told us it's a PaaS:

That should be the end of it, right? For some reason, when I hear "as-a-Service", I expect a "service" - as in Service Oriented. I don't think that's too much to ask. For example, when Amazon released their Relational Database Service, they offered me a published service interface:

https://rds.amazonaws.com/doc/2010-07-28/AmazonRDSv4.wsdl

I know there are people who hate SOAP, WS-*, WSDL, etc. - that's cool, to each their own. If you prefer, use the RESTful API: http://docs.amazonwebservices.com/AmazonRDS/latest/APIReference/

Note that the service interface IS NOT the same as the interface of the underlying component (MySQL, Oracle, etc.), as those are exposed separately.

Back to my question - is Cloud Foundry a PaaS?

If so, can someone point me to the WSDLs, RESTful interfaces, etc.?

Will those interfaces be submitted to DMTF, OASIS or another standards body?

Alternatively, is it merely a platform substrate that ties together multiple server-side technologies (similar to JBoss or WebSphere)?

Will cultural pushback kill private clouds?

I tend to agree with the premise that the enterprise will have difficulties in adopting private cloud but not for the reasons the authors noted. The IaaS & PaaS software is available. Vendors are now offering to manage your private cloud in an outsourced manner. More often than not, companies are educated on cloud and "get it". They have one group of people who create, extend and support the cloud(s). They have another group who use it to create business solutions. It's a simple consumer & provider relationship.

Traditionally, there are three ways things get done in Enterprise IT:

1. The CIO says "get'er done" (and writes a check)

2. A smart business/IT person uses program funds to sneak in a new technology (and shows success)

3. Geeks on the floor just go and do it.

With the number of downloads of open source stacks like OpenStack and Eucalyptus, it is apparent that model #3 is getting some traction. My gut tells me that the #2 guys are just pushing their stuff to the public cloud (begging forgiveness rather than asking permission). On #1, many CIOs are hopeful that they can just 'extend their VMware' play, while more aggressive CIOs are looking to the next generation cloud vendors to provide something that matches the public cloud features in a more direct manner.

There are adoption issues in the enterprise. However, they're the same old reasons. Fat org-charts aren't going away and will not be the life or death of private cloud. In my opinion, we need the CIOs to make bold statements on switching to an internal/external cloud operating model. Transformation isn't easy. And telling the CIO that they need to fire a bunch of managers in order to look more like a cloud provider is silly advice and a complete non-starter.

Friday, August 12, 2011

Measuring Availability of Cloud Systems

"Recall that the availability of a group of components is the product of all of the individual component availabilities. For example, the overall availability of 5 components, each with 99 percent availability, is: 0.99 X 0.99 X 0.99 X 0.99 X 0.99 = 95 percent."

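The arithmetic in that quote is easy to check. Below is a minimal sketch, assuming every component sits in series (all must be up for the system to be up); the function name `serial_availability` is my own and not from the quoted source.

```python
from math import prod


def serial_availability(component_availabilities):
    """Availability of a chain in which every component must be up.

    Each value is a fraction between 0 and 1 (0.99 means 99 percent).
    """
    return prod(component_availabilities)


# Five components at 99 percent each, as in the quote.
overall = serial_availability([0.99] * 5)
print(f"{overall:.4f}")  # 0.9510, i.e. roughly 95 percent
```

The same function shows how quickly the number falls as a stack adds more layers, which is why counting components matters when quoting a cloud SLA.
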
Thursday, August 11, 2011

OpenShift: Is it really PaaS?

- They're pre-installed in a clustered or replicated manner?

- They're monitored out of the box?

- Will it auto-scale based on the monitoring data and predefined thresholds? (both up and down?)

- They have a data backup / restore facility as part of the as-a-service offering?

- The backup / restore are as-a-service?

- The backup / restore use a job scheduling system that's available as-a-service?

- The backup / restore use an object storage system that has cross data center replication?
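To make the auto-scaling question concrete, here is a rough sketch of what "scale up and down on predefined thresholds" might look like. It is purely illustrative: the function name, the CPU metric, the threshold values and the instance limits are all my assumptions, not anything OpenShift documents.

```python
def desired_instance_count(current, cpu_percent,
                           scale_up_at=75.0, scale_down_at=25.0,
                           minimum=1, maximum=10):
    """Return the instance count a simple threshold policy would ask for.

    All thresholds and limits are hypothetical defaults chosen for the example.
    """
    if cpu_percent > scale_up_at:
        target = current + 1      # monitored load is high: add an instance
    elif cpu_percent < scale_down_at:
        target = current - 1      # monitored load is low: remove an instance
    else:
        target = current          # inside the band: leave the pool alone
    # Never leave the configured bounds, whatever the metric says.
    return max(minimum, min(maximum, target))
```

A platform that only implements the first branch scales up but never back down, which is exactly the distinction the question is probing.
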

Tuesday, April 26, 2011

Private Cloud Provisioning Templates

Sunday, April 24, 2011

Private Cloud Provisioning & Configuration

Tuesday, April 05, 2011

Are Enterprise Architects Intimidated by the Cloud?

Monday, April 04, 2011

Cloud.com offers Amazon API

"CloudBridge provides a compatibility layer for CloudStack cloud computing software that [supports] tools designed for Amazon Web Services with CloudStack.

The CloudBridge is a server process that runs as an adjunct to the CloudStack. The CloudBridge provides an Amazon EC2 compatible API via both SOAP and REST web services."

Saturday, April 02, 2011

The commoditization of scalability

Wednesday, March 16, 2011

Providing Cloud Service Tiers

In the early days of cloud computing, emphasis was placed on 'one size fits all'. However, as our delivery capabilities have increased, we're now able to deliver more product variations where some products provide the same function (e.g., storage) but deliver better performance, availability, recovery, etc. and are priced higher. I.T. must assume that some applications are business critical while others are not. Forcing users to pay for the same class of service across the spectrum is not a viable option. We've spent a good deal of time analyzing various cloud configurations, and can now deliver tiered classes of services in our private clouds.

Reviewing trials, tribulations and successes in implementing cloud solutions, one can separate tiers of cloud services into two categories: 1) higher throughput elastic networking; or 2) higher throughput storage. We leave the third (more CPU) out of this discussion because it generally boils down to 'more machines,' whereas storage and networking span all machines.

Higher network throughput raises complex issues regarding how one structures networks – VLAN or L2 isolation, shared segments and others. Those complexities, and related costs, increase dramatically when adding multi-speed NICs and switches, for instance 10GBASE-T, NIC teaming and other such facilities. We will delve into all of that in a future post.

Tiered Storage on Private Cloud

Where tiered storage classes are at issue, cost and complexity are not such dramatic barriers, unless we include a mix of network and storage (i.e., iSCSI storage tiers). For the sake of simplicity in discussion, let's ignore that and break the areas of tiered interest into: 1) elastic block storage (“EBS”); 2) cached virtual machine images; 3) running virtual machine (“VM”) images. In the MomentumSI private cloud, we've implemented multiple tiers of storage services by adding solid state drives (SSDs) to each of these areas, but doing so requires matching the nature of the storage usage with the location of the physical drives.

Consider implementing EBS via higher speed SSDs. EBS volumes are served over the network so that they remain attachable to various VMs. Unless a very high speed network carries the drive signaling and data, the network becomes the bottleneck and the dramatic speed improvements normally associated with SSD will likely not materialize. Whether one uses ATA over Ethernet (AoE), iSCSI, NFS, or other models to project storage across the VM fabric, even standard SATA II drives under load could overload a one-gigabit Ethernet segment. However, by exposing EBS volumes on their own 10GbE network segments, EBS traffic stands a much better chance of not overloading the network. For instance, at MSI we create a second tier of EBS service by mounting SSD on the mount points under which volumes will exist – e.g., /var/lib/eucalyptus/volumes, by default, on a Eucalyptus storage controller. Doing so gives users of EBS volumes the option of paying more for 'faster drives.'
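A back-of-the-envelope calculation shows why the network segment matters. The sketch below is only illustrative: the 100 MB/s per-drive figure is an assumed round number rather than a measurement from our labs, protocol overhead is ignored, and the function name is made up for the example.

```python
# Raw link capacity in megabytes per second (8 bits per byte, no overhead).
GIGABIT_ETHERNET_MB_S = 1_000 / 8        # 125 MB/s
TEN_GIGABIT_ETHERNET_MB_S = 10_000 / 8   # 1250 MB/s


def link_utilization(drive_mb_s, drive_count, link_mb_s):
    """Fraction of a link consumed when every drive streams at full rate.

    A result above 1.0 means the network, not the drives, is the bottleneck.
    """
    return drive_mb_s * drive_count / link_mb_s


# Assumption: two busy drives sustaining about 100 MB/s each.
print(link_utilization(100, 2, GIGABIT_ETHERNET_MB_S))      # above 1.0: saturated
print(link_utilization(100, 2, TEN_GIGABIT_ETHERNET_MB_S))  # well below 1.0
```

Plugging in the sustained throughput of an actual SSD makes the gap wider still, which is the argument for giving EBS traffic its own 10GbE segment.
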

While EBS gives users of cloud storage a higher tier of user storage, cloud operations themselves also offer a point of optimization, and thus another tier of service. The goal is to optimize the creation of images, and to spin them up faster. Two particular operations generate significant disk activity in a cloud implementation. First, caching VM images on hypervisor mount points. Consider Eucalyptus, which stores copies of kernels, ramdisks (initrd), and Eucalyptus Machine Image (“EMI”) files on a (usually) local drive at the Node Controllers (“NC”). One could also store EMIs on iSCSI, AoE or NFS storage, but the same discussion as that regarding EBS applies (pair fast networking with fast drives). The key to the EMI cache is not so much fast writes as rapid reads. Second, for each running instance of an EMI (i.e., a VM), the NC creates a copy of the cached EMI, and uses that copy for spinning up the VM. Therefore, what we desire is very fast reads from the EMI cache, with very fast writes to the running EMI store. Clearly that does not happen if the same drive spindle and head carry both operations.

In our labs, we use two drives to support the higher speed cloud tier operations: one for the cache and one for the running VM store. However, to get a Eucalyptus NC, for instance, to use those drives optimally, we must direct the reads and writes to different disks: one drive (disk1) dedicated to the cache, and one drive (disk2) dedicated to writing/running VM images. Continuing with Eucalyptus as the example setup (though other cloud controllers show similar traits), the NC will, by default, store the EMI cache and VM images on the same drive, which is precisely what we don't want for higher tiers of service.
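A quick sanity check for this kind of layout is to confirm that the cache directory and the running-image directory really sit on different mounted file systems. The sketch below is a generic check using only the Python standard library, not a Eucalyptus tool, and the function name is hypothetical. Note that it compares file system device IDs, so two partitions on the same physical disk would still pass; it catches the "nothing was mounted" mistake, not a shared spindle.

```python
import os


def on_separate_devices(path_a, path_b):
    """True when the two paths live on different mounted file systems.

    os.stat().st_dev identifies the device holding each path. Different
    partitions of one physical disk also report different IDs.
    """
    return os.stat(path_a).st_dev != os.stat(path_b).st_dev


# Usage: pass the cache directory and the running-VM directory of a node.
# The same path compared with itself is trivially on one device.
print(on_separate_devices(".", "."))  # False
```
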

By default, Eucalyptus NCs store running VMs on the mount point /usr/local/eucalyptus/???, where ??? represents a cloud user name. The NC also stores cached EMI files on /usr/local/eucalyptus/eucalyptus/cache, clearly within the same directory tree. Therefore, unless one mounts another drive (partition, AoE or iSCSI drive, etc.) on /usr/local/eucalyptus/eucalyptus/cache, the NC will create all running images by copying from the EMI cache to the run-space area (/usr/local/eucalyptus/???) on the same drive. That causes significant delays in creating and spinning up VMs. The simple solution: mount one SSD on /usr/local/eucalyptus, and then mount a second SSD on /usr/local/eucalyptus/eucalyptus/cache. A cluster of Eucalyptus NCs could share the entire SSD 'cache' drive by exposing it as an NFS mount that all NCs mount at /usr/local/eucalyptus/eucalyptus/cache. A shared cache introduces a race, however: the cloud may write an EMI to the cache due to a request to start a new VM on one node controller, yet another NC might attempt to read that EMI before the cached write completes, due to a second request to spin up that EMI (not an uncommon scenario). There exist a number of ways to solve that problem.
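One common way to avoid readers seeing a half-written image, sketched here as a general technique and not as what Eucalyptus itself does, is to write the file under a temporary name inside the cache directory and rename it into place only once the copy is complete. The function name `publish_to_cache` and the `.partial` suffix are assumptions made for the example. On a shared NFS cache, client-side caching may still delay when other nodes see the new name, so treat this as a starting point.

```python
import os
import shutil
import tempfile


def publish_to_cache(source_path, cache_dir, image_name):
    """Copy an image into the cache so readers never see a partial file."""
    final_path = os.path.join(cache_dir, image_name)
    # The temporary file lives in the cache directory itself so that the
    # final rename stays within one file system.
    fd, temp_path = tempfile.mkstemp(dir=cache_dir,
                                     prefix=image_name + ".",
                                     suffix=".partial")
    try:
        with os.fdopen(fd, "wb") as temp_file, open(source_path, "rb") as source:
            shutil.copyfileobj(source, temp_file)
            temp_file.flush()
            os.fsync(temp_file.fileno())  # push the data to disk before publishing
        # The rename replaces the name in a single step: a reader finds either
        # no cached image yet or the complete one.
        os.replace(temp_path, final_path)
    except BaseException:
        # Clean up the partial copy if anything went wrong.
        if os.path.exists(temp_path):
            os.remove(temp_path)
        raise
    return final_path
```

A node that finds no file under the final name simply treats the image as not cached yet, and anything ending in `.partial` is ignored.
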

The gist here: by placing SSD drives at strategic points in a cloud, we can create two forms of higher tiered storage services: 1) higher speed EBS volumes; and 2) faster spin-up time. Both create valid billing points, and both can exist together, or separately in different hypervisor clusters. This capability is now available via our Eucalyptus Consulting Services and will soon be available for vCloud Director.

Next up – VLAN, L2, and others for tiered network services.

Monday, March 14, 2011

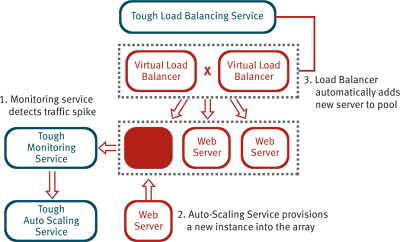

Auto Scaling as a Service

Wednesday, March 09, 2011

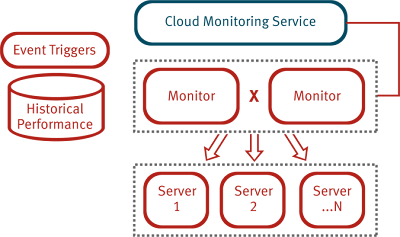

Non-Invasive Cloud Monitoring as a Service

The Tough Cloud Monitoring solution is our next generation offering targeting virtualized workloads, as well as PaaS services, housed in either traditional data centers or private cloud environments.

Tuesday, March 08, 2011

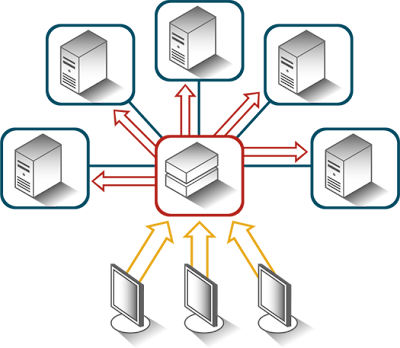

Tough Load Balancing as a Service

Last week, MomentumSI announced the availability of our Tough Load Balancing Service along with a Cloud Monitoring and Auto Scaling solution.

Wednesday, March 02, 2011

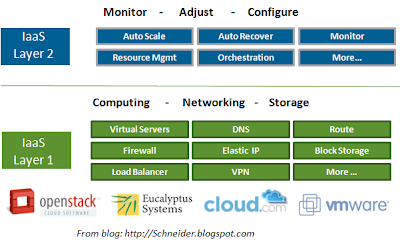

Separating IaaS into Two Layers

Some people call the first layer "Hardware-as-a-Service". It primarily focuses on the 'virtualization' of hardware, enabling better manipulation by the upper layers. This was the core proposition of the original EC2. There are some great vendors in this space like Eucalyptus, Cloud.com and VMware. Cool projects are also emerging out of OpenStack, which many of the aforementioned companies hope to adopt and extend.