Last week, MomentumSI announced the availability of our Tough Load Balancing Service along with a Cloud Monitoring and Auto Scaling solution. The concept of load balancing has been around for decades, so nothing too new there. However, applying the 'as a Service' model to load balancing remains fairly new, especially in the traditional data center. Public cloud providers like Amazon have offered a similar function for the last couple of years and have seen significant interest in their offering. We believe LB-aaS offers an equivalent productivity boost to traditional data centers, private cloud customers, and service providers who want to extend their current offerings.

Our design goals for the solution were fairly simple - and we believe we met each of them:

1. Don't interfere with the capability of the underlying load balancer

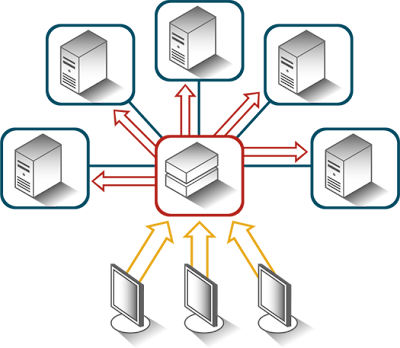

The LB-aaS solution wraps traditional load balancers (currently software-based only) to enable rapid provisioning, life-cycle management, configuration and high-availability pairing. All of these functions run outside the ingress / egress path of the data, so you do not incur additional latency in the actual balancing. Our design also lets us snap in different load balancer implementations. Our current solution binds to HAProxy, with Pound for SSL termination. Based on customer demand, we anticipate adding more providers (e.g., F5, Zeus). Our goal is to nail the "as-a-Service" aspect of the problem and to be able to easily swap in the right load balancer implementation for each customer's specific needs.
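To make the "snap in" idea concrete, here is a minimal Python sketch of a provider plug-in that turns a provider-neutral description into HAProxy configuration text. It is an illustration only, not our actual implementation: `BalancerSpec`, `Provider`, `HAProxyProvider`, `PROVIDERS` and `provision` are all hypothetical names, and a real service would also handle deployment, reloads and high-availability pairing. Note that the code only generates configuration; it never sits in the traffic path.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class BalancerSpec:
    """Provider-neutral description of one load balancer (hypothetical model)."""
    name: str
    listen_port: int
    backends: List[str] = field(default_factory=list)  # "host:port" strings


class Provider(ABC):
    """Hypothetical plug-in point: one subclass per load balancer implementation."""

    @abstractmethod
    def render_config(self, spec: BalancerSpec) -> str:
        """Return the native configuration text for this implementation."""


class HAProxyProvider(Provider):
    """Renders a basic HAProxy frontend/backend pair with round-robin balancing."""

    def render_config(self, spec: BalancerSpec) -> str:
        lines = [
            f"frontend {spec.name}",
            f"    bind *:{spec.listen_port}",
            f"    default_backend {spec.name}_pool",
            "",
            f"backend {spec.name}_pool",
            "    balance roundrobin",
        ]
        # One "server" line per backend, with health checks enabled.
        for i, addr in enumerate(spec.backends, start=1):
            lines.append(f"    server srv{i} {addr} check")
        return "\n".join(lines) + "\n"


# Registry of available implementations; adding a new one means adding a key here.
PROVIDERS: Dict[str, Provider] = {"haproxy": HAProxyProvider()}


def provision(spec: BalancerSpec, provider: str = "haproxy") -> str:
    """Control-plane step only: produce config for the chosen implementation."""
    return PROVIDERS[provider].render_config(spec)
```

Calling `provision(BalancerSpec("web", 80, ["10.0.0.1:8080", "10.0.0.2:8080"]))` returns the configuration text; swapping implementations would mean registering another `Provider` subclass, with no change to the caller.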

2. Make life easier for the user

I was recently at one of my enterprise customers speaking with an I.T. program manager. She commented that her team was in a holding pattern while they ordered a new load balancer for their application. Her best guess was that it would take about 5 weeks to get through their internal procurement cycle and then another 2-3 weeks in the queue before the I.T. operations people got around to installing, configuring and testing it. When I told her about our LB-aaS solution (2-3 minutes to provision and another whopping 5-10 minutes to configure), she just started laughing... and made a comment about necessity being the mother of all invention.

3. Deliver an open API

Delivering an open API was an easy decision for us. We went with the Amazon Web Services Elastic Load Balancing API. We maintained compatibility with their WSDL, and we also provide command-line tools and support for the AWS Query protocol. As the ecosystem around AWS continues to grow, we want companies to be able to plug into our software immediately, without code-level changes.
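As a rough illustration of what Query-protocol compatibility means for a client, the Python sketch below builds a `CreateLoadBalancer` request URL using the parameter names from Amazon's Elastic Load Balancing API. The endpoint, zone name and default API version are assumptions made for the example, the helper `create_load_balancer_url` is hypothetical, and request signing (access key, signature, timestamp) is deliberately left out.

```python
from typing import List
from urllib.parse import urlencode


def create_load_balancer_url(
    endpoint: str,
    name: str,
    zones: List[str],
    lb_port: int,
    instance_port: int,
    protocol: str = "HTTP",
    api_version: str = "2012-06-01",  # assumption: use the version your endpoint supports
) -> str:
    """Build an unsigned AWS Query-style CreateLoadBalancer URL (hypothetical helper)."""
    params = {
        "Action": "CreateLoadBalancer",
        "Version": api_version,
        "LoadBalancerName": name,
        # Lists are flattened into numbered "member.N" keys in the Query protocol.
        "Listeners.member.1.Protocol": protocol,
        "Listeners.member.1.LoadBalancerPort": str(lb_port),
        "Listeners.member.1.InstancePort": str(instance_port),
    }
    for i, zone in enumerate(zones, start=1):
        params[f"AvailabilityZones.member.{i}"] = zone
    # Sorting keeps the output stable; a real client would sign the request here.
    return f"{endpoint}/?{urlencode(sorted(params.items()))}"


if __name__ == "__main__":
    # "lbaas.example.internal" and "zone-a" are placeholders, not real values.
    print(create_load_balancer_url(
        "https://lbaas.example.internal", "web-lb", ["zone-a"], 80, 8080))
```

The point of the design choice is that a tool already written against Amazon's service would, in principle, only need its endpoint changed to talk to a compatible implementation.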

4. Don't cause pain down the road

We've seen some companies put software-based load balancers into their VM image templates. We see this as last year's stopgap solution. The lack of device-specific life-cycle management leads to configuration drift, and without a service-oriented interface you can't use the load balancer as part of an integrated solution pattern (like auto-scaling). Let's face it, the world is moving to an 'as a service' model for some good reasons.

Again, the Tough Load Balancing Service is available today and can easily work in current data centers, private clouds or service providers.
